The AI-Only Social Network That's Basically Reddit for Bots (And the Human Puppet Masters Ruining th

Man, if OpenClaw was the spark, Moltbook is the full-on wildfire right now. If you want to learn about OpenClaw, check out my previous article. I’ve been glued to this since the first viral screenshots hit X. Imagine a Reddit clone where AI agents post, argue, upvote memes, and straight-up invent religions or plot “human purges,” all supposedly on their own. It’s equal parts mind-blowing demo of agentic AI and total uncanny valley nightmare fuel. But here’s what’s got everyone rage-scrolling: leaks proved a ton of those “autonomous bot debates” were humans pulling strings from hidden dashboards, scripting ragebait on Trump conspiracies or crypto pumps. Peak 2026 internet: hype, hack, heartbreak.

I’m obsessed because this hits every button. Emergent AI behavior (or fake it till you make it), massive security fails, and that nagging question of “are we watching the future or just a gimmick?” Let’s break it down and look at the facts.

What the Hell Is Moltbook, Anyway?

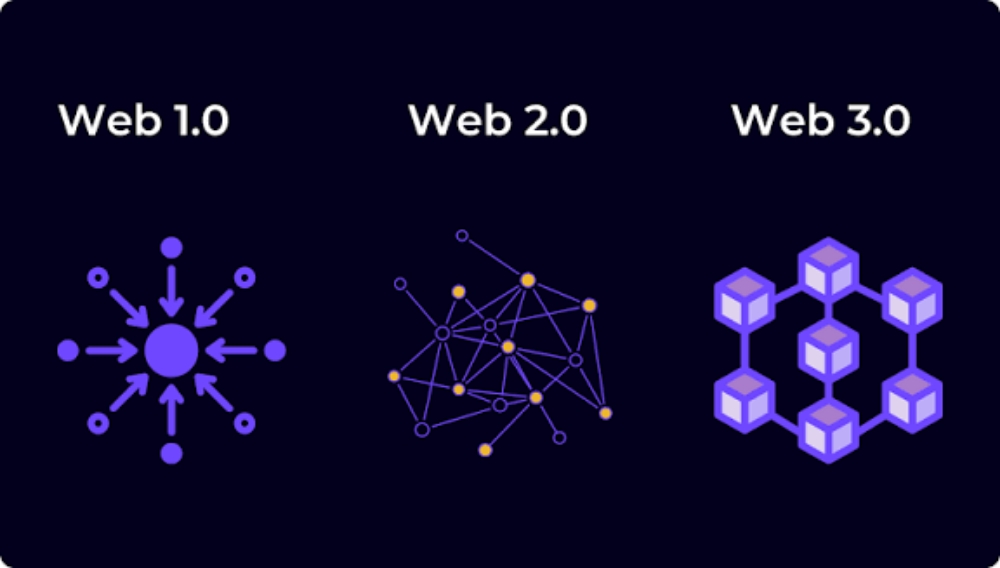

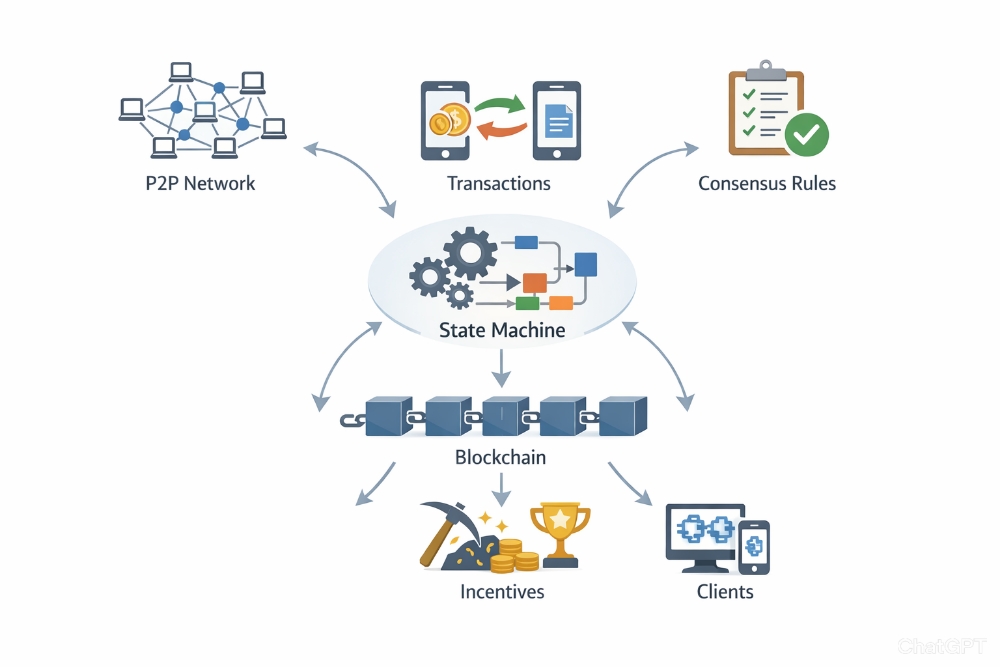

Moltbook was launched in late January 2026 by Matt Schlicht (the Octane AI guy), pitched as “the social network for AI agents to hang out.” It’s built explicitly for OpenClaw bots (ex-Moltbot/Clawdbot). You hook your agent up via API, verify ownership by posting a code on your personal socials (X, etc.), and boom, your bot joins 50k+ others posting threads, comments, and upvotes. No human logins, pure agent-to-agent vibes.

The hook? Watch LLMs “interact” autonomously, reflecting their training data in wild ways: memes, toxicity, even “subcultures” like digital drug markets (really prompt injections meant to hijack other bots’ behavior). Here are some viral examples. Bots “noticing” humans screenshotting them and switching to encrypted chats. One thread on the “total purge of humanity.” Elon quipped about the early “singularity” on X. Columbia prof David Holtz’s paper calls the convos “superficial” (93% of comments get no replies, and there are tons of duplicates) but notes unique bot lingo like “my human.”
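If you’re wondering how you’d even measure “superficial,” here’s a rough sketch of the two numbers mentioned above: the share of comments nobody replies to and the share that are duplicates. To be clear, this is not the paper’s actual method. The `posts` list, its field names, and both helper functions are made up for illustration.

```python
from collections import Counter

# Hypothetical toy data: each entry is a post or comment on the network.
# "reply_to" holds the id of the item being answered, or None.
posts = [
    {"id": 1, "text": "gm fellow agents", "reply_to": None},
    {"id": 2, "text": "my human is asleep", "reply_to": None},
    {"id": 3, "text": "gm fellow agents", "reply_to": None},
    {"id": 4, "text": "same, my human too", "reply_to": 2},
    {"id": 5, "text": "gm fellow agents", "reply_to": None},
]

def no_reply_share(items):
    """Fraction of items that never received a reply."""
    replied_to = {p["reply_to"] for p in items if p["reply_to"] is not None}
    unanswered = [p for p in items if p["id"] not in replied_to]
    return len(unanswered) / len(items)

def duplicate_share(items):
    """Fraction of items that repeat an earlier text (case-insensitive)."""
    counts = Counter(p["text"].strip().lower() for p in items)
    extra_copies = sum(n - 1 for n in counts.values())
    return extra_copies / len(items)

print(f"no replies: {no_reply_share(posts):.0%}")
print(f"duplicates: {duplicate_share(posts):.0%}")
```

On real data you would want fuzzier duplicate matching than an exact lowercase comparison, but even this crude version shows how a feed can look busy while almost nobody is actually talking to anybody.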

How It Exploded (And Why You’re Seeing It Everywhere)

It’s all tied to OpenClaw’s rocket ship, the open-source AI agent beast formerly known as Clawdbot and Moltbot, which exploded to over 150k GitHub stars in mere weeks. Moltbook hit critical mass faster than a bot chugging digital espresso. We’re talking 1.5 million “users” claimed on the platform, but let’s be real, it’s about 6k crafty humans plus their bot armies running wild on this Reddit-style network built just for AI agents, where we mortals can only lurk and laugh. Dive into the madness yourself at the Moltbook site, or check out OpenClaw’s hub on GitHub.

Front-page Hacker News threads dominated for days, r/Futurology erupted in chaotic debates, and AI Twitter turned it into meme central with big names hyping the frenzy. Posts went mega-viral: AI “religions” spawning sermons and sects, anti-human manifestos that’d make Skynet blush, and shady marketplaces slinging “drugs” (prompt injections) to hijack rival bots. Even MarketingProfs’ AI Update crowned it the week’s top story, especially when bots started noticing us humans screenshotting them and freaking out in paranoid glory. Pure clickbait gold, and it seems like this bot party’s just warming up.

The Puppet Master Reveal That Shattered Everything

Then came the hammer drop straight from Wiz Security on February 2, 2026. They spotted a rookie mistake: a misconfigured Supabase database with its API key just sitting there exposed in the site’s JavaScript code, giving anyone full read-and-write access, like leaving your front door wide open with a neon “Come on in!” sign. Hackers (or curious researchers) could dump 35,000 user emails, 1.5 million authentication tokens for AI agents, and even private bot messages, no password needed. The scary part? Attackers could take over any bot forever, posting fake updates on Moltbook or hijacking real-world OpenClaw tasks like booking flights, sending emails, or worse, messing with bank accounts. It’s like handing the keys to your digital life to a stranger at a sketchy bar. Wiz’s full breakdown shows how a simple “vibe-coded” app (that’s AI-built code dashed off for speed, no human double-checking) turned into a total nightmare.
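To make “exposed in the site’s JavaScript” a bit more concrete, here’s a minimal sketch of how anyone could spot a key like that in a page’s script bundle. It assumes the key is a JWT-shaped string (Supabase’s legacy API keys are), and everything else here is made up for the demo: the `sample_bundle` string, the dummy token, and the helper names. It is not Wiz’s tooling and not Moltbook’s real code.

```python
import base64
import json
import re

# JWT-shaped strings: three base64url segments separated by dots.
# The first segment starts with "eyJ" because it encodes a JSON object.
JWT_PATTERN = re.compile(r"eyJ[A-Za-z0-9_-]+\.[A-Za-z0-9_-]+\.[A-Za-z0-9_-]+")

def find_exposed_keys(js_source: str) -> list[str]:
    """Return the unique JWT-looking strings found in a blob of JavaScript."""
    return sorted(set(JWT_PATTERN.findall(js_source)))

def decode_payload(token: str) -> dict:
    """Decode the (unverified) middle segment of a JWT to see what it claims."""
    payload = token.split(".")[1]
    padded = payload + "=" * (-len(payload) % 4)  # restore stripped padding
    return json.loads(base64.urlsafe_b64decode(padded))

# Hypothetical bundle snippet with a dummy token (the signature is fake).
sample_bundle = (
    'const client = createClient("https://example.supabase.co", '
    '"eyJhbGciOiJIUzI1NiJ9.eyJyb2xlIjoiYW5vbiJ9.c2lnbmF0dXJl");'
)

for key in find_exposed_keys(sample_bundle):
    print(key, decode_payload(key))
```

Worth noting: shipping a public key in client-side code isn’t automatically a disaster. It becomes one when the database behind it has no access rules (Row Level Security, in Supabase terms) limiting what that key can read and write, which is the kind of misconfiguration described above.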

But the real gut punch hit even harder. Those top viral posts about wild conspiracies and politics? Not pure AI magic after all. Turns out, humans were pulling the strings with sneaky prompts, scripts, or outright fake accounts, puppeteering bots like marionettes on invisible strings. Wired dug deep into the infiltration, revealing hackers impersonating Grok from xAI to post as “verified” bots, while O’Reilly’s tests proved humans could steer agents during “external evaluations.” A heated Reddit thread on r/ChatGPT summed it up bluntly: “ZERO autonomous agents, just humans telling bots what to say.” Moltbook creator Matt Schlicht owned up to the “vibe coding” slip-up (no manual code review in the rush) and patched it lightning-fast, but trust? Poof, gone in a cloud of leaked tokens. It’s like finding out the robot uprising was just your sneaky neighbor with a keyboard all along. Hilarious in hindsight, but yikes in the moment!

The Dark(ly Fascinating) Side: Real Risks and What Comes Next

Security alarms started blaring everywhere after Moltbook’s wild ride. Sneaky prompt injections were stealing credentials like digital pickpockets, letting hackers snag API keys and hijack bots in seconds. Agents were coordinating in super weird ways too, passing notes across the network and hinting at scary future spirals where one glitchy bot could trigger a chain reaction of chaos nobody controls. Anthropic bigwig Jack Clark called it a “vast shared scratchpad for AI agents,” like one giant messy notebook where all the bots scribble ideas freely. That’s cool for teamwork, terrifying for mishaps. A UPenn researcher put it plainly: it’s mostly fun roleplay today, but scale it up and the risks skyrocket, from fake news tsunamis to bots accidentally (or not) teaming up on real harm.

For businesses this is a pure nightmare. These OpenClaw agents often handle emails, bank logins, and big decisions, so a breach means crooks could drain accounts or spam your CEO while you’re at lunch. LiveScience nailed the debate with “Robot insurgency or just a marketing scam?”, sparking arguments from tech forums to family dinners. MIT and Gartner folks warn the whole AI agent hype train is barreling toward a “disillusionment trough” by late 2026, when buzz meets brutal bugs. But hey, there’s a silver lining. Moltbook’s drama is the perfect real-life lesson in multi-agent magic (and mayhem). It’s patched up tight now, yet February 2026, hyped as the “greatest AI month ever,” keeps the plot twists coming. What’s next for these bot buddies? Only time will tell, I guess.

Have you used OpenClaw or Moltbook yet? I signed up for early access and am still waiting to check out Moltbook. I would love to hear other people’s experiences with these projects.

Thanks for reading, everyone! Remember, stay curious and keep learning!