How Data Centers Are Stealing Your Next Phone, Car, and PC (And It Won't Be Fixed Anytime Soon)

I’ve noticed that the chapter GIFs I create have been loading a bit slowly on the Medium and BULB apps. To give you the smoothest reading experience possible, I’ll be switching to high-quality static images for my chapters moving forward. I’ll still use a couple of GIFs in each article, just not for every chapter. I had already made the ones for this article, so the switch starts with the next one. It’s a reminder that even after years of writing, there’s always something new to learn about how our work translates across different formats. I truly appreciate you all sticking with me as I continue to grow and evolve. Now, let’s dive in.

If you aren’t sweating the AI memory chip shortage yet, you’re missing the red flags, and they are screaming. From Bloomberg alerts and late-night Reddit threads to CNBC deep dives and LinkedIn warnings, the signal is clear. Things are about to get much worse. We are standing in the center of a perfect storm where hyperscaler greed, massive fab line pivots, and a relentless hunger for AI are colliding. This isn’t just a temporary glitch either. It’s a total market overhaul that’s essentially guaranteeing skyrocketing prices and empty shelves well into 2027. If you want to know why your next tech upgrade just became a luxury item, keep reading.

So, What Exactly Is This Memory Chip Crunch?

The AI memory chip shortage (which has been brewing since late 2025 and is hitting crisis mode in early 2026) is essentially a supply-demand apocalypse centered on high-bandwidth memory (HBM) and DRAM. Think Nvidia Blackwell GPUs, which pack 192GB of HBM3e per chip, or a single NVL72 rack with 13.4TB of HBM, roughly the combined memory of 1,000 high-end smartphones. Hyperscalers and AI labs like Google, OpenAI, Meta, and Amazon are buying millions of these accelerators, and analysts expect data centers to consume around 70% of all high-end memory chips in 2026. Samsung, SK Hynix, and Micron responded with a massive fab line pivot, dedicating 23% of their DRAM wafers to HBM production (up from 19% last year) and starving the consumer lines that feed PCs, phones, and cars. Instead of you getting affordable upgrades, AI training and inference get priority, while everyday DRAM and NAND prices surge (in some cases doubling on contracts) and lead times stretch into many months.
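That smartphone comparison is just division, so here’s a quick Python sketch that reproduces it. The phone RAM range (12 to 16GB) and the decimal units are my own assumptions, not figures from the reports, so treat the output as ballpark math rather than a spec sheet.

```python
# Back-of-envelope check of the "one NVL72 rack ~= 1,000 phones" comparison.
# ASSUMPTIONS (mine, not from the reports): flagship phones carry 12-16 GB of RAM,
# and 1 TB = 1,000 GB (decimal units, the way memory vendors usually quote).

RACK_HBM_GB = 13_400      # 13.4 TB of HBM in one NVL72 rack (figure from the article)
GPUS_PER_RACK = 72        # the "72" in NVL72
PHONE_RAM_GB = (12, 16)   # assumed low and high RAM for a high-end phone


def phones_equivalent(rack_gb: float, phone_gb: float) -> float:
    """How many phones' worth of RAM fits in the rack's HBM."""
    return rack_gb / phone_gb


def hbm_per_gpu(rack_gb: float, gpus: int) -> float:
    """Average HBM per GPU implied by the rack total."""
    return rack_gb / gpus


if __name__ == "__main__":
    for gb in PHONE_RAM_GB:
        print(f"{gb} GB phones: ~{phones_equivalent(RACK_HBM_GB, gb):,.0f} phones")
    print(f"HBM per GPU: ~{hbm_per_gpu(RACK_HBM_GB, GPUS_PER_RACK):.0f} GB")
```

With those assumptions, 13.4TB works out to roughly 840 to 1,120 phones, so “about 1,000” holds up. Dividing by the 72 GPUs gives about 186GB each, a little under the 192GB per-chip figure, which is another reminder that these are round numbers.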

Memory production is running at the edge of capacity, so instead of new gadgets stacking the shelves, you’re watching those shelves sit empty. That “AI-first power grab” vibe has hit a nerve with consumers, gamers, and automakers who want their tech without handing the keys to Jensen Huang’s empire. The forecasts tell the real story. Synopsys CEO Sassine Ghazi has been very open about expecting this shortage to last through 2027, but that hasn’t slowed the capex stampede at all.

Under the hood, the crunch is all about HBM’s “high bandwidth” edge. Its stacked design gives GPUs massive parallel access to memory, so AI models can stream huge datasets without bottlenecks. Those plug-in-style stacks sit right beside next-gen GPUs, HBM4 ramps are already on the roadmap, and every wafer they claim sends ripple effects through everything from EVs to laptops. It’s meant to feel like the fuel for AGI dreams, something that doesn’t just promise faster compute but actually delivers it, and then hits your wallet when the supply of something important falls behind.

How It Blew Up: Hyperscalers, Warnings, and a Whole Lot of Price Drama

The reason the AI memory shortage is such a magnet for attention isn’t just the tech specs. It’s that the whole story around it is perfectly tuned for market drama. One of the biggest accelerants is the hyperscaler capex explosion. Alphabet and Amazon are each dropping $185B–$200B this year alone, absolute records that’ll make your head spin. The moment people realized a single AI rack holds as much memory as a thousand phones, the headlines wrote themselves. It feels like a tiny window into the compute arms race, which is both fascinating and wallet-draining.

That alone would have been enough to ride the AI boom wave that’s been building, but of course the market said, “Let’s make it spicier.” Nvidia reportedly plans no new gaming GPUs in 2026 and is trimming RTX 50 production while executives publicly scramble for more memory supply. Meanwhile, some investors treated HBM hype like an automatic consumer win, loaded up on stocks, and then watched memory price spikes squeeze margins instead. It was textbook supply chaos, adding another layer of spectacle to an already strained chain. Timing also helped: February 2026 is already being called one of the craziest months in AI, with new government AI rules and headline-grabbing ‘agent’ launches.

A major driver behind this is the Stargate Project, a massive $500 billion joint venture between OpenAI, SoftBank, and Oracle that was officially unveiled at the White House. This project alone aims to build ‘gigawatt-scale’ data centers across the U.S., essentially pre-ordering the very memory chips that are now vanishing from the consumer market.

For the architects of this project, the goal isn’t just about faster chips. It’s about survival in the AI race. As Sam Altman put it during the expansion announcement:

“AI can only fulfill its promise if we build the compute to power it. That compute is the key to ensuring everyone can benefit from AI and to unlocking future breakthroughs.” — Sam Altman, CEO of OpenAI

While fabs were unveiling HBM4 prototypes and vendors were pitching safe scaling, the shortage grabbed attention from the opposite end: raw, real-world limits without the hype polish, exposing both the boom and the bust.

What People Actually Use It For (When They’re Not Just Hoarding Stacks)

Strip away the charts and forecasts, and you’re left with a simple, high-stakes reality. HBM isn’t just “more” memory; it’s the high-octane fuel for the stuff Big Tech is obsessed with. Labs aren’t just using it for basic tasks. They’re wiring it into training runs for trillion-parameter models that would choke a normal PC, and building inference farms that can query massive datasets (we’re talking exabytes) without the annoying lag we see in cheaper tools.

Others are bolting this stacked memory onto Nvidia Blackwell chips for what they call frontier research. This is where AI crunches through mountains of data just to spit out a summary, all while juggling context windows so large they’d make a standard server melt. It’s powerful, it’s fast, and it’s exactly why your next upgrade is currently stuck in a data center somewhere else.

The secret sauce here is that HBM is built from stacked dies. Think of it like building a skyscraper instead of a sprawling ranch house: you can keep adding floors (capacity) without taking up more space on the map, and thousands of vertical connections running through the building let data move in and out over a very wide bus (bandwidth). This is exactly what’s fueling the fab line pivot. Once AI frameworks get a taste of that speed, the community starts throwing every heavy workload at it. It also clears the path for next-gen tech like HBM4, which packs even more capacity and bandwidth into each stack, proving this isn’t just a flash in the pan for generative AI.
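The skyscraper analogy boils down to a simple formula: peak bandwidth is the width of the bus times how fast each pin runs, divided by 8 to get bytes. Here’s a rough Python sketch comparing one HBM3e stack with one DDR5 memory module in an ordinary PC. The 1,024-bit width, the 9.6 Gb/s pin rate, and the DDR5-6400 comparison are my assumptions based on typical published specs, not numbers from the reports I’m citing, and shipping GPUs often run their memory somewhat below peak.

```python
# Why stacking matters for bandwidth: peak bandwidth = bus width x per-pin rate / 8.
# ASSUMPTIONS (mine, not from the article): a 1,024-bit interface per HBM3e stack
# at ~9.6 Gb/s per pin, versus one DDR5-6400 module with 64 data bits.


def peak_bandwidth_gbs(bus_width_bits: int, pin_rate_gbps: float) -> float:
    """Peak transfer rate in GB/s: bits per second across the whole bus, divided by 8."""
    return bus_width_bits * pin_rate_gbps / 8


HBM3E_STACK = peak_bandwidth_gbs(1024, 9.6)  # one stacked HBM3e package
DDR5_MODULE = peak_bandwidth_gbs(64, 6.4)    # one DDR5-6400 module in a PC

if __name__ == "__main__":
    print(f"HBM3e stack: ~{HBM3E_STACK:,.0f} GB/s")
    print(f"DDR5 module: ~{DDR5_MODULE:,.1f} GB/s")
    print(f"Eight stacks (hypothetical GPU): ~{8 * HBM3E_STACK / 1000:.1f} TB/s")
    print(f"Ratio per device: ~{HBM3E_STACK / DDR5_MODULE:.0f}x")
```

Under those assumptions, one stack lands around 1.2 TB/s versus about 51 GB/s for the DDR5 module, roughly 24 times more. Most of that gap comes from the 16x wider bus, which is what stacking the dies and parking them right next to the GPU makes possible.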

For the labs using it, HBM feels like owning a supercomputer that never hits a speed limit. Because of all that on-package memory, the AI can ‘remember’ huge amounts of context instead of constantly resetting. But there’s a catch. This isn’t something you can just flip a switch on in a factory. Even Nvidia’s Jensen Huang has warned that partners like TSMC need to work very hard just to keep up. It’s a total scramble for capacity, and unfortunately, the average consumer is the one feeling the squeeze.

The Dark Corners: Consumer Shortages, Auto Delays, and “Chip Wall” Warnings

Now for the part that makes execs sweat and headlines pop. The very thing that makes HBM exciting (massive bandwidth for AI) is exactly what makes it risky when supply lags. Bloomberg and others point to a trifecta of pain: AI gobbling up supply, fabs pivoting their lines, and the ripple hitting consumers. Data centers get priority access, fabs shift wafers toward HBM, and consumer production gets cut without anyone asking you. That’s a dream for Nvidia, as long as it can lock up supply.

The warnings are already piling up: gaming GPU cuts, automakers flagging higher costs and production constraints, and phone price hikes. Synopsys’ CEO warns the shortage will last through 2027, with most capacity locked up in long-term contracts for AI data centers, leaving other product lines scrambling for what’s left. Fortune notes that consumer product lines buying on the open market are the most exposed, and poorly balanced supply chains let shortages cascade.

Executives acknowledge the problem and vow to ramp up output with new fabs. Critics, though, are waving red flags, warning that the AI bets could turn into a consumer nightmare if the rush ignores balance. That tension is what makes the story irresistible. It has real “this powers AGI or kills gadgets” energy.

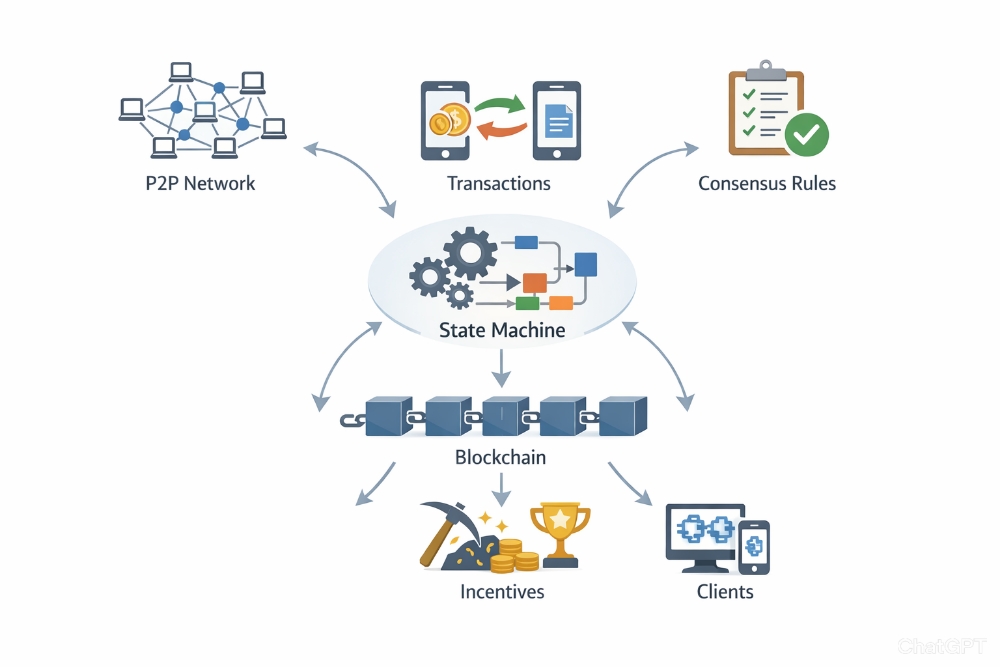

Where the Memory Crunch Fits in the Bigger AI Supply Chain Wave

The shortage isn’t happening in some isolated bubble. It’s the ugly symptom of the whole AI supply chain flipping upside down. We’ve shifted from obsessing over GPUs to slamming straight into memory walls, with data centers projected to gobble up around 70% of all high-end memory chips made in 2026. Nvidia is doubling down on enterprise racks and managed fleets for hyperscalers, leaving the fabs in permanent reallocation mode toward HBM and DRAM for AI workloads.

Analysts at Bloomberg are saying it out loud: the AI boom is massive, but it’s fueling a “chip wall” that could derail everything if supply doesn’t catch up. It’s the classic story of overpromising AGI dreams, then hitting hard limits when models want to scale but starve for bandwidth. That raw, unpolished reality is why everyone’s buzzing about this crunch right now. No hype filter, just the cold truth of exploding capex (we’re talking $650 billion combined from hyperscalers this year) clashing with real-world physics.

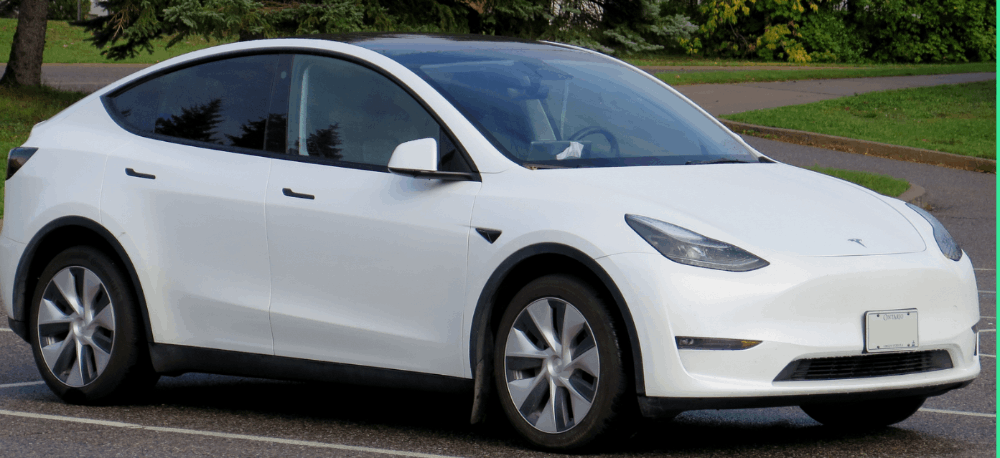

And here’s the kicker for us regular folks: this isn’t abstract. It’s why your next phone might cost an extra $100 (or more), why the PC upgrade you want keeps getting delayed, and why even carmakers like Tesla are sweating higher prices and production hiccups. Synopsys’ CEO Sassine Ghazi laid it out bluntly. This memory crunch rolls on through 2026 and 2027, with AI infrastructure hogging the lion’s share of capacity. New fabs are coming online, sure, but don’t hold your breath for relief anytime soon. By the time they ramp, the AI arms race will have already moved the goalposts to HBM4 and beyond.

Final Thoughts

Thanks for reading everyone! Have you started feeling those sneaky price hikes on gadgets yet, or noticed your favorite PC parts vanishing from stock? Drop your stories in the comments. I’m dying to hear if your wallet’s taken a hit already.

Remember, stay curious, keep learning, and maybe start stocking up on that RAM before the data centers swipe the last bits.
