Horizontal vs. Vertical Scaling

Horizontal and vertical scaling are the two fundamental strategies for handling growing workloads and increasing a system's performance and capacity. Here's a brief overview of each:

- Horizontal Scaling (Scaling Out/In):

- Definition: Horizontal scaling involves adding more nodes (servers or machines) to a system to distribute the workload more evenly. This can include adding more servers to a web server cluster or more nodes to a database cluster.

- Advantages: It offers high availability and redundancy since the system can remain functional even if one or more nodes fail. It's also more flexible, as you can add or remove resources as needed.

- Disadvantages: It adds complexity in network management and data consistency, because work and state are spread across several nodes. Also, not all applications or databases can easily scale horizontally.

- Use Cases: Web applications, cloud computing, and databases that support distributed architectures (like NoSQL databases).

- Vertical Scaling (Scaling Up/Down):

- Definition: Vertical scaling involves increasing the capacity of an existing machine or node by adding more resources like CPU, RAM, or storage. This is akin to upgrading the hardware of an existing server.

- Advantages: It is simpler than horizontal scaling, since there are fewer physical or virtual machines to manage and none of the coordination that a distributed system requires.

- Disadvantages: A single machine can only be scaled so far. Upgrading hardware often involves downtime, and the machine remains a single point of failure.

- Use Cases: Applications that are hard to distribute across multiple nodes, or that need high computing power on a single node.

In practice, many modern systems use a combination of both horizontal and vertical scaling to balance the benefits and drawbacks of each approach. This allows for more efficient and resilient infrastructure, especially in environments with variable workloads and demands.

When scaling horizontally, the back-end architecture has to support it: you should be able to add more machines or resources without disrupting the system or making significant changes to it. Here are some common architectural patterns and considerations for horizontal scaling:

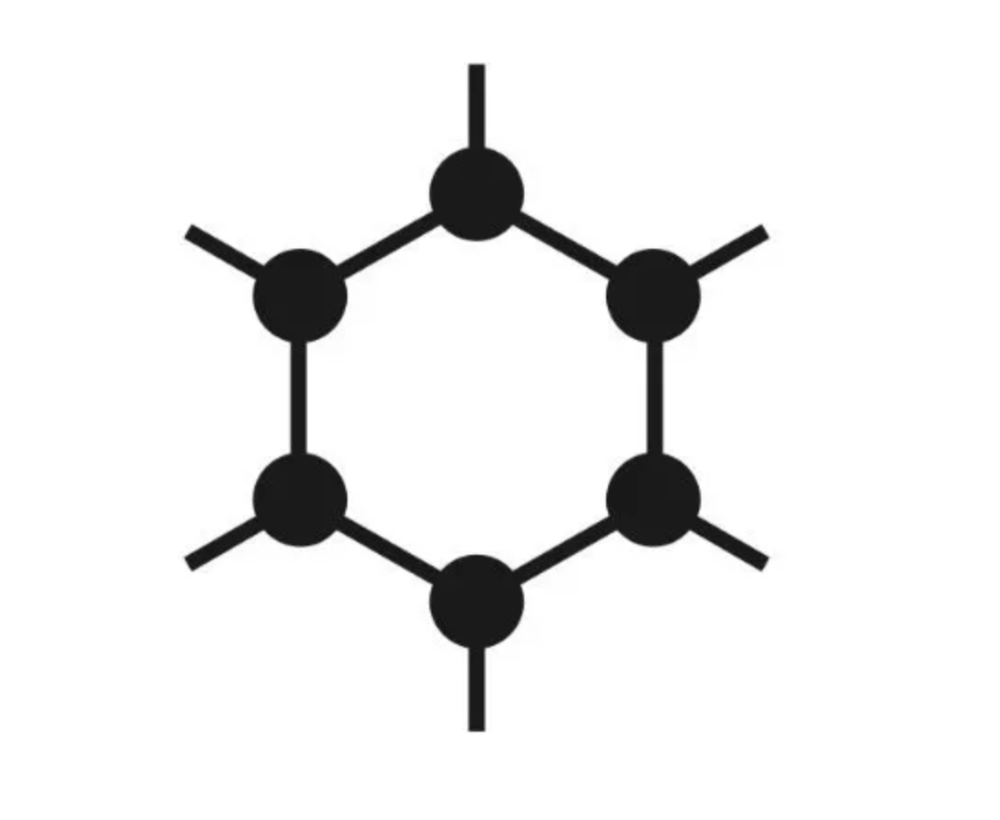

- Microservices Architecture:

- In a microservices architecture, the application is divided into a collection of smaller, independent services. Each service runs in its own process and communicates with other services through a well-defined interface using lightweight mechanisms, often an HTTP-based API.

- This approach allows for individual services to be scaled independently, depending on their specific resource requirements.

- Stateless Architecture:

- Stateless applications do not keep any client state on the individual application server between requests. This approach allows any server to respond to any request at any time, making it easier to distribute the load across multiple servers.

- In stateless architectures, any session or state data is stored on the client or in a central data store that all nodes can access (like a database or a cache).
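
The stateless idea above can be sketched as follows. This is a minimal illustration, not a production design: `SharedSessionStore` is a hypothetical in-memory stand-in for the central data store (in practice a database or cache), and `AppServer` and `add_to_cart` are names invented for the example. The point it shows is that two different servers can handle consecutive requests for the same session because neither keeps the session in its own memory.

```python
from dataclasses import dataclass, field


@dataclass
class SharedSessionStore:
    """Hypothetical stand-in for a central store that every node can reach."""

    _data: dict = field(default_factory=dict)

    def load(self, session_id: str) -> dict:
        # Return a copy so callers cannot mutate stored state by accident.
        return dict(self._data.get(session_id, {}))

    def save(self, session_id: str, state: dict) -> None:
        self._data[session_id] = dict(state)


class AppServer:
    """A node that holds no client state of its own between requests."""

    def __init__(self, name: str, store: SharedSessionStore) -> None:
        self.name = name
        self.store = store

    def add_to_cart(self, session_id: str, item: str) -> dict:
        # All session state is read from and written back to the shared store.
        state = self.store.load(session_id)
        cart = state.get("cart", []) + [item]
        state["cart"] = cart
        self.store.save(session_id, state)
        return {"served_by": self.name, "cart": cart}


store = SharedSessionStore()
server_a = AppServer("a", store)
server_b = AppServer("b", store)

# The same session is served by two different nodes in a row.
first = server_a.add_to_cart("session-1", "book")
second = server_b.add_to_cart("session-1", "pen")
print(first)
print(second)
```

Because the servers share nothing except the store, either one could be removed or a third one added without losing the cart.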

- Load Balancing:

- Load balancers distribute incoming application traffic across multiple servers to ensure no single server bears too much demand.

- They can route requests based on various factors such as server load, server health, geographic location of the client, etc.
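
As a rough sketch of two routing strategies a load balancer might use, the example below implements round-robin selection that skips unhealthy servers and a least-connections pick. The `Backend` fields, class names, and server names are assumptions made for the illustration; real load balancers track health and load through their own probes and counters.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass
class Backend:
    name: str
    healthy: bool = True
    active_connections: int = 0


class RoundRobinBalancer:
    """Hands out backends in turn, skipping any marked unhealthy."""

    def __init__(self, backends):
        self._backends = list(backends)
        self._ring = cycle(self._backends)

    def pick(self) -> Backend:
        # Try each backend at most once per call before giving up.
        for _ in range(len(self._backends)):
            candidate = next(self._ring)
            if candidate.healthy:
                return candidate
        raise RuntimeError("no healthy backends available")


def pick_least_loaded(backends) -> Backend:
    """Chooses the healthy backend with the fewest active connections."""
    healthy = [b for b in backends if b.healthy]
    if not healthy:
        raise RuntimeError("no healthy backends available")
    return min(healthy, key=lambda b: b.active_connections)


backends = [
    Backend("web-1", active_connections=3),
    Backend("web-2", healthy=False),
    Backend("web-3", active_connections=1),
]
balancer = RoundRobinBalancer(backends)
picks = [balancer.pick().name for _ in range(4)]
print(picks)
print(pick_least_loaded(backends).name)
```

Round-robin is simple and works well when requests cost about the same; least-connections is one way to account for uneven request cost.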

- Database Scaling:

- Consider splitting databases to distribute the load. Sharding, where data is horizontally partitioned across multiple databases, can be effective.

- Using NoSQL databases can also be beneficial as many are designed for easy horizontal scaling (e.g., Cassandra, MongoDB).
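
To make sharding concrete, here is a minimal sketch of hash-based routing, one of several possible partitioning schemes. `ShardedStore` and `shard_for` are hypothetical names, and plain dictionaries stand in for separate database instances.

```python
import hashlib


def shard_for(key: str, shard_count: int) -> int:
    """Maps a key to a shard index using a hash that is stable across runs."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


class ShardedStore:
    """Routes each key to exactly one of several independent stores."""

    def __init__(self, shard_count: int) -> None:
        # Each dict stands in for a separate database.
        self.shards = [{} for _ in range(shard_count)]

    def put(self, key: str, value) -> None:
        self.shards[shard_for(key, len(self.shards))][key] = value

    def get(self, key: str):
        return self.shards[shard_for(key, len(self.shards))].get(key)


store = ShardedStore(shard_count=4)
for user_id in ("alice", "bob", "carol", "dave", "erin"):
    store.put(user_id, {"id": user_id})

print(store.get("carol"))
print([len(shard) for shard in store.shards])
```

One design caveat: with modulo hashing, changing the number of shards remaps most keys, which is why systems that expect to add shards often use consistent hashing or a lookup table instead.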

- Caching Mechanisms:

- Implement caching to reduce the load on the database. Caches can store frequently accessed data in memory for quick retrieval.

- Distributed cache systems like Redis or Memcached can scale horizontally and serve as a shared cache for multiple application servers.

- Queuing Systems for Asynchronous Processing:

- Use message queues like RabbitMQ or Kafka for asynchronous processing. This helps in decoupling components and managing the load effectively.

- Queuing allows parts of your application to process information independently, each at its own pace.
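
The decoupling described above can be sketched with Python's standard-library `queue.Queue` standing in for a broker such as RabbitMQ or Kafka. This is only an in-process illustration: `run_workers` is a hypothetical helper, and it assumes the handler does not raise. A real broker adds durability, acknowledgements, and delivery across machines.

```python
import queue
import threading


def run_workers(jobs, handler, worker_count=2):
    """Puts jobs on a queue and lets worker threads consume them independently."""
    task_queue = queue.Queue()
    results = []
    results_lock = threading.Lock()

    def worker():
        while True:
            job = task_queue.get()
            try:
                if job is None:  # Sentinel: no more work for this worker.
                    return
                outcome = handler(job)
                with results_lock:
                    results.append(outcome)
            finally:
                task_queue.task_done()

    threads = [threading.Thread(target=worker) for _ in range(worker_count)]
    for thread in threads:
        thread.start()

    # The producer only enqueues; it does not wait for each job to finish.
    for job in jobs:
        task_queue.put(job)
    for _ in threads:
        task_queue.put(None)

    task_queue.join()
    for thread in threads:
        thread.join()
    return results


squares = run_workers(range(5), lambda n: n * n, worker_count=3)
print(sorted(squares))
```

Results are sorted before printing because the order in which workers finish is not guaranteed. Scaling out here means raising `worker_count`; with a real broker it means starting more consumer processes on more machines.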

- Containerization and Orchestration:

- Containers (like Docker) encapsulate applications and their environments. This makes it easy to deploy and scale applications across different servers.

- Container orchestration tools like Kubernetes help manage and scale a large number of containers efficiently.
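
As one illustration of how an orchestrator can decide how many containers to run, the Kubernetes Horizontal Pod Autoscaler documentation describes the rule `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with no change when the ratio is within a tolerance (0.1 by default). The sketch below applies that rule in plain Python. The function name, the minimum and maximum bounds, and the sample numbers are assumptions for the example, and the real autoscaler includes behaviour (stabilization windows, multiple metrics, pod readiness handling) that is left out here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Simplified replica calculation based on the ratio of observed to target load."""
    ratio = current_metric / target_metric
    # Skip scaling when the observed value is already close to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    proposed = math.ceil(current_replicas * ratio)
    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, proposed))


# 4 replicas at 90% average CPU with a 60% target: scale out.
print(desired_replicas(4, current_metric=90, target_metric=60))
# 4 replicas at 20% average CPU with a 60% target: scale in.
print(desired_replicas(4, current_metric=20, target_metric=60))
```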

- Cloud-native Services:

- Cloud-native services such as AWS Lambda and Azure Functions are inherently designed to scale horizontally, so using them can also be beneficial.

- These services scale automatically with the load, and you pay only for the resources you use.

- CDN for Static Content:

- Use a Content Delivery Network (CDN) to serve static content. This reduces the load on your servers and also improves response times for users who are geographically distant from them.

The choice of architecture often depends on the specific requirements of the application, such as the need for real-time processing, data consistency requirements, budget constraints, and the existing technology stack.