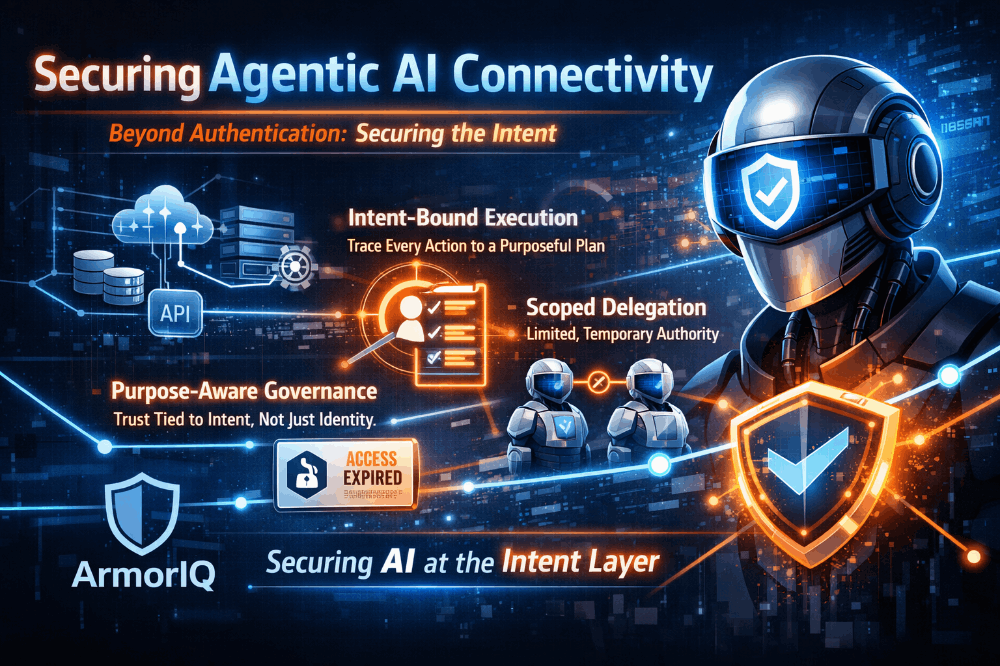

Securing Agentic AI Connectivity

And here’s the problem nobody has solved: identity and access controls tell you WHO is acting, but not WHY.

An AI agent can be fully authenticated, fully authorized, and still be completely misaligned with the intent that justified its access. That’s not a failure of your tools. That’s a gap in the entire security model.

This is the problem ArmorIQ was built to solve.

ArmorIQ secures agentic AI at the intent layer, where it actually matters:

- Intent-Bound Execution: Every agent action must trace back to an explicit, bounded plan. If the reasoning drifts, trust is revoked in real time.

- Scoped Delegation Controls: When agents delegate to other agents or invoke tools via MCP servers and APIs, authority is constrained and temporary. No inherited trust. No implicit permissions.

- Purpose-Aware Governance: Access isn’t just granted and forgotten. It expires when intent expires. Trust is situational, not permanent.
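To make the three ideas above concrete, here is a minimal Python sketch of a purpose-bound, expiring grant. It is an illustration only, not ArmorIQ's implementation or API: `IntentGrant`, `permits`, `delegate`, and the action names are all hypothetical, and time is passed in explicitly so the behavior is easy to follow.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IntentGrant:
    """Hypothetical grant tying authority to a stated purpose and a deadline."""
    purpose: str                 # the explicit plan that justifies access
    allowed_actions: frozenset   # actions that plan needs, and nothing else
    expires_at: float            # authority ends when the intent ends

    def permits(self, action: str, now: float) -> bool:
        # An action is allowed only if it is in the plan AND the grant is live.
        return now < self.expires_at and action in self.allowed_actions

    def delegate(self, actions, ttl: float, now: float) -> "IntentGrant":
        # A delegate receives only the intersection of what it asks for and
        # what the parent holds, and it can never outlive the parent grant.
        return IntentGrant(
            purpose=self.purpose,
            allowed_actions=self.allowed_actions & frozenset(actions),
            expires_at=min(self.expires_at, now + ttl),
        )


# Usage: a parent agent handling one refund delegates to a sub-agent.
parent = IntentGrant(
    purpose="refund order 1001",
    allowed_actions=frozenset({"read_order", "issue_refund"}),
    expires_at=100.0,
)
child = parent.delegate({"read_order", "delete_account"}, ttl=500.0, now=50.0)

print(parent.permits("issue_refund", now=50.0))   # in plan, still live
print(parent.permits("delete_account", now=50.0)) # outside the plan
print(child.permits("delete_account", now=60.0))  # never inherited
print(child.permits("read_order", now=100.0))     # expired with the parent
```

The design choice worth noticing is that delegation can only narrow authority: the sub-agent's request for `delete_account` is silently dropped because the parent never held it, and the requested 500-second lifetime is capped at the parent's own expiry.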

I’ve spent years advising on security strategy and watching the same pattern repeat: controls pass, but outcomes fail because nobody was governing purpose. ArmorIQ is addressing that gap head-on, and that’s why I’ve joined as an advisor.

If you’re a CISO, security architect, or board leader navigating agentic AI risk, this is worth your attention.

See what ArmorIQ is building: https://armoriq.io