Introducing Ligetron 1.0: Paving the Way for AI Verification and Ownership at Scale

NIM Network

We’re excited to present the initial component of the Nim AI framework, which offers economic ownership and verified access to AI models. Implementing AI computations on the blockchain is challenging due to high computational costs. Many opt for off-chain computation, which is easier to implement but requires different trust assumptions.

zkML, or ZK for AI inference, provides a solution, using zero-knowledge proofs (ZKPs) to protect confidential data and model parameters. This is especially important for the monetization of independent open-source AI. However, zkML is not yet feasible for large-scale AI applications due to high costs and hardware requirements.

Ligetron offers the most efficient ZK solution for AI verification, prioritizing cost-efficiency. We predict that ZK will be the primary factor influencing cost and overhead in every integrated crypto system for AI verification.

In a short period of 3 weeks, we created the world’s first proof for the Llama 2 7b model, one of the most popular open-source models. Ligetron 1.0 achieved a 1024x improvement in memory and a 66x improvement in speed performance compared to the previous best attempt by Modulus. The Ligero benchmark for complex AI computation has a 20,000x overhead. We estimate that mid-term optimizations could bring it down to as low as 20x.

The ZK space has offered two pathways: zkVM for general-purpose languages and zkML for heavy computational loads. Integrating the strengths of both seemed an impossible feat, until now. Ligetron provides a general-purpose zkVM that can run complex AI models and is also competitive in speed with much more resource-intensive zkVMs.

Ligetron 1.0 provides unique features:

- It has a memory-efficient ZK system that allows Ligero to take advantage of advanced prover hardware as well as run efficiently on low-cost commodity hardware. Thanks to its memory efficiency, Ligetron allows developers to program zero-knowledge applications in high-level languages such as C, C++, or Rust, even from their browser. You can try it out here.

- It is particularly powerful under heavy loads such as AI inference, delivering high performance at a low cost.

- Its general-purpose design results in low integration costs for running different AI models, a prerequisite for the ZK system to keep pace with new AI innovations.

Ligetron 1.0

Ligetron 1.0 is a memory-efficient ZK system based on the Ligero proofs (‘Lightweight Sublinear Arguments Without a Trusted Setup’). Created by the co-authors of Ligero, the Ligetron 1.0 ZKSNARK follows the Ligero blueprint for generating a ZKSNARK. Its main distinction is the memory-efficient design, which generates the constraints (linear and quadratic) on-the-fly from a WASM program.

Ligetron stands out as the first and only hash-based ZKSNARK that preserves memory efficiency, building on the strengths of the Ligero ZKSNARK proof system first presented at ACM CCS 2017. This system is a modern implementation of the MPC-in-the-head paradigm pioneered by Ishai, Kushilevitz, Ostrovsky, and Sahai back in 2007.

Ligetron’s versatile toolchain cross-compiles high-level languages to WASM, adapting any WASI-compatible language for use with Ligetron. The key is memory efficiency with built-in witness sharding (i.e., sharding without recursive composition). Ligetron’s memory usage is a constant multiple of the memory required by the underlying WASM program.

Thanks to this memory-efficient design, developers can program zero-knowledge applications in high-level languages such as C, C++, or Rust directly from their browsers. Visit ligetron.com to test it out.

Lack of a Solution for AI Verification at Scale in the ZK Space

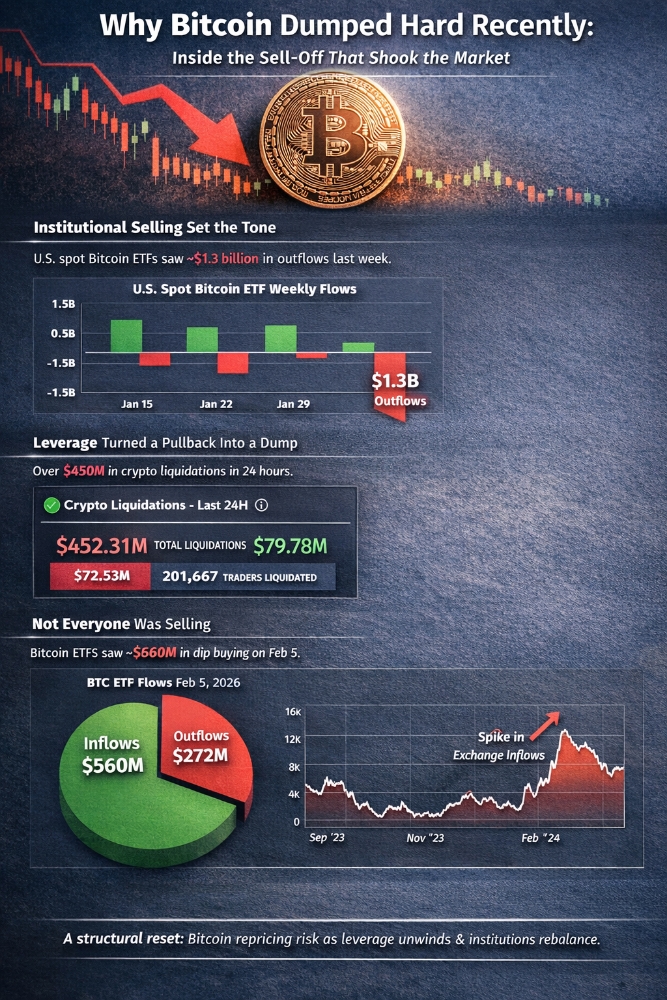

If a STARK system monopolized all computational usage of AWS EC2 globally in 2023, its capacity would still be under 10 inferences per second

To understand the challenges of deploying current state-of-the-art cryptographic proof systems at scale for the inference of complex AI models such as LLMs, a good starting point is to compare the resource demands of frameworks such as STARKs with the global computational load on some of the leading cloud providers. Specifically, AWS EC2, with a leading market share of 31% in Q4 2023, can be used as a proxy for the current scale of cloud operations globally. Based on the company’s published revenue and pricing information, and a breakdown by vertical (compute, storage, network, etc.), the average load in 2023 appears to be in the realm of 122 million vCPUs.

Based on current benchmarks for the STARK framework, the system appears to require 12.8 million vCPUs to maintain a throughput of 1 inference per second. If a STARK system were to monopolize all computational usage of AWS EC2 globally in 2023, its capacity would still be under 10 inferences per second, well below the scale towards which AI and LLM inference is expected to grow as adoption increases globally. It would also require a whopping 476 PB of memory, almost fifteen times that of the largest supercomputer, Fugaku, which currently stands at 32 PB.
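The capacity estimate is a matter of simple division. A minimal sketch that reproduces it, using only the figures quoted in this section (the variable names are ours, chosen for illustration):

```python
# Figures quoted in the text; names are illustrative, not from any library.
AWS_EC2_AVG_VCPUS = 122e6               # estimated average EC2 load in 2023
VCPUS_PER_INFERENCE_PER_SEC = 12.8e6    # vCPUs needed to sustain 1 inference/s
STARK_MEMORY_PB = 476                   # memory the STARK setup would need
FUGAKU_MEMORY_PB = 32                   # Fugaku memory as stated in the text

# Throughput if every EC2 vCPU were dedicated to proving.
max_inferences_per_sec = AWS_EC2_AVG_VCPUS / VCPUS_PER_INFERENCE_PER_SEC

# Memory requirement relative to Fugaku.
memory_ratio = STARK_MEMORY_PB / FUGAKU_MEMORY_PB

print(f"{max_inferences_per_sec:.2f} inferences/s")   # 9.53 inferences/s
print(f"{memory_ratio:.1f}x Fugaku memory")           # 14.9x Fugaku memory
```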

Comparison with STARK

Ligetron is 300 times cheaper for the same speed, even before any further optimizations.

STARKs are among the most competitive zero-knowledge provers in production and are used to scale Ethereum via rollups. They showcase 1,000–2,000 TPS by employing around 200 CPUs with roughly 0.5 TB of RAM. They achieve this through continuations and recursive composition. Based on an estimated 1–2 million transactions that are rolled up in 15–30 minutes, it is conceivable that the computation size proved in a single STARK proof is equivalent to a single inference of the Llama 7B model as in our benchmark. However, the expensive hardware required to generate such a proof would put the cost of proving this inference at $300. Our current benchmark was run on an r7i.4xlarge, which would cost less than $15 for 14 hours. In an independent benchmark, Ligetron achieved around 500 TPS throughput for the rollup circuit when running on a single g5.xlarge. The rollup circuit is a structured computation, whereas our benchmark treated the computation as an unstructured WASM computation.

A conservative estimate suggests that, when moving from the unstructured to the structured variant, at most 4 g5.xlarge instances would be required to complete the proof of a single inference in less than 15 minutes, at a cost of no more than one dollar.
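The cost figures in this comparison can be lined up as follows. This is a rough sketch that uses only the dollar amounts and durations quoted above; the implied hourly rates are derived from those upper bounds and are not official AWS prices.

```python
# Dollar figures and durations quoted in the text; names are illustrative.
STARK_COST_USD = 300.0            # estimated cost of one STARK-proved inference
UNSTRUCTURED_COST_USD = 15.0      # upper bound: one r7i.4xlarge for 14 hours
UNSTRUCTURED_HOURS = 14
STRUCTURED_COST_USD = 1.0         # upper bound: 4 g5.xlarge for under 15 minutes
STRUCTURED_INSTANCES = 4
STRUCTURED_HOURS = 0.25

# Cost advantage over the STARK estimate for each Ligetron variant.
unstructured_advantage = STARK_COST_USD / UNSTRUCTURED_COST_USD   # 20x
structured_advantage = STARK_COST_USD / STRUCTURED_COST_USD       # 300x

# Hourly rates implied by the stated bounds (not official pricing).
implied_r7i_hourly = UNSTRUCTURED_COST_USD / UNSTRUCTURED_HOURS
implied_g5_hourly = STRUCTURED_COST_USD / (STRUCTURED_INSTANCES * STRUCTURED_HOURS)

print(f"unstructured: {unstructured_advantage:.0f}x cheaper")
print(f"structured:   {structured_advantage:.0f}x cheaper")
print(f"implied rates: r7i.4xlarge <= ${implied_r7i_hourly:.2f}/h, "
      f"g5.xlarge <= ${implied_g5_hourly:.2f}/h")
```

The 300x headline figure corresponds to the structured variant at comparable proving time; the current unstructured benchmark is about 20x cheaper but takes 14 hours.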

Methodology of Our Benchmark Testing

Ligetron 1.0 achieved the best benchmark for model proving using a baseline version that required only three weeks of preparation, with no special gadgets or custom circuits. We simply compiled llama2.c into WASM and ran it on commodity hardware: an r7i.4xlarge (8 cores), with a peak memory usage of 10 GB.

Our benchmark used llama2.c (a Llama-2 inference engine written in pure C) to generate one token using the quantized Llama2 model. The executed WASM code had 440 million floating point (fp32) instructions and 3 billion integer (int32/int64) instructions. When compiled, it generated 128 billion linear constraints and 80 billion quadratic constraints over a 100-bit ring (product of two 50-bit primes). It took approximately 14 hours (50,000 seconds) to run the prover and about 6.5 hours (22,000 seconds) to run the verification. The proof length and peak memory of the prover were about 10 GB.

The C code was primarily adjusted to convert some of the 64-bit floating point operations to 32-bit floating point operations, and WASM was modified to circumvent the 4 GB memory limitation in order to run the quantized Llama 7B model.
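To put these numbers in perspective, the sketch below derives a few secondary quantities from them: constraints per executed WASM instruction and constraints processed per second. It uses only the figures quoted above; the derived metrics are our own illustration, not numbers reported by the benchmark.

```python
# Benchmark figures quoted in the text; names are illustrative.
FP32_INSTRUCTIONS = 440e6
INT_INSTRUCTIONS = 3e9
LINEAR_CONSTRAINTS = 128e9
QUADRATIC_CONSTRAINTS = 80e9
PROVER_SECONDS = 50_000
VERIFIER_SECONDS = 22_000

total_instructions = FP32_INSTRUCTIONS + INT_INSTRUCTIONS        # 3.44e9
total_constraints = LINEAR_CONSTRAINTS + QUADRATIC_CONSTRAINTS   # 208e9

# Average number of constraints generated per executed WASM instruction.
constraints_per_instruction = total_constraints / total_instructions

# Constraint throughput of the prover and the verifier.
prover_constraints_per_sec = total_constraints / PROVER_SECONDS
verifier_constraints_per_sec = total_constraints / VERIFIER_SECONDS

# Wall-clock times converted from the quoted second counts.
prover_hours = PROVER_SECONDS / 3600
verifier_hours = VERIFIER_SECONDS / 3600

print(f"{constraints_per_instruction:.1f} constraints per instruction")
print(f"prover:   {prover_constraints_per_sec:.2e} constraints/s, {prover_hours:.1f} h")
print(f"verifier: {verifier_constraints_per_sec:.2e} constraints/s, {verifier_hours:.1f} h")
```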

Comparing Ligetron for AI with Similar Attempts

We ran the Llama 7B model on an r7i.4xlarge (8 cores) with a peak memory usage of 10GB in 14 hours. In contrast, Modulus Labs ran the GPT-XL 1.5B model on a 128-core, 1TB RAM (with a 10TB disk) setup in over 200 hours. Consequently, we achieved a 14x speedup on a 4.6x larger model, using 4x fewer cores and leaving a 100x smaller memory/file footprint.
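The ratios in this comparison can be recomputed from the quoted figures. The sketch below does so under two assumptions of ours: the Modulus run is taken as exactly 200 hours (the text says "over 200", so the speedups are lower bounds), and TB are converted to GB in binary units.

```python
# Figures quoted in the comparison; names are illustrative.
LIGETRON_HOURS = 14
MODULUS_HOURS = 200              # "over 200 hours": treated as a lower bound
LIGETRON_PARAMS_B = 7.0          # Llama 7B
MODULUS_PARAMS_B = 1.5           # GPT-XL 1.5B
LIGETRON_PEAK_MEMORY_GB = 10
MODULUS_RAM_GB = 1 * 1024        # 1 TB RAM, assuming binary units
MODULUS_DISK_GB = 10 * 1024      # 10 TB disk, assuming binary units

time_speedup = MODULUS_HOURS / LIGETRON_HOURS                 # about 14x
model_size_ratio = LIGETRON_PARAMS_B / MODULUS_PARAMS_B       # about 4.7x
size_adjusted_speedup = time_speedup * model_size_ratio       # about 67x
ram_ratio = MODULUS_RAM_GB / LIGETRON_PEAK_MEMORY_GB          # about 100x
disk_ratio = MODULUS_DISK_GB / LIGETRON_PEAK_MEMORY_GB        # 1024x

print(f"time speedup:          {time_speedup:.1f}x")
print(f"model size ratio:      {model_size_ratio:.2f}x")
print(f"size-adjusted speedup: {size_adjusted_speedup:.1f}x")
print(f"RAM ratio:             {ram_ratio:.1f}x")
print(f"disk/memory ratio:     {disk_ratio:.0f}x")
```

Under these assumptions, the size-adjusted speedup and the disk-to-memory ratio land close to the 66x speed and 1024x memory improvements cited in the introduction, which suggests this is how those headline figures were obtained.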

We are making comparisons based on the data provided by Modulus here.

Another notable example is EZKL’s attempt to incorporate nanoGPT into Halo2-KZG circuits. You can read more about it on their blog. Using an AMD EPYC 7451 24-Core Processor with 256 GB RAM, it took approximately 2,000 seconds to prove a model with 1 million parameters. Ligetron, on the other hand, took around 50,000 seconds to prove a model that was 7,000 times larger. This indicates roughly 280 times better performance from Ligetron, despite its lower hardware requirements.
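The 280x figure can be reproduced as the ratio of model sizes divided by the ratio of proving times. A minimal sketch using the numbers quoted above, with proving time per parameter as a simple normalization of our own choosing:

```python
# Figures quoted in the text; names are illustrative.
EZKL_SECONDS = 2_000
EZKL_PARAMS = 1e6            # nanoGPT model with 1 million parameters
LIGETRON_SECONDS = 50_000
LIGETRON_PARAMS = 7e9        # Llama 7B, i.e. 7,000x more parameters

model_size_ratio = LIGETRON_PARAMS / EZKL_PARAMS     # 7000x larger model
time_ratio = LIGETRON_SECONDS / EZKL_SECONDS         # 25x longer run

# How much less proving time Ligetron spends per parameter.
per_parameter_advantage = model_size_ratio / time_ratio

print(f"{per_parameter_advantage:.0f}x")   # 280x
```

This normalization assumes proving cost grows linearly with parameter count, which is a simplification.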

Looking Ahead: A Thousandfold Improvement

Currently, Ligetron has up to 20,000x overhead, and we estimate that a 1,000x improvement is feasible, resulting in a remarkable 20x overhead for AI computation. This would be lower than the 180x overhead recently claimed by Modulus Labs for the GKR prover, while providing a general solution with a low integration cost for different types of AI models.

In the coming months, we plan to extensively optimize Ligetron for AI verification. Our roadmap anticipates a 1,000x improvement in Ligetron’s capabilities. This includes a 10x gain from the integration of lookup arguments and an additional 10x gain from the use of GPU power. We also expect a further 10x improvement by exploiting the fact that matrix multiplication is a highly structured circuit. As demonstrated in the benchmarks in the paper published by the Ligero team, there’s a 10x speedup between random and structured circuits (referred to as batched and non-batched in the paper). Together, these could result in a 1,000x speedup. After these optimizations, we expect another leap in performance from the Ligero team’s latest research, which optimizes the handling of operations in zkVMs. This research was honored with the Distinguished Paper Award at ACM CCS 2023.
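The roadmap arithmetic can be written out explicitly. The sketch below assumes the three 10x gains are independent and multiply cleanly, which is the best case; the labels are ours.

```python
import math

# Figures quoted in the text; labels are illustrative.
CURRENT_OVERHEAD = 20_000
MODULUS_GKR_OVERHEAD = 180   # overhead claimed by Modulus Labs for GKR
PLANNED_GAINS = {
    "lookup arguments": 10,
    "GPU acceleration": 10,
    "structured matrix-multiplication circuits": 10,
}

# Assumes the gains compose multiplicatively (best case).
total_gain = math.prod(PLANNED_GAINS.values())
projected_overhead = CURRENT_OVERHEAD / total_gain

print(f"total gain: {total_gain}x")                     # total gain: 1000x
print(f"projected overhead: {projected_overhead:.0f}x")  # projected overhead: 20x
print(f"below GKR overhead: {projected_overhead < MODULUS_GKR_OVERHEAD}")  # True
```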

Bringing Ligero Proofs to the Chain

It’s important to note that Ligero proofs currently cannot be verified on-chain. To bring Ligero proofs on-chain, a couple of extensions are required. First, a switch to the pre-processing variant of Ligero is needed. Second, Ligero proofs need to be optimized to compose with a fully succinct SNARK such as Groth16/Sonic. Estimates indicate that this composition could potentially double the total time.

Ligero proof lengths scale with the square root of the computation size, while STARK proofs scale polylogarithmically. In practice, however, Ligero proofs are smaller than STARK proofs for medium-scale computations and remain competitive for large computations (see misconceptions-about-snark). Since we will compose with a regular SNARK, the verification circuit size will matter more than the proof length, and that is what we plan to optimize.

Comparison with zkVMs

We’ve seen two major development pathways in the ZK space: zkVM and zkML. The former focuses on general-purpose languages, while the latter optimizes for massive computational loads. Integrating the strengths of both seemed an impossible feat — until Ligetron. With this new zkWASM technology, Ligetron effortlessly handles massive AI computations, promising a new era of efficiency and speed.

No specialized circuits or gadgets are needed to run our benchmark. Ligero can prove any WASM program (with oblivious control flow). The benchmark required incorporating floating point operations (via a naive implementation) and took about 3 weeks to develop. We compiled the Llama2.c program to WASM and benchmarked Ligero’s performance. The table below shows that Ligetron 1.0 is competitive as a zkVM while handling very large programs on commodity hardware.

The next steps for Nim involve moving swiftly towards actual use-cases and applications that leverage the ability to verify AI computations. This will contribute to the creation of a dynamic network connecting model creators, applications, owners, and users. Our efforts will be focused on enhancing the functionality and efficiency of the Ligetron system, and further exploring its potential applications in gaming and consumer applications.