The Future of Language Models: How a 1 Million Token Memory Could Revolutionize AI

As AI technology evolves, language models are becoming more capable. GPT-3, for example, was trained on massive amounts of data and can generate fluent, coherent text. Now imagine a language model that could remember entire conversations or texts, rather than just a few sentences or paragraphs.

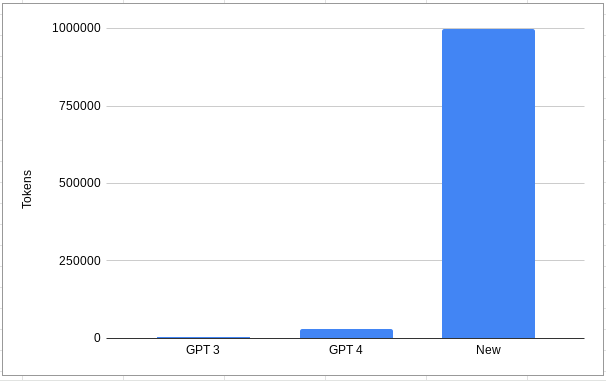

A recent paper explored the potential of models that can remember 1 million tokens, compared to GPT-3.5’s 4096 tokens and GPT-4’s 32k tokens. The paper discusses the use of recurrent memory to extend the context length of BERT, one of the most effective Transformer-based models in natural language processing. With the ability to remember 1 million tokens, the range of possible applications is vast and could change the landscape of AI applications. In this blog post, we will explore some unique applications of this technology.

A language model that can remember 1 million tokens could be a game-changer in the world of AI. With this capability, such a model could provide personalized recommendations, assist in medical diagnosis, aid in legal research, and even provide mental health counseling.

Why token context matters

The number of tokens a language model can hold in its memory and conversation history is crucial for maintaining context during a conversation. It is similar to chatting with a friend who can remember only the last few minutes of the conversation. Because of this limited memory, you may need to repeat important information to maintain context. The context window of the language model starts from the current prompt and goes back in history until the token limit is reached. If the conversation length exceeds the token limit, the context window shifts, potentially losing important content from earlier in the conversation.
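The shifting window described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `count_tokens` and `build_context` are hypothetical helpers, and counting whitespace-separated words is a crude stand-in for a real subword tokenizer.

```python
def count_tokens(text: str) -> int:
    # Assumption: one token per whitespace-separated word.
    # Real models use subword tokenizers, so actual counts differ.
    return len(text.split())


def build_context(history: list[str], prompt: str, max_tokens: int) -> list[str]:
    """Keep the prompt plus the most recent messages that fit in max_tokens."""
    budget = max_tokens - count_tokens(prompt)
    kept: list[str] = []
    # Walk backwards from the newest message towards the oldest.
    for message in reversed(history):
        cost = count_tokens(message)
        if cost > budget:
            break  # this message and everything older falls out of the window
        kept.append(message)
        budget -= cost
    kept.reverse()  # restore chronological order
    return kept + [prompt]


history = [
    "My name is Ada and I am allergic to peanuts.",
    "I am planning a dinner for six people.",
    "We would like something vegetarian.",
]
prompt = "Can you suggest a menu?"

context = build_context(history, prompt, max_tokens=20)
for line in context:
    print(line)
```

With a budget of 20 tokens, the oldest message (the one mentioning the peanut allergy) no longer fits, so the model would answer without it. This is the kind of lost context a larger window is meant to avoid.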

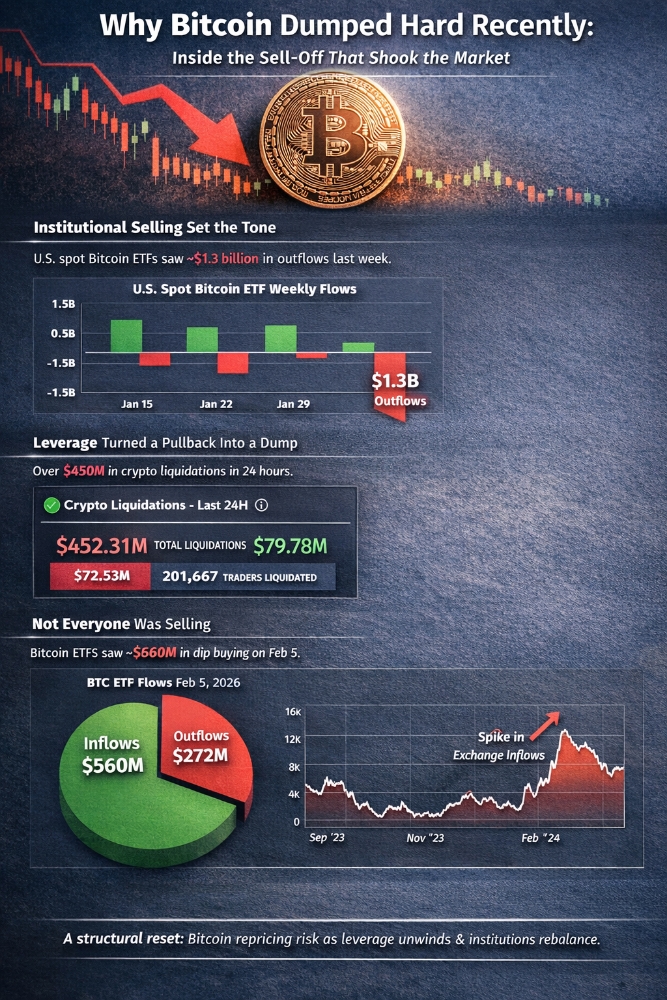

According to OpenAI, 100 tokens is roughly equivalent to 75 words. With 4096 tokens (for GPT-3.5) and 32000 tokens (for GPT-4), the corresponding word limits are 3072 and 24000 words respectively. Assuming approximately 500 words per single-spaced page, 3072 words fill about 6.1 pages and 24000 words fill 48 pages. This means that GPT-3.5 and GPT-4 can remember the context for roughly the past 6 and 48 pages of content, respectively. A language model with 1 million tokens would hold about 750000 words, or approximately 1500 pages of memory, which is comparable to reading 20 short novels, 1000 legal case briefs, or many patients’ entire health histories while retaining all the information. Such a large memory capacity can benefit a variety of applications.

Image created using Google Sheet
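The arithmetic above can be reproduced with a short script. It is a rough sketch that relies on the same two assumptions as the text: OpenAI’s rule of thumb of 75 words per 100 tokens, and about 500 words per single-spaced page. The function names are illustrative only.

```python
WORDS_PER_TOKEN = 0.75  # rule of thumb: 100 tokens is roughly 75 words
WORDS_PER_PAGE = 500    # assumption: one single-spaced page


def tokens_to_words(tokens: int) -> float:
    """Approximate number of words that fit in a given token budget."""
    return tokens * WORDS_PER_TOKEN


def tokens_to_pages(tokens: int) -> float:
    """Approximate number of pages that fit in a given token budget."""
    return tokens_to_words(tokens) / WORDS_PER_PAGE


models = [
    ("GPT-3.5", 4096),
    ("GPT-4", 32000),
    ("1M-token model", 1_000_000),
]

for name, tokens in models:
    words = tokens_to_words(tokens)
    pages = tokens_to_pages(tokens)
    print(f"{name}: {tokens} tokens, about {words:.0f} words, about {pages:.1f} pages")
```

Both constants are approximations, so the page counts should be read as orders of magnitude rather than exact limits.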

Let’s explore some of these potential applications in more detail.

1: Personalized Recommendations

A language model capable of remembering 1 million tokens can analyze users’ past interactions and preferences to provide highly tailored recommendations for products, services, or entertainment. This level of personalization could transform the way we discover new things and help us find the perfect product or service to suit our needs.

2: Medical Diagnosis Assistance

A language model with a 1-million-token memory can analyze a patient’s entire history and symptoms, assisting doctors in identifying potential conditions or diseases. This could lead to faster and more accurate diagnoses, ultimately improving patient care and outcomes.

3: Legal Research Aid

By analyzing a large body of case law and identifying relevant precedents, a language model with the capacity to remember 1 million tokens can help lawyers build stronger cases and achieve better outcomes for their clients. This powerful tool could streamline the legal research process and enhance the practice of law.

4: Mental Health Counseling

A language model capable of remembering 1 million tokens can analyze all of a user’s previous conversations and interactions to provide personalized support and guidance. This could revolutionize mental health counseling by offering customized assistance, helping individuals overcome challenges and achieve their goals.

5: Creative Writing

A language model that can remember 1 million tokens could be used to assist in creative writing projects, such as writing novels or screenplays. By remembering previous dialogue and character interactions, the model could help writers maintain consistency in their storytelling and character development.

These are just a few examples of the potential applications of a language model that can remember 1 million tokens. The possibilities are endless, and such a model could revolutionize the way we interact with and utilize AI technology in various industries.

Conclusion:

The potential applications of a language model that can remember 1 million tokens are vast and transformative. However, it’s essential to address potential risks and challenges, such as privacy and data security, while ensuring safeguards are in place to prevent misuse of the technology.

Despite these challenges, the promise of a language model with a 1-million-token memory is considerable. From personalized recommendations to improved medical diagnoses and better legal outcomes, the future of AI technology is exciting as we continue to unlock its potential.
