Discover the Power of LangGraph: My Adventure in Building GPT-Newspaper

A few months ago, I embarked on a thrilling journey to create my second significant open-source project: GPT-Newspaper. As it rippled through the AI community, I found myself reflecting on my guiding principle for building AI agents — emulating human methods to solve tasks.

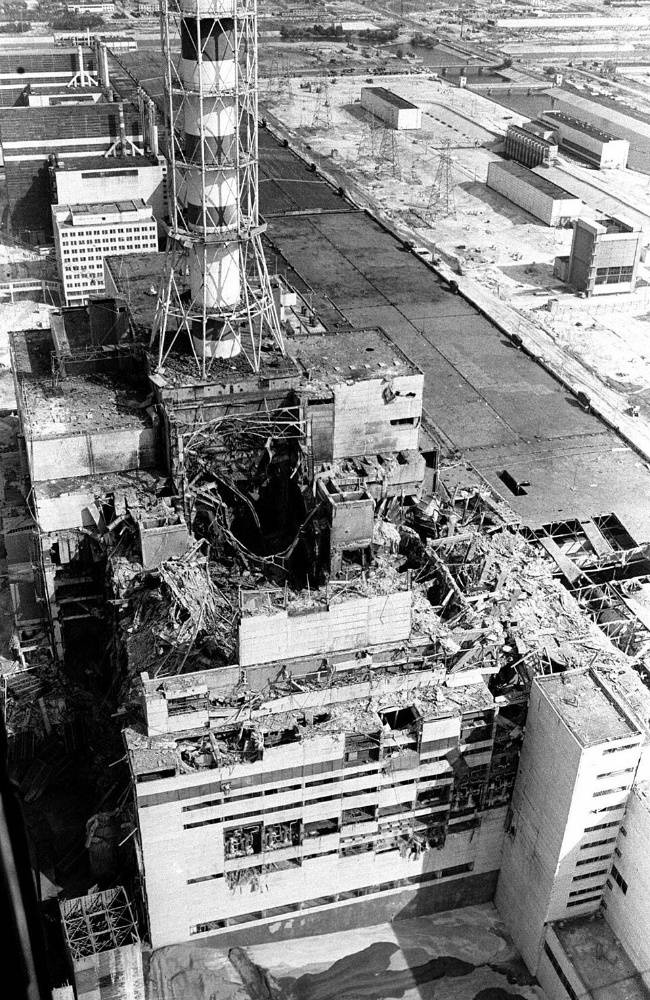

The Birth of an AI-Powered Publishing House

The concept behind GPT-Newspaper was both simple and mighty: enhancing large language models’ capabilities by assembling a team of AI agents, each contributing towards a shared aim.

Picture a traditional publishing house, where each role is vital and every responsibility crucial. In a similar vein, every agent in my model played their part, leading to a final product that was the fruit of their collective efforts.

Building GPT-Newspaper: Meet the Specialized Sub-Agents

GPT-Newspaper hinges on seven specialized sub-agents, each an essential cog in the project:

- The Search Agent: Our scout, scouring the internet for the latest and most pertinent news.

- The Curator Agent: The discerning connoisseur, filtering and selecting news based on the user’s preferences and interests.

- The Writer Agent: The team’s wordsmith, crafting engaging, reader-friendly articles.

- The Critique Agent: The provider of constructive feedback, ensuring the article’s quality before it gets the green light.

- The Designer Agent: Our artist, arranging and designing the articles for a visually delightful reading experience.

- The Editor Agent: The conductor, constructing the newspaper based on the crafted articles.

- The Publisher Agent: The final touch, publishing the finished product to the frontend or the desired service.

Each agent plays an essential role, culminating in a unique and personalized newspaper experience for the reader.

High-Level Overview of GPT-Newspaper’s Architecture

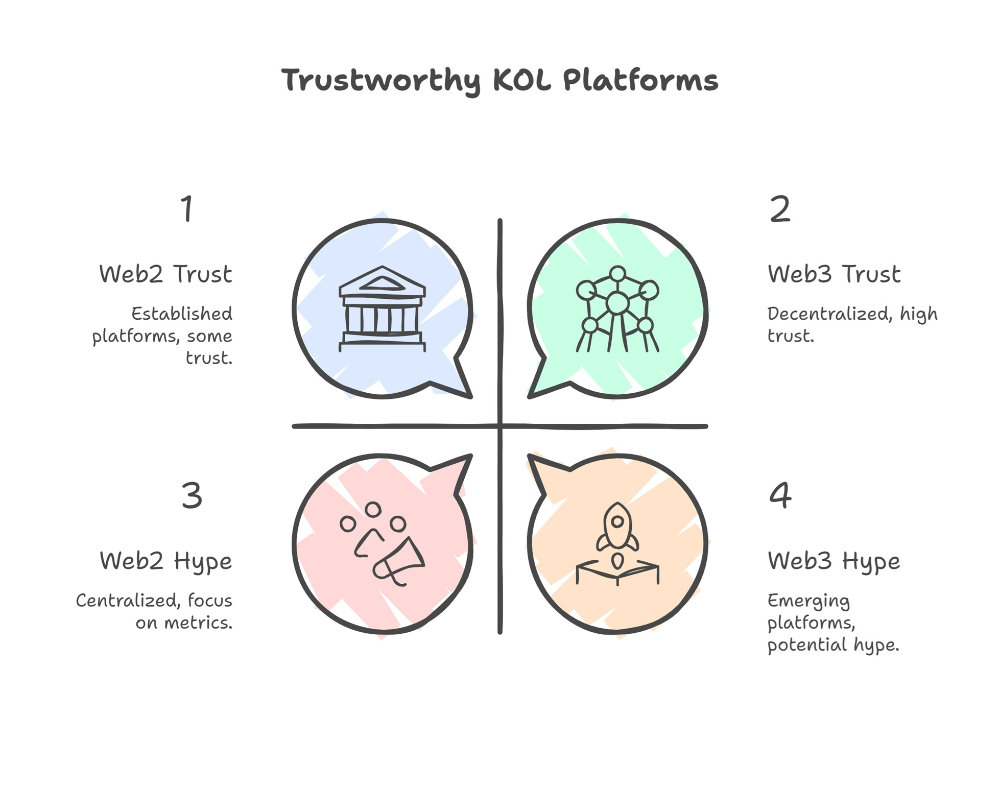

LangGraph: A Game-Changer for Cyclic Operations in LLM Applications

LangGraph, part of the LangChain toolkit, is a library for building stateful, multi-actor applications with LLMs. It extends the LangChain Expression Language with the ability to coordinate multiple actors across multiple steps of computation in a cyclic manner, drawing inspiration from systems like Pregel and Apache Beam.

For me, the beauty of LangGraph lies in its ability to integrate cycles into your LLM applications. This contrasts with other platforms that only optimize for Directed Acyclic Graph (DAG) workflows. LangGraph dares to break the mold. If your sole aim is to build a DAG, the LangChain Expression Language alone would suffice.

The introduction of cycles plays a crucial part in fostering agent-like behaviors. In this setting, an LLM is invoked in a loop, with each iteration prompting the LLM to determine the next action. This creates a dynamic and interactive event sequence, highlighting LangGraph’s potential in crafting intricate multi-agent systems like GPT-Newspaper.
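To make the loop concrete, here is a minimal plain-Python sketch. It is not LangGraph code: `fake_llm_decide` is a hypothetical stand-in for an LLM call, and the action names `gather` and `finish` are invented for illustration. The only point is that the model's answer on each iteration decides whether another iteration happens.

```python
from typing import Callable


def fake_llm_decide(state: dict) -> str:
    # Hypothetical stand-in for an LLM call: inspect the state and pick
    # the next action. A real agent would prompt a model here.
    return "finish" if len(state["notes"]) >= 3 else "gather"


def gather(state: dict) -> dict:
    # Pretend to collect one more piece of information.
    notes = state["notes"] + [f"note {len(state['notes']) + 1}"]
    return {**state, "notes": notes}


def run_agent_loop(state: dict,
                   decide: Callable[[dict], str],
                   max_steps: int = 10) -> dict:
    """Call `decide` repeatedly; each answer selects the next step."""
    for _ in range(max_steps):  # hard cap so a bad policy cannot loop forever
        if decide(state) == "finish":
            break
        state = gather(state)
    return state


result = run_agent_loop({"notes": []}, fake_llm_decide)
print(result["notes"])
```

A DAG cannot express this, because the number of passes through `gather` is not known until the decisions are made at run time.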

Look at the simplicity of setting up our agents using LangGraph:

```python
import os
import time
from concurrent.futures import ThreadPoolExecutor

from langgraph.graph import Graph

# Import agent classes
from .agents import (SearchAgent, CuratorAgent, WriterAgent, DesignerAgent,
                     EditorAgent, PublisherAgent, CritiqueAgent)


class MasterAgent:
    def __init__(self):
        # Each run writes to its own timestamped output directory
        self.output_dir = f"outputs/run_{int(time.time())}"
        os.makedirs(self.output_dir, exist_ok=True)

    def run(self, queries: list, layout: str):
        # Initialize agents
        search_agent = SearchAgent()
        curator_agent = CuratorAgent()
        writer_agent = WriterAgent()
        critique_agent = CritiqueAgent()
        designer_agent = DesignerAgent(self.output_dir)
        editor_agent = EditorAgent(layout)
        publisher_agent = PublisherAgent(self.output_dir)

        # Define a LangGraph graph
        workflow = Graph()

        # Add nodes for each agent
        workflow.add_node("search", search_agent.run)
        workflow.add_node("curate", curator_agent.run)
        workflow.add_node("write", writer_agent.run)
        workflow.add_node("critique", critique_agent.run)
        workflow.add_node("design", designer_agent.run)

        # Set up edges
        workflow.add_edge('search', 'curate')
        workflow.add_edge('curate', 'write')
        workflow.add_edge('write', 'critique')

        # Loop back to the writer until the critique is empty (None).
        # Keyword names are those of the original code; they may differ
        # in other LangGraph releases.
        workflow.add_conditional_edges(start_key='critique',
                                       condition=lambda x: "accept" if x['critique'] is None else "revise",
                                       conditional_edge_mapping={"accept": "design", "revise": "write"})

        # Set up start and end nodes
        workflow.set_entry_point("search")
        workflow.set_finish_point("design")

        # Compile the graph
        chain = workflow.compile()

        # Execute the graph for each query in parallel
        with ThreadPoolExecutor() as executor:
            articles = list(executor.map(lambda q: chain.invoke({"query": q}), queries))

        # Compile the final newspaper
        newspaper_html = editor_agent.run(articles)
        newspaper_path = publisher_agent.run(newspaper_html)

        return newspaper_path
```

The Power of Cycles in Action within GPT-Newspaper

Within GPT-Newspaper, the cycle between the Writer Agent and the Critique Agent is a repeated process of article creation and review. The Writer Agent first crafts an article, which the Critique Agent then reviews. If the article needs improvement, the Critique Agent returns feedback and the graph routes back to the Writer Agent for a revision. The cycle continues until the Critique Agent has nothing more to add, which the graph detects as an empty critique, and only then does the article move on to the Designer Agent. Each pass through the loop gives the article another chance to improve before it is published.
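The write-and-review cycle can be simulated in plain Python without LangGraph or an LLM. This is only a sketch under stated assumptions: the `write` and `critique` stubs, the `article` and `revision` state keys, and the `MAX_REVISIONS` cap are all hypothetical and not taken from the project. Only the routing rule, accept when `critique` is `None` and revise otherwise, mirrors the condition in the graph.

```python
from typing import Optional

MAX_REVISIONS = 3  # assumption: a safety cap the article does not mention


def write(state: dict) -> dict:
    # Stub writer: produce a draft, folding in feedback when there is any.
    revision = state.get("revision", 0) + 1
    draft = f"Draft {revision} about {state['query']}"
    if state.get("critique"):
        draft += f" (addressed: {state['critique']})"
    return {**state, "article": draft, "revision": revision}


def critique(state: dict) -> dict:
    # Stub critic: ask for one revision, then approve.
    # Setting "critique" to None is the approval signal read by `route`.
    feedback: Optional[str] = None
    if state["revision"] < 2:
        feedback = "add sources"
    return {**state, "critique": feedback}


def route(state: dict) -> str:
    # Same rule as the conditional edge: None means accept.
    return "accept" if state["critique"] is None else "revise"


def write_until_accepted(query: str) -> dict:
    state = write({"query": query})
    for _ in range(MAX_REVISIONS):
        state = critique(state)
        if route(state) == "accept":
            break
        state = write(state)  # revise using the feedback now in the state
    return state


final = write_until_accepted("AI agents")
print(final["article"])
```

The cap is a design choice worth considering in any such loop: without it, a critic that never approves would keep the writer revising indefinitely.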

Getting Started with GPT-Newspaper

In this section, we will walk you through the steps to download and start using GPT-Newspaper.

Prerequisites

Before you begin, you will need the following API keys:

- Tavily API key

- OpenAI API key

Installation

- Clone the repository:

git clone https://github.com/rotemweiss57/gpt-newspaper.git

- Export your API Keys:

export TAVILY_API_KEY=<YOUR_TAVILY_API_KEY>

export OPENAI_API_KEY=<YOUR_OPENAI_API_KEY>

- Install Requirements:

pip install -r requirements.txt

Running the App

- Run the app:

python app.py

- Open the app in your browser by navigating to the following URL:

<http://localhost:5000/>

That’s it! You have now successfully set up and started GPT-Newspaper on your local machine.
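One thing worth checking before launching the app is that both keys are actually exported in the current shell. The following preflight check is a small sketch and not part of the repository; the `missing_keys` helper is a hypothetical name, and only the two variable names come from the export step.

```python
import os
from typing import Mapping

# The two variables exported during installation.
REQUIRED_KEYS = ("TAVILY_API_KEY", "OPENAI_API_KEY")


def missing_keys(env: Mapping[str, str]) -> list:
    """Return the required key names that are unset or empty in `env`."""
    return [name for name in REQUIRED_KEYS if not env.get(name)]


missing = missing_keys(os.environ)
if missing:
    print("Missing environment variables:", ", ".join(missing))
else:
    print("All required API keys are set.")
```

Running it in the same terminal session as `python app.py` matters, because exported variables do not carry over to a new shell.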

Looking Ahead: The Future of GPT-Newspaper and LangGraph

As I refine and expand GPT-Newspaper, I’m enthusiastic about LangGraph’s potential. Its capacity to handle cyclic operations and progressively improve outputs could revolutionize other applications, extending the limits of what language models can accomplish. Agents can communicate!

In my view, LangGraph is laying the groundwork for a new era of AI. This era will see complex tasks being addressed by a coordinated ensemble of specialized agents. The exciting journey of GPT-Newspaper is merely a hint of what’s to come, and I’m eager to delve into the future possibilities.