AI used not just for good

Good morning/evening

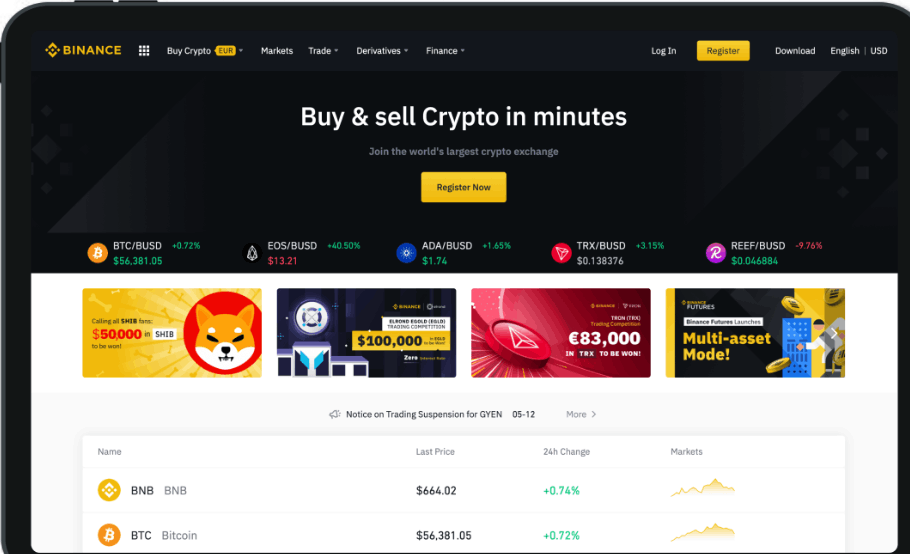

For years, crypto repeated the same mantra like a spell: not your keys, not your coins. It was simple: self-custody was sold as freedom. In reality, it became a high-stakes puzzle where one typo, one blind signature, or one moment of panic could wipe out years of savings. Still, the community doubled down. Responsibility was the price of sovereignty. If you failed, that was the lesson.

Then AI showed up, and suddenly self-custody isn’t just about discipline anymore.

While AI has the potential to make wallets safer, clearer, and more human-friendly, it’s also making scams far more dangerous than they’ve ever been, because it makes them more convincing. Remember the Michael Saylor ads that used to interrupt you on YouTube? They were out of sync and quite obvious. Well, those sorts of scams are getting better now with the help of AI, and we need to get better at spotting them.

Human Error

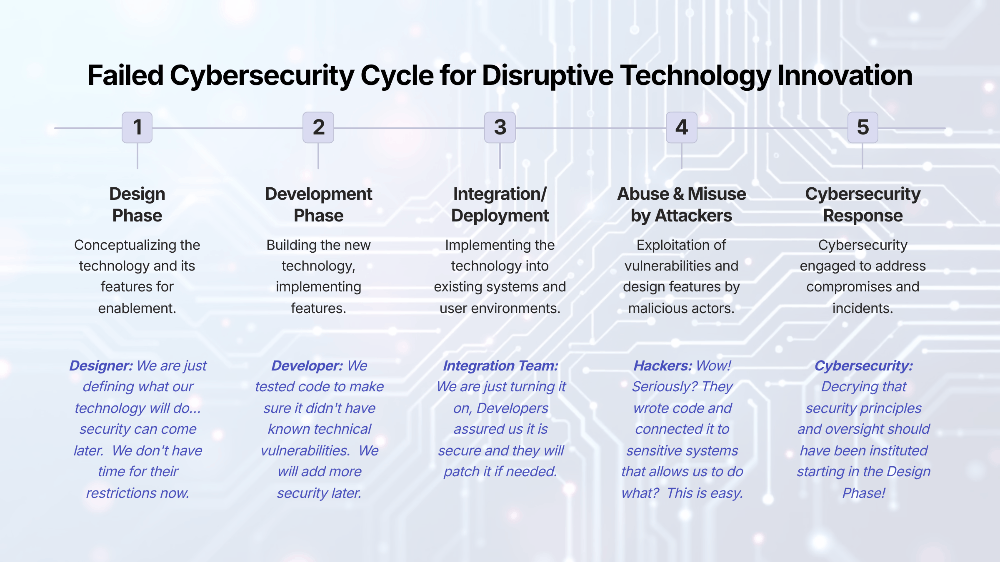

Before AI, most crypto losses came down to very human mistakes.

- Sending funds to the wrong address

- Signing transactions you didn’t fully understand

- Falling for low effort phishing

- Losing seed phrases forever

- Clicking on a bad link or advert

The risks were obvious, even if the consequences were brutal. Some scams looked like scams, and some of the fake links were sloppy. Messages looked like they were written by a three-year-old, with spelling mistakes and bad punctuation. You could at least tell yourself, 'It's so bad I would never fall for that.' Yet even the most diligent person can have a moment of absentmindedness: you are tired, you don't check the whole wallet address, or you scribble your seed phrase down in a hurry on a scrap of paper. These are all things that are easily done.

The personal angle

The most dangerous thing about AI in crypto isn’t that it’s smart; it’s that it’s getting personal.

Modern AI-driven scams aren’t blasting the same message to a million wallets. They’re watching behavior. They know when you interact with a new protocol, bridge funds, mint an NFT, or panic during volatility. They can time an attack precisely when you’re most vulnerable. A typical attack can play out like this:

- You interact with a new DeFi app

- Minutes later, you receive a message from “support”

- The language matches the project’s tone perfectly

- The instructions are clear, calm, and urgent

- The solution requires 'just one signature'

Nothing feels off. AI-generated phishing messages no longer contain red flags. They contain empathy, context, and even confidence. And unlike human scammers, they never get tired, never miss timing, and never stop iterating. The scams look more legitimate, with better language. Those of us who have been in crypto for a long time are well aware that there is 'no support', but just imagine you are new to the space and you think 'support' is helping you!

AI: a tool, a threat, or both?

Don't get me wrong, I understand that AI is going to be huge and will do some good. I do not use AI to its full potential at all. I use it for thumbnails, and I have put a complex white paper into an AI to get a simpler, easier-to-understand version, but it can be used for so much more than that. There are AI agents that can trade for you. I have used a bot but not an AI agent as such, although I did take a small punt on an AI agent token that has been spectacularly bad and is down 99% lol. I could also rant about the number of dull and uninteresting copy-pasted AI articles that are around now. Some may argue that they are better than mine, but each to their own, I guess. But there is also a bad side to AI, and that is where we will have to be so much more careful.

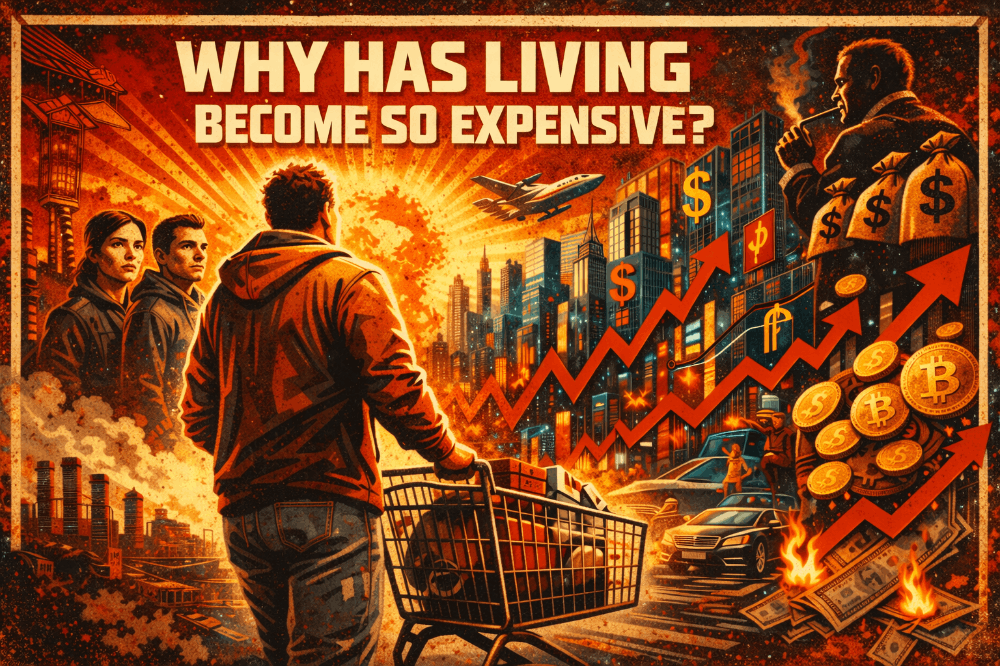

AI will be replacing jobs; that is an undeniable fact now. You don't need an audio typist or an assistant to write your letters and emails, and you won't really need writers. Yes, AI may not be the best at the moment, but it will only get better, and at a faster rate than us! Some newsletters are already being collated and sent out by AI, with one YouTuber saying he had not had to do anything on his weekly newsletter for months because AI was doing it for him! The list of jobs AI will do is huge, and it will do them faster, more efficiently, and without needing a lunch break!

To conclude

In my possibly old-fashioned view, AI isn’t about replacing human judgment; it’s about supporting it where we humans fail. People are bad at making decisions under pressure, interpreting complex technical prompts, and spotting subtle risks when emotions are involved. AI, used well, can fill those gaps. It adds context, can slow people down at the right moments, and can explain consequences before mistakes become irreversible. In a space like crypto, where one wrong click can mean permanent loss, that support could be really helpful.

But the same qualities that make AI useful also make it dangerous when misused. Automation scales mistakes just as efficiently as it scales safety. A poorly designed AI doesn’t just fail once; it can fail everywhere, instantly, and often invisibly. AI can make systems safer, mistakes rarer, and advanced tools accessible to normal people, or it can amplify risk faster than any human ever could. The outcome depends less on the technology itself and more on where we choose to put the final decision: with machines, or with humans who understand their limits.

One thing is for sure: AI can be used for good and bad, and we will definitely need to be more cautious in the crypto space. What are your thoughts? Do you think AI is just a great tool, or do you think we need to be worried? Will the good outweigh the bad when it comes to scams? Have you used AI to save you from a scam, or have you fallen for an AI scam?

As always, thank you for reading and please feel free to comment.
