The Ethics of AI: Steering Innovation Towards a Responsible Future

Artificial intelligence (AI), in its broadest sense, is intelligence exhibited by machines, particularly computer systems, as opposed to the natural intelligence of living beings. It is a field of research in computer science that develops and studies methods and software that enable machines to perceive their environment and use learning and intelligence to take actions that maximize their chances of achieving defined goals.[1] Such machines may be called AIs.

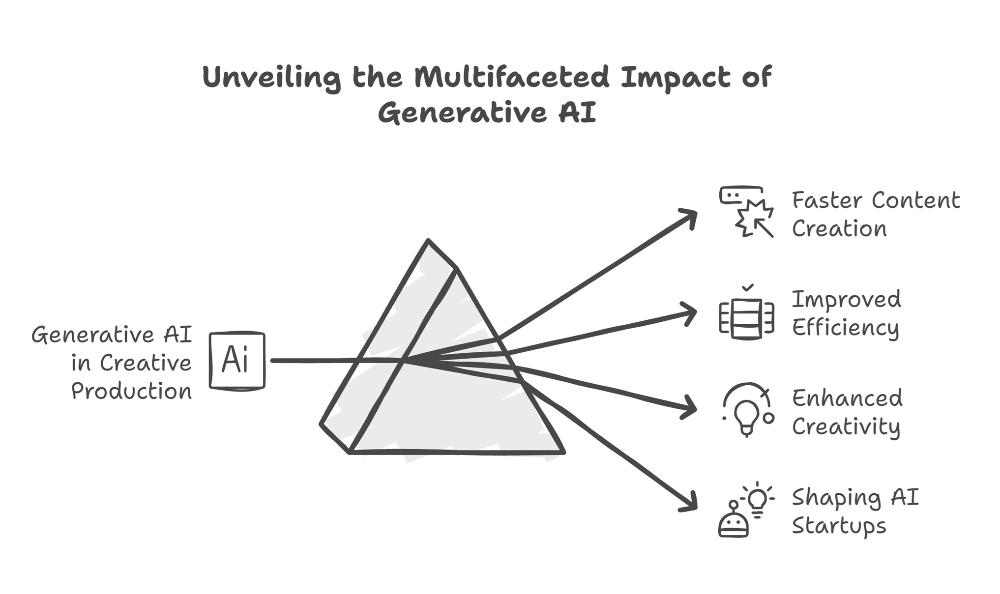

AI technology is widely used throughout industry, government, and science. Some high-profile applications include advanced web search engines (e.g., Google Search); recommendation systems (used by YouTube, Amazon, and Netflix); interacting via human speech (e.g., Google Assistant, Siri, and Alexa); autonomous vehicles (e.g., Waymo); generative and creative tools (e.g., ChatGPT and AI art); and superhuman play and analysis in strategy games (e.g., chess and Go).[2] However, many AI applications are not perceived as AI: "A lot of cutting edge AI has filtered into general applications, often without being called AI because once something becomes useful enough and common enough it's not labeled AI anymore."[3][4]

Artificial intelligence (AI) is rapidly transforming our world, bringing with it immense potential for progress in healthcare, transportation, and countless other fields. However, alongside this potential lies a responsibility to ensure AI is developed and used ethically. Biases, lack of transparency, and potential job displacement are just a few of the concerns that necessitate careful consideration.

One of the primary challenges with AI is bias. AI systems learn from data, and if that data is biased, the resulting algorithms can perpetuate discrimination. Imagine a loan approval system trained on historical lending decisions that favored certain demographic groups: the system could learn to reproduce that pattern and unfairly deny loans to qualified applicants from other groups. Mitigating bias requires representative training data and ongoing monitoring, such as comparing outcomes across demographic groups, to identify and address discriminatory results.
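
Ongoing monitoring of a loan system can start with something as simple as comparing approval rates across groups. The sketch below computes each group's approval rate and the ratio of the lowest rate to the highest, and flags the batch when that ratio falls below 0.8. The 0.8 cutoff borrows the "four-fifths rule" heuristic from US employment-discrimination guidance; the group labels, the data, and the function names are hypothetical, and a real audit would look at several fairness metrics rather than this one alone.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the fraction of approved applications per group.

    `decisions` is an iterable of (group, approved) pairs, where `approved`
    is a bool. Group labels here are hypothetical placeholders.
    """
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            approvals[group] += 1
    return {group: approvals[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group approval rate to the highest (1.0 = parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so approval rates do not differ
    return min(rates.values()) / highest


def flag_disparity(decisions, threshold=0.8):
    """Flag a batch whose ratio falls below `threshold` (four-fifths heuristic)."""
    rates = approval_rates(decisions)
    ratio = disparate_impact_ratio(rates)
    return rates, ratio, ratio < threshold


if __name__ == "__main__":
    # Hypothetical batch of loan decisions as (group, approved) pairs
    batch = (
        [("A", True)] * 60 + [("A", False)] * 40
        + [("B", True)] * 40 + [("B", False)] * 60
    )
    rates, ratio, flagged = flag_disparity(batch)
    print(rates, round(ratio, 3), flagged)
```

A flag from a check like this is a prompt for investigation, not proof of discrimination: differences in approval rates can have legitimate causes, which is why human review of flagged batches matters.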

Another concern is the lack of transparency in complex AI algorithms. It is often difficult to understand how an AI system arrives at a decision. This "black box" effect can erode trust and make errors hard to identify and correct. Explainable AI (XAI) is an emerging field that aims to address this by developing methods that explain how a model reached a particular decision, for example by showing which inputs influenced it most.
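
For a simple linear scoring model, an explanation can be computed exactly: each feature's contribution is its weight times the difference between the applicant's value and a baseline value, and these contributions add up to the gap between the applicant's score and the baseline score. The sketch below assumes a hypothetical credit-scoring model; the feature names, weights, bias, and "average applicant" baseline are invented for illustration. XAI methods such as SHAP and LIME aim to produce similar per-feature attributions for models that are not linear.

```python
def explain_linear_decision(weights, bias, applicant, baseline):
    """Split a linear model's score into per-feature contributions.

    Each contribution is weight * (applicant value - baseline value), so
    baseline_score + sum(contributions) equals the applicant's score exactly.
    """
    baseline_score = bias + sum(w * baseline[name] for name, w in weights.items())
    contributions = {
        name: w * (applicant[name] - baseline[name]) for name, w in weights.items()
    }
    score = baseline_score + sum(contributions.values())
    # Most influential features (largest absolute contribution) come first
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, baseline_score, ranked


if __name__ == "__main__":
    # Hypothetical model: a higher score means the applicant is more likely approved
    weights = {"income_k": 0.05, "debt_ratio": -4.0, "late_payments": -0.7}
    bias = -1.0
    baseline = {"income_k": 50, "debt_ratio": 0.3, "late_payments": 1}
    applicant = {"income_k": 40, "debt_ratio": 0.5, "late_payments": 3}

    score, base, ranked = explain_linear_decision(weights, bias, applicant, baseline)
    print(f"score {score:.2f} vs. baseline {base:.2f}")
    for name, value in ranked:
        print(f"  {name}: {value:+.2f}")
```

Here the ranked output tells a reviewer, in plain terms, that late payments lowered this applicant's score more than either debt ratio or income did.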

The potential impact of AI on employment is also a significant ethical consideration. While AI may create new jobs, it could also automate many existing ones, leading to unemployment and economic disruption. We need to prepare for a future where humans and AI work together, with policies that support retraining and reskilling the workforce.

So, how can we ensure responsible AI development and use? Here are some key strategies:

- Developing Ethical Guidelines: Creating clear ethical frameworks that prioritize fairness, transparency, and accountability is crucial. These guidelines should be developed collaboratively by engineers, policymakers, and ethicists.

- Building Diverse Teams: Encouraging diversity in AI development teams is essential to identify and mitigate potential biases. Teams with a variety of backgrounds and perspectives can create more robust and inclusive AI systems.

- Promoting Openness and Transparency: Researchers and developers should strive to make AI algorithms more transparent, opening them to scrutiny so that others can check whether they align with ethical principles.

- Prioritizing Human Oversight: AI should not replace human judgment, especially in critical decision-making processes. Humans should maintain ultimate control and accountability for AI systems.
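
Human oversight is often implemented as a routing rule: the system acts automatically only when the model is confident and the outcome is not adverse, and it sends everything else to a person. The sketch below assumes a model that reports a confidence score between 0 and 1; the 0.9 threshold, the "approve"/"deny" labels, and the class names are hypothetical. It also records every routing decision with a timestamp, which supports the accountability and transparency goals in the list above.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Decision:
    case_id: str
    model_output: str      # hypothetical labels: "approve" or "deny"
    confidence: float      # assumed model confidence in its output, 0..1
    route: str = ""        # "automatic" or "human_review"
    reason: str = ""


@dataclass
class OversightGate:
    """Send uncertain or adverse model outputs to a human reviewer and
    keep an audit log of every routing decision."""
    min_confidence: float = 0.9     # hypothetical threshold
    review_adverse: bool = True     # if True, every denial goes to a human
    audit_log: list = field(default_factory=list)

    def route(self, case_id, model_output, confidence):
        if not 0.0 <= confidence <= 1.0:
            raise ValueError("confidence must be between 0 and 1")
        d = Decision(case_id, model_output, confidence)
        if confidence < self.min_confidence:
            d.route, d.reason = "human_review", "low confidence"
        elif self.review_adverse and model_output == "deny":
            d.route, d.reason = "human_review", "adverse outcome"
        else:
            d.route, d.reason = "automatic", "confidence above threshold"
        # Record when and why each case was routed, for later auditing
        self.audit_log.append((datetime.now(timezone.utc).isoformat(), d))
        return d


if __name__ == "__main__":
    gate = OversightGate()
    for case in [("c1", "approve", 0.97), ("c2", "approve", 0.62), ("c3", "deny", 0.95)]:
        d = gate.route(*case)
        print(d.case_id, d.route, d.reason)
```

The threshold itself is a policy choice rather than a purely technical one: raising it sends more cases to reviewers, trading throughput for closer human control.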

By taking a proactive approach to AI ethics, we can harness its potential for good while mitigating its risks. Responsible AI development requires ongoing vigilance, collaboration, and a commitment to ensuring that this powerful technology serves humanity as a whole.