Generative AI: 12 Keywords You Need to Know Going Into 2024

Generated Using the Theme Explorer V1

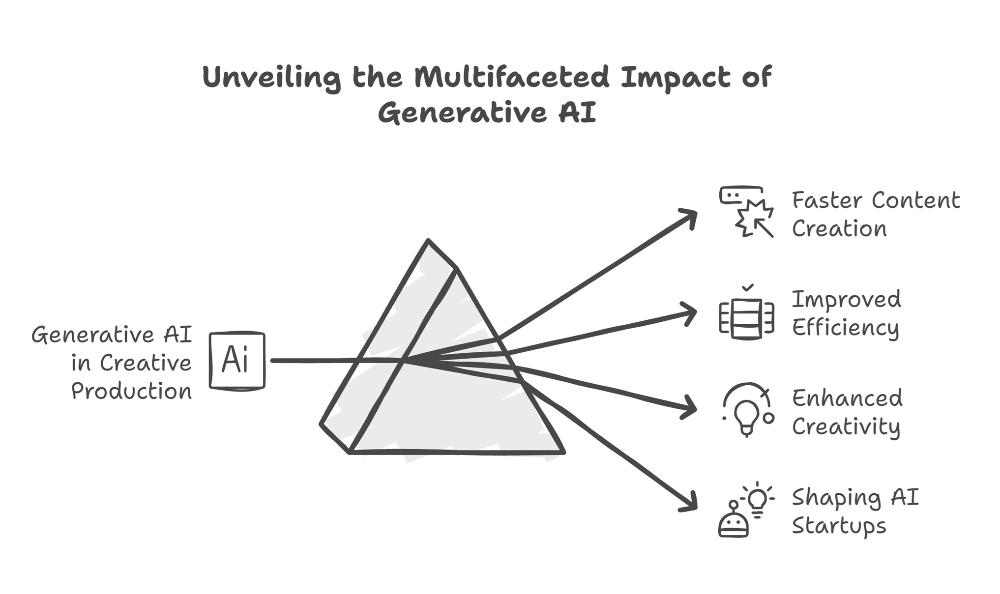

Generative AI is one of the most exciting and innovative fields of artificial intelligence, with applications ranging from art and music to medicine and engineering. But what exactly is generative AI, and what are some of the key terms and concepts you need to know to understand it better? In this article, we will explain 12 of the most common and important keywords related to generative AI and its recent developments.

Why Is This Necessary?

The main reason for creating this glossary is that many people need to know what exactly is being improved in some of the most popular LLM-powered tools, such as ChatGPT.

OpenAI’s “DevDay” featured several announcements of improvements to its models and chatbots, such as even bigger context windows, but many people may not even know what a context window is in the first place!

With generative AI making its way into the workplace, and many businesses already looking to incorporate it into their services, it is important to know at least a few key terms so you can stay on your toes.

OpenAI’s “Dev” Announcements

Keywords

The keywords start with the foundations and then build up in difficulty, so enjoy!

Note: This list is not comprehensive.

1. Generative AI

Generative AI refers to a type of artificial intelligence that creates new, original content, such as text, images, or music, based on patterns and examples it has learned from existing data.

2. LLM

LLM commonly stands for “Large Language Model.” It refers to advanced AI models, like GPT-3, designed to understand and generate human-like text on a large scale, enabling a broad range of natural language processing tasks.

They are trained on large datasets of text, which teaches them to predict the next word (more precisely, the next token) in a sequence. By learning from a large and diverse corpus, these models can capture general linguistic patterns and common-sense knowledge, but they may also inherit biases and errors from that data.
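Real LLMs use large neural networks over tokens, but the core idea of "predict the next word from patterns in the training text" can be illustrated with a toy bigram model that simply counts which word most often follows another. This is a deliberately simplified sketch, not how GPT-style models work internally, and the three-sentence corpus is made up.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def train_bigram(corpus: List[str]) -> Dict[str, Counter]:
    """Count how often each word is followed by each other word."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        # Pair every word with the word that comes right after it
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# A tiny, made-up training corpus
corpus = [
    "the sky is blue",
    "the sky is clear today",
    "the grass is green",
]
model = train_bigram(corpus)
print(predict_next(model, "the"))  # sky
print(predict_next(model, "sky"))  # is
```

Even this toy model shows the limitation mentioned above: it can only repeat the patterns (and biases) present in its training text.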

Examples include:

- GPT-3 and GPT-4 by OpenAI (Most Popular)

- Llama 1 and 2 by Meta (Open source)

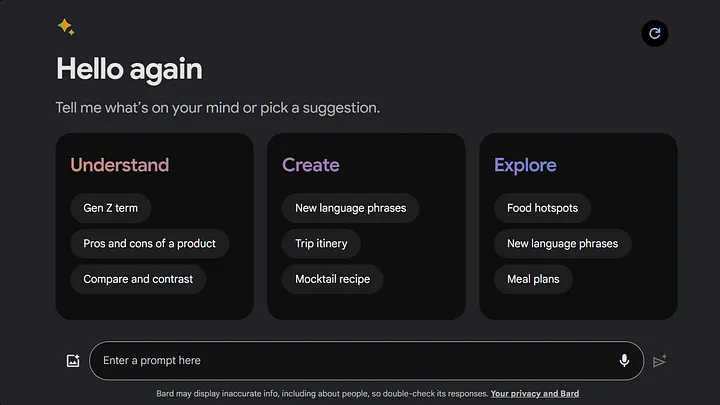

- Bard by Google

Snapshot of Google “Bard”

3. Chatbot

A chatbot is a type of computer program, often employing generative AI or large language models, that engages in conversation with users. It uses natural language processing to comprehend and respond to user input, serving purposes like providing information or assistance.

Chatbot Illustration Generated Using Theme Explorer V1

4. GPT

GPT, short for Generative Pre-trained Transformer, is a family of large language models developed by OpenAI. GPT models generate human-like text based on patterns learned from diverse data, showcasing advanced natural language processing capabilities.

Many AI applications, such as chatbots and writing assistants, are built on top of GPT models, but not all: others rely on different models, such as Meta’s Llama.

5. Prompt

A prompt is a specific input or request given to a generative AI model, like GPT, to elicit a response or generate content, often in a conversational manner, as seen in chatbots.

It is the main way to interact with an LLM, but depending on the model you are using, you can also provide files, images, and even audio as input.
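When you use a model programmatically rather than through a chat interface, the prompt is packaged into a structured request. The sketch below builds a request body in the shape used by OpenAI’s Chat Completions API: a `model` name plus a list of `messages`, each with a `role` and `content`. It only assembles the data and sends nothing; `build_chat_request` is a hypothetical helper, and the model name and prompts are placeholders.

```python
import json
from typing import Optional


def build_chat_request(user_prompt: str,
                       model: str = "gpt-4",
                       system_prompt: Optional[str] = None) -> dict:
    """Assemble a chat-style request body (hypothetical helper; nothing is sent)."""
    messages = []
    if system_prompt:
        # Optional instructions that steer the model's overall behaviour
        messages.append({"role": "system", "content": system_prompt})
    # The prompt itself travels as a "user" message
    messages.append({"role": "user", "content": user_prompt})
    return {"model": model, "messages": messages}


request = build_chat_request(
    "Suggest three names for a vegetarian cookbook.",
    system_prompt="You are a helpful cooking assistant.",
)
print(json.dumps(request, indent=2))
```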

6. Prompt Engineering

Prompt engineering involves strategically crafting inputs or queries to guide generative AI models in producing desired outputs. It’s a technique to influence the responses of systems like chatbots or language models like GPT.

Some examples of prompt engineering techniques include:

- Few-shot prompting

- ReAct prompting

- Chain-of-thought (CoT)
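Two of these techniques can be shown as plain prompt templates. Few-shot prompting places a handful of worked examples before the real input so the model can copy the pattern; zero-shot chain-of-thought appends an instruction such as "Let's think step by step." to encourage intermediate reasoning. The helper names and the sentiment-classification task below are illustrative assumptions; ReAct, which interleaves reasoning with tool calls, needs more machinery and is not shown.

```python
from typing import List, Tuple


def few_shot_prompt(examples: List[Tuple[str, str]], query: str) -> str:
    """Few-shot: show labelled examples first, then the new, unlabelled input."""
    lines = ["Classify the sentiment of each review as Positive or Negative.", ""]
    for review, label in examples:
        lines += [f"Review: {review}", f"Sentiment: {label}", ""]
    # Ending on "Sentiment:" invites the model to continue the pattern
    lines += [f"Review: {query}", "Sentiment:"]
    return "\n".join(lines)


def chain_of_thought_prompt(question: str) -> str:
    """Zero-shot chain-of-thought: ask the model to reason before answering."""
    return f"{question}\nLet's think step by step."


examples = [
    ("The food arrived hot and delicious.", "Positive"),
    ("My order was two hours late.", "Negative"),
]
print(few_shot_prompt(examples, "Great value for money!"))
print()
print(chain_of_thought_prompt(
    "A recipe for 4 people uses 200 g of rice. How much rice is needed for a family of 5?"
))
```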

7. Tokens

Tokens, in the context of language models, are the individual units of text a model reads and writes, such as whole words, parts of words, or single characters. They form the basis for comprehension and generation within generative AI models like GPT when processing text.

You can use OpenAI’s tokenizer to see how many tokens you are using for a prompt.

Generate a recipe list for organic and meat-free dishes for a family of 5. Ensure there are no nuts present in any of recipes and include details about macronutrients.

OpenAI’s Tokenizer

So this 29-word prompt comes to around 36 tokens. The exact ratio will vary depending on which model (and therefore which tokenizer) you are using.
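GPT models split text using byte pair encoding (BPE), and OpenAI’s open-source `tiktoken` library implements the real tokenizers. To show the basic idea of breaking words into subword pieces without any dependencies, the sketch below uses a simpler greedy longest-match strategy with a tiny, made-up vocabulary; it will not reproduce the token counts of any real model.

```python
from typing import List, Set


def greedy_subword_tokenize(text: str, vocab: Set[str]) -> List[str]:
    """Split each whitespace-separated word into the longest matching vocabulary pieces.

    Any character not covered by the vocabulary becomes a single-character token.
    """
    tokens: List[str] = []
    for word in text.split():
        start = 0
        while start < len(word):
            # Try the longest remaining piece first, shrinking until it is in the vocab
            end = len(word)
            while end > start + 1 and word[start:end] not in vocab:
                end -= 1
            tokens.append(word[start:end])
            start = end
    return tokens


# A tiny, made-up vocabulary; real tokenizers learn tens of thousands of pieces or more
vocab = {"recipe", "s", "macro", "nutrient", "meat", "-", "free", "un", "believ", "able"}
tokens = greedy_subword_tokenize("recipes macronutrients meat-free", vocab)
print(tokens)       # ['recipe', 's', 'macro', 'nutrient', 's', 'meat', '-', 'free']
print(len(tokens))  # 8
```

Three words become eight tokens here, which is why token counts usually run higher than word counts.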

8. Context Window / Context Length

A context window, or context length, in the realm of language models, refers to the maximum number of tokens a model can take into account at once, covering both the input (such as your prompt and the conversation so far) and the text it generates.

Most recently, OpenAI announced GPT-4 Turbo, which has a context window of 128,000 tokens; OpenAI describes this as the equivalent of more than 300 pages of text.
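Because the window is fixed, chat applications have to decide what to drop once a conversation grows too long. One simple strategy, sketched below under the assumption that keeping only the newest messages is acceptable, walks backwards through the history until the token budget is spent. The `rough_count` helper is a crude stand-in based on OpenAI’s rule of thumb that 100 tokens is roughly 75 English words; a real application would count with the model’s actual tokenizer.

```python
from typing import Callable, List


def rough_count(text: str) -> int:
    """Rough token estimate: about 4 tokens for every 3 words (not a real tokenizer)."""
    return max(1, round(len(text.split()) * 4 / 3))


def fit_to_context(messages: List[str], max_tokens: int,
                   count_tokens: Callable[[str], int] = rough_count) -> List[str]:
    """Keep the most recent messages whose combined token count fits in max_tokens."""
    kept: List[str] = []
    total = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = count_tokens(message)
        if total + cost > max_tokens:
            break  # this message and everything older is dropped
        kept.append(message)
        total += cost
    return list(reversed(kept))  # restore chronological order


history = [
    "Hi there",
    "Hello! How can I help?",
    "Plan a meal for five people",
    "Sure, here is a plan...",
]
print(fit_to_context(history, max_tokens=15))
```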

9. Fine-Tuning

A fine-tuned model is an AI model that has undergone additional training on specific data or tasks after its initial pre-training. This process refines the model’s performance for particular applications or domains.

Common use cases of fine-tuning include creating specialized chatbots that you might see in your mobile banking app or when online shopping.

NatWest recently announced a partnership with IBM to develop more human-like chatbots using generative AI, which will likely involve fine-tuning a model on NatWest’s own financial data.

NatWest Partners with IBM
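Fine-tuning data is usually a file of example conversations showing the behaviour you want. OpenAI’s fine-tuning for chat models, for instance, expects JSON Lines (one JSON object per line), where each object holds a `messages` list of system, user, and assistant turns. The sketch below builds such a file from question/answer pairs; the banking questions and answers are invented for illustration and are not NatWest’s data, and `to_chat_jsonl` is a hypothetical helper.

```python
import json
from typing import List, Tuple

# Invented question/answer pairs for a hypothetical banking assistant
examples: List[Tuple[str, str]] = [
    ("How do I freeze my card?",
     "You can freeze your card in the app under Card settings."),
    ("Where can I find my sort code?",
     "Your sort code is shown on the Account details screen."),
]


def to_chat_jsonl(pairs: List[Tuple[str, str]], system_prompt: str) -> str:
    """Serialise Q&A pairs as JSON Lines, one training conversation per line."""
    lines = []
    for question, answer in pairs:
        record = {"messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": question},
            # The assistant turn is the behaviour the model should learn to imitate
            {"role": "assistant", "content": answer},
        ]}
        lines.append(json.dumps(record))
    return "\n".join(lines)


jsonl = to_chat_jsonl(examples, "You are a concise, friendly banking assistant.")
print(jsonl)
```

In practice this text would be saved to a `.jsonl` file and uploaded to the fine-tuning service, and a useful dataset needs many more than two examples.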

10. Attention Mechanism

An attention mechanism in AI refers to a component that enables models to focus on specific parts of input data when making decisions or generating outputs. It helps capture relevant information, enhancing performance.
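The attention used in Transformers (the "T" in GPT) is usually scaled dot-product attention, softmax(QKᵀ / √d)V: each position’s key is compared with a query, the scores become weights, and the output is a weighted average of the values. The dependency-free sketch below computes it for a single query vector; the vectors are made up, and real models learn Q, K, and V from the input and run many attention heads in parallel.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query: Vector, keys: List[Vector], values: List[Vector]) -> Tuple[Vector, Vector]:
    """Scaled dot-product attention for one query; returns (weights, output)."""
    d = len(query)
    # Score each position by how well its key matches the query, scaled by sqrt(d)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)  # attention weights: non-negative and sum to 1
    # The output is the weighted average of the value vectors
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
    return weights, output


keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
weights, output = attention([1.0, 0.0], keys, values)
print([round(w, 3) for w in weights])
print([round(x, 2) for x in output])
```

The query matches the first and third keys equally and the second not at all, so those two positions receive most of the weight and the output leans toward their values.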

11. Temperature

Temperature is a common sampling parameter, often adjusted alongside prompt engineering, that influences the randomness of generated outputs. Higher values increase randomness, producing more diverse responses, while lower values result in more deterministic and focused outputs.

For example, suppose we want to generate a sentence that starts with “The sky is”. If we use a low temperature value, such as 0.1, the model might produce something like “The sky is blue and clear”. This is a reasonable and common sentence, but not very creative. If we use a high temperature value, such as 1.0, the model might produce something like “The sky is a canvas of dreams”.
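Under the hood, temperature divides the model’s raw scores (logits) for each candidate next token before they are turned into probabilities with a softmax: a low temperature sharpens the distribution toward the top choice, and a high one flattens it. The scores below are invented for the "The sky is" example and the helper names are illustrative; many APIs treat a temperature of 0 as always picking the top token, which this sketch simply rejects.

```python
import math
import random
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Turn raw scores into probabilities after dividing them by the temperature."""
    if temperature <= 0:
        # Many APIs treat 0 as "always pick the top token"; this sketch just rejects it
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_word(words: List[str], logits: List[float], temperature: float,
                     rng: random.Random) -> str:
    """Draw one word at random according to the temperature-adjusted probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(words, weights=probs, k=1)[0]


# Invented scores for candidate words after "The sky is"
words = ["blue", "clear", "canvas"]
logits = [3.0, 2.0, 0.5]
for t in (0.1, 1.0):
    print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
print(sample_next_word(words, logits, 1.0, random.Random(42)))
```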

12. Embeddings

Embeddings are numerical representations of words or entities in a way that captures their semantic relationships. They enable AI models, like language models, to understand and process language effectively.

For example, an entire sentence can be converted into a single vector (a list of numbers), and sentences with similar meanings end up with vectors that lie close together.
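Closeness between embeddings is commonly measured with cosine similarity, which compares the directions of two vectors. The three-dimensional vectors below are hand-made for illustration only; real embedding models produce vectors with hundreds or thousands of dimensions (OpenAI’s `text-embedding-ada-002`, for example, returns 1,536).

```python
import math
from typing import Dict, List


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hand-made vectors for illustration only, not the output of a real embedding model
embeddings: Dict[str, List[float]] = {
    "cat":    [0.90, 0.80, 0.10],
    "kitten": [0.85, 0.90, 0.15],
    "car":    [0.10, 0.20, 0.95],
}
for other in ("kitten", "car"):
    score = cosine_similarity(embeddings["cat"], embeddings[other])
    print(f"cat vs {other}: {score:.3f}")
```

"cat" scores much closer to "kitten" than to "car", which is the kind of semantic relationship embeddings are meant to capture.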