The Ethics of Artificial Intelligence: Addressing Bias and Accountability

Artificial Intelligence (AI) is rapidly spreading into many aspects of our lives, from healthcare and finance to education and transportation. While the potential benefits of AI are vast, its widespread adoption also raises significant ethical concerns, particularly around bias and accountability. As AI systems become more integrated into society, these issues must be addressed so that AI technologies are developed and deployed responsibly.

Understanding Bias in AI

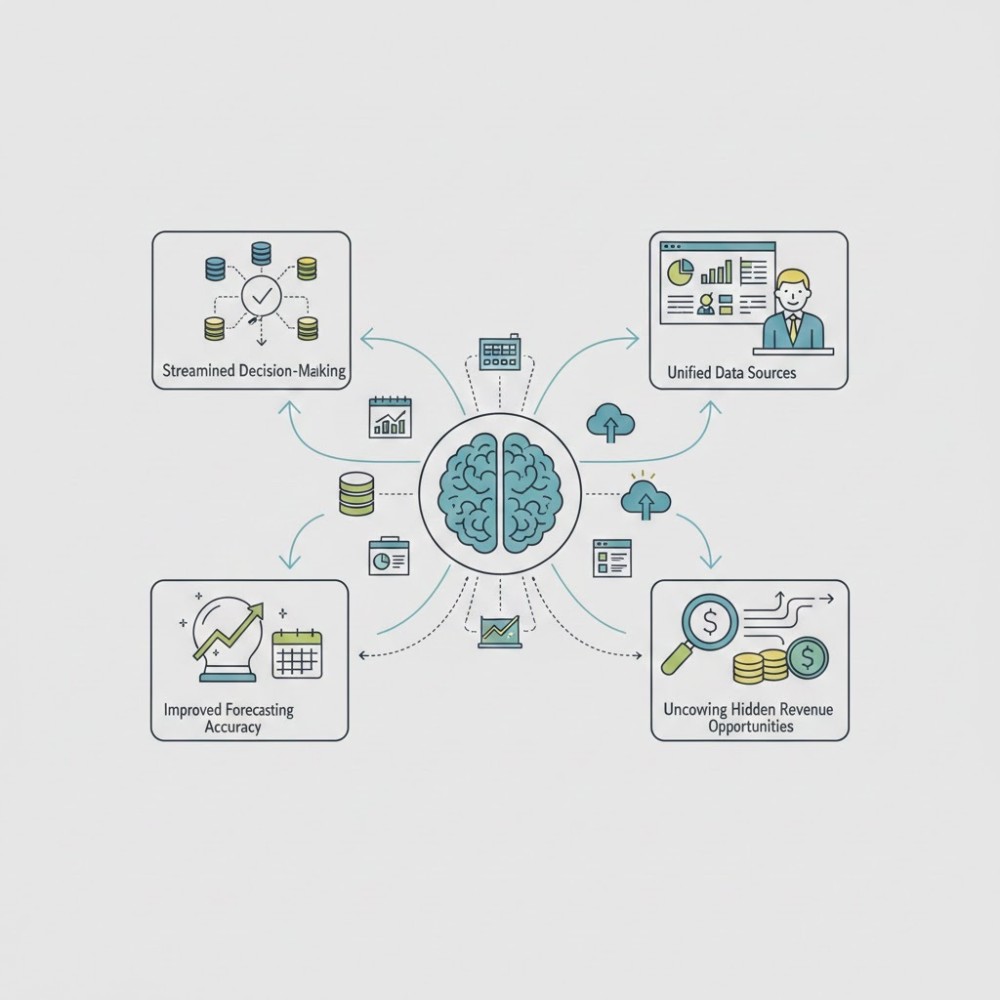

One of the most pressing ethical concerns in AI is bias in algorithms. AI systems learn from data, so a system trained on biased data can reproduce those biases and, in some cases, amplify them. Bias can take many forms, including racial, gender, socioeconomic, and cultural bias. For example, a biased hiring algorithm may favor certain demographic groups over others, leading to unfair outcomes and reinforcing systemic inequalities.
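To make the hiring example concrete, the sketch below compares selection rates across groups and reports the ratio of the lowest rate to the highest. This is only one of several possible fairness checks, and everything in it is an assumption for illustration: the function names, the group labels "A" and "B", and the decision data are hypothetical. The 0.8 threshold follows the "four-fifths" rule of thumb used in US employment guidance and is a screening heuristic, not a definition of fairness.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the fraction of positive outcomes for each group.

    records: iterable of (group, was_selected) pairs.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest selection rate to the highest one."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so rates are equal
    return min(rates.values()) / highest


# Hypothetical hiring decisions: (group, was_selected)
decisions = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)

# Assumed screening threshold (four-fifths rule of thumb)
flagged = ratio < 0.8
print(rates, ratio, flagged)
```

A low ratio does not prove that the algorithm is unfair, and a high one does not prove that it is fair. It is a signal that the outcomes deserve a closer look.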

Bias in AI can stem from several sources, including biased training data, biased algorithmic design, and biased decision-making processes. Addressing it requires a multifaceted approach that spans data collection, algorithm design, and ongoing monitoring and evaluation. Training data should be curated carefully so that it does not encode unfair patterns, and fairness-aware algorithms should be used to detect and correct bias when decisions are made.
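One way to act on the training data itself is reweighing, a pre-processing technique that assigns each training example a weight so that group membership and outcome label look statistically independent in the weighted data. The sketch below is a minimal illustration of that idea, not a complete mitigation strategy. The function name, the groups, and the labels are hypothetical.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Compute one weight per training example.

    Each weight is P(group) * P(label) / P(group, label), estimated from
    counts. Combinations that are under-represented relative to
    independence get weights above 1, over-represented ones below 1.
    """
    n = len(groups)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    pair_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * pair_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


# Hypothetical training set: group A is mostly labeled 1, group B mostly 0
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)


def weighted_positive_rate(group):
    """Weighted share of positive labels within one group."""
    rows = [(w, y) for w, g, y in zip(weights, groups, labels) if g == group]
    return sum(w for w, y in rows if y == 1) / sum(w for w, _ in rows)


print(weighted_positive_rate("A"), weighted_positive_rate("B"))
```

With these weights, both groups have the same weighted positive rate. A model trained with them, for instance through a sample-weight parameter where the training library supports one, no longer sees the label imbalance between groups, although other sources of bias may remain.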

The Importance of Accountability

In addition to addressing bias, ensuring accountability in AI is crucial for ethical development and deployment. AI systems can have far-reaching impacts on individuals and society as a whole, making it essential to establish clear lines of accountability for the decisions made by AI systems. However, determining who is responsible when AI systems make mistakes or cause harm can be complex, particularly when multiple stakeholders are involved.

Accountability in AI requires transparency, explainability, and oversight throughout the AI lifecycle. Developers must be transparent about how AI systems are designed, trained, and deployed so that outside parties can scrutinize them. Moreover, AI systems should be designed to explain their decisions, so that users can understand the rationale behind an outcome and seek recourse in cases of error or harm.
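As a small illustration of what an explanation can look like, the sketch below breaks a linear scoring decision into per-feature contributions. It assumes a simple linear model, where each contribution is just weight times value. More complex models need dedicated explanation methods. The feature names, weights, bias, and threshold are all hypothetical.

```python
def explain_linear_decision(weights, bias, features, threshold=0.0):
    """Score one case with a linear model and report why.

    Returns the decision, the score, and each feature's contribution
    (weight * value), ordered from most to least influential.
    """
    contributions = {
        name: weights[name] * value for name, value in features.items()
    }
    score = bias + sum(contributions.values())
    ranked = sorted(
        contributions.items(), key=lambda item: abs(item[1]), reverse=True
    )
    return {
        "approved": score >= threshold,
        "score": score,
        "contributions": ranked,
    }


# Hypothetical loan-style model and applicant
model_weights = {"income": 0.5, "debt": -1.0, "years_employed": 0.25}
model_bias = -1.0
applicant = {"income": 2.0, "debt": 1.5, "years_employed": 4.0}

result = explain_linear_decision(model_weights, model_bias, applicant)

for name, contribution in result["contributions"]:
    print(f"{name}: {contribution:+.2f}")
print("approved:", result["approved"])
```

An output like this gives the affected person something specific to contest or correct, here the debt figure, which is the kind of recourse that explainability is meant to support.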

Regulatory Frameworks and Ethical Guidelines

To address the ethical challenges of AI, governments, industry stakeholders, and professional organizations have begun to develop regulatory frameworks and ethical guidelines. These frameworks aim to establish principles for the responsible development and deployment of AI systems, including requirements for transparency, fairness, accountability, and privacy protection.

Regulatory bodies play a critical role in enforcing these principles and ensuring compliance with ethical standards. By establishing clear guidelines and standards for AI development and deployment, regulatory frameworks can help mitigate ethical risks and promote trust in AI technologies.

The ethical challenges posed by AI, particularly those concerning bias and accountability, call for careful consideration and proactive measures. As AI technologies continue to evolve and proliferate, ethical considerations must be a priority in their development and deployment. By addressing bias, ensuring accountability, and adhering to regulatory frameworks and ethical guidelines, we can harness the potential of AI to benefit society while minimizing its harms. Ultimately, fostering a culture of ethical AI requires collaboration and commitment from all stakeholders, including developers, policymakers, and users, so that AI serves the common good and upholds the principles of fairness, transparency, and accountability.