The Fear of Artificial Intelligence

Artificial intelligence (AI) refers to computer systems that can perform tasks normally requiring human intelligence, such as visual perception, speech recognition, and decision-making. While AI has led to many innovations that benefit society, the prospect of intelligent machines has also long evoked fear and concern among some groups. This apprehension is often referred to as the "AI fear factor."

Reasons for the AI Fear Factor

Lack of Understanding

One of the biggest drivers of AI fear is a lack of public understanding about what AI actually is and how it works. The term "artificial intelligence" conjures up sci-fi images of conscious robots and computers turning against humans. But the reality of most present-day AI systems is far less dramatic or dangerous. When people do not comprehend a technology, it is natural for them to distrust it or presume worst-case scenarios.

Surveys suggest that many people cannot correctly define artificial intelligence, and that many overestimate the capabilities of current systems. This knowledge gap leaves room for misinformation and unrealistic fears to spread. Education can help demystify AI and alleviate unfounded anxieties, but limited technological literacy remains a roadblock.

Concerns About Job Automation

Another major source of apprehension is that AI will automate many jobs and displace human workers. It is true that as AI continues improving, it will transform the workplace and make some careers obsolete. Recent years have seen machine learning systems surpass humans at certain tasks in areas like pattern recognition and data analysis. This fuels concerns that AI will create widespread unemployment.

However, experts debate whether AI will actually destroy more jobs than it generates. New professions may emerge to build, operate and maintain AI systems. Human abilities like creativity and empathy will remain difficult to automate. With the right policies in place, AI can augment human skills rather than replace them. But uncertainties about the economic impact contribute to general unease.

Fear of the Unknown

AI evokes the fear of the unknown that has recurred throughout human history. People are intrinsically anxious about phenomena they cannot comprehend or predict. When a new technology fundamentally alters society in unforeseeable ways, it triggers unease and opposition. Historically, innovations from mechanical looms to electricity met initial resistance, driven partly by fear of their social repercussions.

AI represents the unknown risks and disruptions that all radically transformative technologies bring. Its hypothetical futures range from utopian to apocalyptic. People fear the uncertain consequences of handing decision-making powers over to thinking machines. Science fiction amplifies this anxiety by portraying AI running amok. But vivid worst-case scenarios can crowd out rational evaluation of risks versus benefits. Managing the inevitable uncertainties of AI is critical.

Concerns About Bias

In recent years, studies have shown that many AI systems inadvertently automate or amplify biased human decisions. Machine learning algorithms train on data that often reflects societal biases and unfairness. Although AI developers strive for neutrality, they can unknowingly encode gender, racial or ideological prejudices into algorithms. AI decision-making then applies those biases at scale.

For example, some facial recognition tools have higher error rates for women and people of color. Some hiring algorithms have disadvantaged applicants with non-traditional resumes. Targeted advertising systems can exclude underrepresented groups. The black-box nature of some AI makes it hard to audit systems for fairness. This well-founded concern that biased AI can disrupt people's lives adds to general apprehension. More diverse tech teams and explainable AI may help address the root causes.

Loss of Human Agency and Control

Critics argue that over-reliance on AI and automation threatens to undermine human agency and autonomy. As machines get smarter, people may cede important choices to algorithms, and algorithmic decisions that embed value judgments could restrict users' freedom and control. For instance, AI content filters could limit online expression, personalized ads might manipulate purchasing preferences, and product recommendation engines could narrow consumer options.

Some believe AI should remain a tool under full human direction. Others argue, more modestly, that at least the key decisions with moral dimensions or social impacts require human oversight. Fears that autonomous intelligent systems will constrain free will and human control fuel AI skepticism. However, thoughtfully defining the balance of power between people and technology can mitigate these risks.

Concerns About Superintelligence

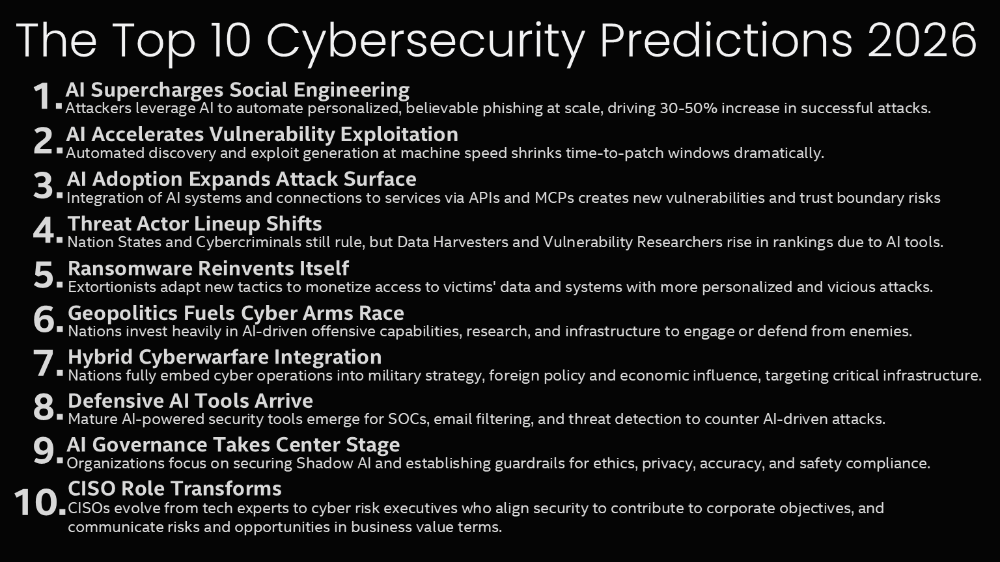

A more speculative reason people fear AI is the possibility of a “superintelligence” arising in the future. While modern AI displays only narrow capabilities, theorists propose that artificial general intelligence (AGI), with the capacity to learn any intellectual task, could eventually emerge. Some believe such a system could then trigger an intelligence explosion, recursively self-improving and rapidly surpassing all human intellect.

A superintelligence with incomprehensible processing power, often evoked through fictional names like “Skynet,” alarms many. Its inhuman cognitive abilities and unfathomable motivations could make its decisions catastrophic for humanity. AI safety research seeks ways to align such AI with human values. But fundamental doubts that superintelligent AI could ever be controlled responsibly underlie many doomsday scenarios.

Distrust of Big Tech Companies

Public wariness about AI is intertwined with growing distrust of the large tech corporations leading AI development, such as Google, Amazon and Microsoft. Critics accuse big tech of misusing private data, spreading misinformation, avoiding regulation and fomenting social ills like internet addiction. So when Silicon Valley promises transformative AI, people question whether the benefits will outweigh the risks.

Because advanced AI requires massive datasets and computing resources, it concentrates power with tech giants. Their opacity exacerbates suspicions that AI research will disproportionately advantage corporate interests over collective welfare. Given the enormous implications, many believe guiding AI for the common good is too important to leave to Big Tech alone. Tech ethics reforms could help build public trust, but AI progress that outpaces accountability stokes fears.

Apprehensions About Autonomous Weapons

Lethal autonomous weapons (LAWs) are among the most feared applications of AI. Also called “killer robots,” LAWs are systems capable of selecting and attacking targets without human intervention. AI-enabled weapons could potentially select and engage targets faster and more accurately than people. But many oppose granting machines authority over life-and-death decisions without human judgment and accountability.

Abandoning meaningful human control over AI weapons systems strikes many as an unacceptable surrender of moral responsibility. Leaders such as the UN Secretary-General and the Pope have called for banning LAWs. There are also concerns that autonomous cyberweapons could cripple critical infrastructure and provoke military escalation. Fears that unchecked AI capabilities could enable devastating conflicts add to general misgivings about the technology.

Arguments Against the AI Fear Factor

Exaggerated Risks

Skeptics of these fears counter that they are often exaggerated, arguing that the risks are speculative or remain far in the future. Today’s AI systems are not capable of universally surpassing or outwitting humans. Artificial general intelligence indistinguishable from human cognition exists only in fiction. While some routine tasks are being automated, overall technological unemployment remains low. AI catastrophes make attention-grabbing headlines but are unlikely.

According to this view, scaremongering about superintelligent AI is counterproductive. It distracts from more pressing issues like algorithmic bias and workforce displacement, and addressing these immediate challenges does not require restricting AI progress. These skeptics suggest the AI fear factor often derives from sensationalism and anthropomorphizing. Instead of panic, they call for pragmatic governance and risk assessment.

Potential for Societal Benefits

AI supporters contend that its potential benefits for health, education, science and other public goods outweigh long-term risks. Artificial intelligence is already assisting doctors, teaching students, discovering materials and predicting natural disasters. With responsible development, AI can keep empowering human capabilities. Relinquishing these breakthroughs because of abstract worries would set back human progress.

Restricting AI over speculative harms risks forfeiting concrete gains. Proponents argue AI has brought new conveniences and choices to everyday life: machine creativity augments human expression, and intelligent assistants aid millions. Rather than resign themselves to a "bad future," visionaries work to shape AI positively. To them, dismissing real progress because of hypothetical risks seems misguided; they prioritize AI's near-term potential over uncertain long-term perils.

AI Already Embedded in Society

Pragmatists caution that opposing AI development may already be impractical given its expanding capabilities and applications. Much of modern technology relies on machine learning and automation. Facial recognition, finance software, virtual assistants, content filters and product recommendations pervade digital services. Advanced AI powers science, medicine, the arts and more. The fundamentals are deeply woven into industry and infrastructure.

Attempting to relinquish these systems could be highly disruptive. Entire sectors like technology, manufacturing, retail and transportation increasingly depend on AI-driven efficiency. Although concerns about over-automation are fair, complex technologies have rarely been successfully banned once embedded. Pragmatists argue that AI fear can inspire sensible limits and oversight but should not wholly obstruct progress. Whatever its risks, AI is an inextricable reality that needs guidance.

The Problem Is Human Values, Not Technology

Some thinkers argue AI technology itself is value-neutral rather than an inherent threat. How humans wield AI tools determines their impact. For instance, facial recognition could enhance security or enable oppression, depending on how it is applied. Biased algorithms partly reflect prejudiced data. Autonomous weapons provide capabilities that may be misused. But the underlying technologies have no intentional morality or goals.

From this perspective, AI just expands the repertoire of human action. Its risks and benefits ultimately stem from human choices and institutions. If societies implement AI irresponsibly, problems result. But addressing root causes like inequality, conflict and exploitation could prevent harms. Since progress is inevitable, focusing on instilling justice, ethics and wisdom to govern technology offers the most control. The problem resides in human values and systems, not AI itself.

Preparing for the Future

Whatever their perspective on AI, most experts agree that proactive preparation is crucial. The coming waves of change will challenge society one way or another. But measures like planning for workforce transitions, democratizing technology and teaching media literacy can smooth the ride. Policy debates continue, but adapting educational systems to equip citizens of the future is wise.

Some recommend buttressing institutions for oversight, establishing guidelines to encode ethics, and generating mass dialogue about hopes and concerns. Foresight and collective responsibility can direct AI down positive pathways. Technology designed for people and inclusive prosperity is possible. Fear of AI does not have to be paralyzing if preemptive vision and courage guide society.

Conclusion

AI will undoubtedly bring both progress and dilemmas. But if people face the complex journey thoughtfully, perhaps machines can enhance humanity rather than subsume it. This begins with demystifying AI, assessing it rationally, and above all mastering the wisdom that determines how we shape the future.