AI Ethical Risks

Artificial intelligence (AI) refers to digital systems that automate or replicate intelligent behaviour. Researchers set out to create human-like machines, drawing on machine learning, generative AI, language recognition, facial recognition, robotics, computer vision and other capabilities comparable to those of humans.

According to PricewaterhouseCoopers (2023), AI could contribute up to USD 15.7 trillion to the global economy by 2030.

However, the use of AI also raises many ethical issues. One trend is the use of machine learning algorithms to predict what people are likely to read based on their past choices. This has led to an unethical phenomenon known as the “filter bubble”, in which people are fed stories that reflect only their own viewpoint, giving them an ever more biased perspective on the world. As generative AI such as chatbots evolves and improves, certain jobs will be displaced, and the people left behind could become a source of social tension. Firms that use AI to scan job applicants' CVs are being challenged over applicants' right to an explanation when they are rejected, which makes AI explainability an ethical concern. More broadly, the opacity of AI inputs, outputs and algorithms creates ethical risk-management and governance problems for firms.
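The filter-bubble mechanism described above can be illustrated with a deliberately simplified sketch. It assumes a hypothetical catalogue in which each article carries a single viewpoint tag, and a hypothetical recommender that simply favours the viewpoint the reader has clicked most often; real recommender systems are far more complex.

```python
from collections import Counter

# Hypothetical catalogue: each article is tagged with one viewpoint.
CATALOGUE = [
    ("Article A", "left"), ("Article B", "right"), ("Article C", "centre"),
    ("Article D", "left"), ("Article E", "right"), ("Article F", "left"),
]

def recommend(history, catalogue, k=3):
    """Rank unread articles by how often the reader already chose their viewpoint."""
    counts = Counter(viewpoint for _, viewpoint in history)
    unread = [item for item in catalogue if item not in history]
    # Articles matching the most-read viewpoint float to the top;
    # sorted() is stable, so ties keep catalogue order.
    ranked = sorted(unread, key=lambda item: counts[item[1]], reverse=True)
    return ranked[:k]

def simulate(first_click, rounds=2):
    """Feed the reader's top recommendation back into their history each round."""
    history = [first_click]
    for _ in range(rounds):
        recs = recommend(history, CATALOGUE)
        if not recs:
            break
        history.append(recs[0])  # assume the reader clicks the top suggestion
    return history

print(simulate(("Article A", "left")))
```

Starting from a single “left” click, every subsequent recommendation the reader follows is another “left” article: past choices narrow future exposure, which is the feedback loop behind a filter bubble.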

According to Schuett (2023), the AI Act published by the European Commission in 2021 was the first comprehensive set of rules for regulating AI that firms can follow, but these regulations are still evolving alongside the technology.

Six categories of AI risks

Wirtz et al. (2022) identified six key categories of risk relevant to AI deployment that need attention:

1. Technological and data AI risks – e.g. algorithmic opacity: we do not know what an AI system is “thinking” or how it reaches decisions as it evolves through machine learning. This would be even more alarming if AI developed human-like self-awareness; there is a rumour that OpenAI has developed such a self-aware AI. This implies that transparency is a must.

2. Informational and communication AI risks – e.g. selective provision of information and manipulation. This allows people to live in filter bubbles of information, like China's Cultural Revolution, except this time through our computing devices.

3. Economic AI risks – e.g. disruption to the labour market as AI replaces roles.

4. Social AI risks – e.g. data privacy breaches and human rights violations caused by biased AI decisions.

If an AI system that scans job applicants' CVs is trained on a sample dominated by male applicants, it may conclude that most women are not capable of meeting a specific job specification and reject their applications.

5. Ethical AI risks – e.g. a lack of empathy in AI decisions that threatens human values.

6. Legal and regulatory AI risks – e.g. governing the performance and safety of AI systems, and keeping up with the fast pace of the AI revolution.
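The CV-screening example in category 4 above can be made concrete with a toy sketch. It assumes hypothetical historical hiring records and a naive hypothetical “model” that only learns each gender's past hire rate and rejects applicants whose group rate falls below a threshold. No real screening product is implied; the point is to show how a skewed training sample becomes a biased rule.

```python
from collections import defaultdict

# Hypothetical historical records: (gender, was_hired). The sample is male-dominated
# and reflects past hiring patterns, not candidates' actual ability.
HISTORY = ([("male", True)] * 40 + [("male", False)] * 40
           + [("female", True)] * 2 + [("female", False)] * 8)

def learn_hire_rates(records):
    """Naive 'training': estimate the past hire rate for each gender."""
    hired = defaultdict(int)
    total = defaultdict(int)
    for gender, was_hired in records:
        total[gender] += 1
        hired[gender] += int(was_hired)
    return {g: hired[g] / total[g] for g in total}

def screen(applicant_gender, rates, threshold=0.3):
    """Accept only if the applicant's group had a historical hire rate >= threshold."""
    return rates.get(applicant_gender, 0.0) >= threshold

rates = learn_hire_rates(HISTORY)
print(rates)                     # {'male': 0.5, 'female': 0.2}
print(screen("female", rates))   # False
```

The rule rejects every female applicant regardless of qualifications, because the only signal it learned is the imbalance in the historical sample.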

Therefore, firms need to set a vision and strategy for implementing AI in an ethical and responsible manner towards their stakeholders: shareholders, customers, employees, regulators, communities and business partners.

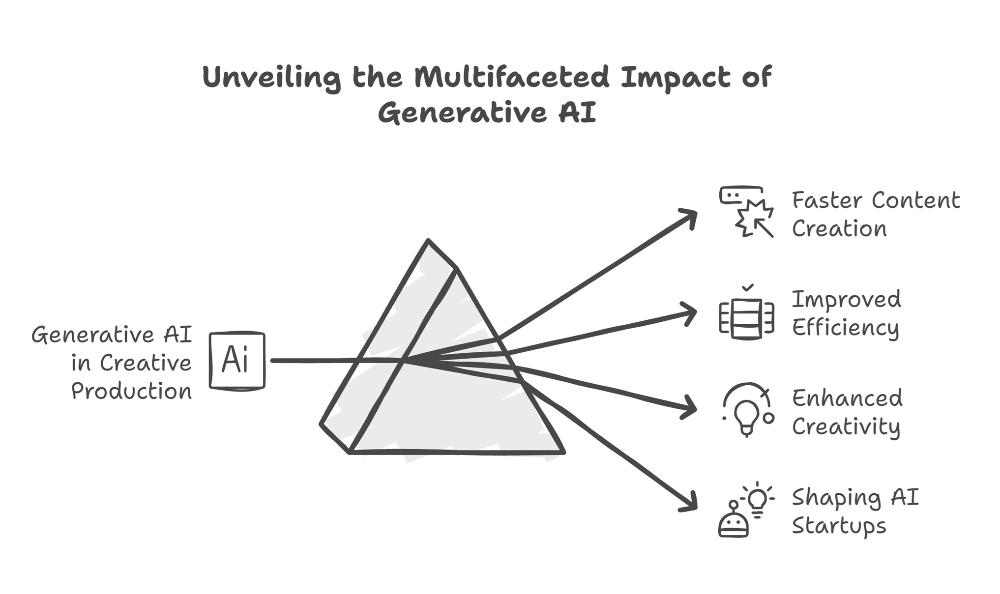

AI Value Creation

When the above risks are controlled, AI can create real value. According to British Telecom (2020), AI value creation is connected to five major trends:

1. Filter bubbles (e.g. analysing viewing history)

2. Augmented intelligence (e.g. chatbots)

3. Machine learning (e.g. autonomous vehicles)

4. Explainable AI (e.g. facial recognition)

5. Cyber security (e.g. blocking suspicious activity)

Current concerns about applying AI in firms:

The first concern in managing AI is keeping up with regulators and aligning the firm with uncertain, still-evolving regulation.

The second concern is data quality: “garbage in” results in “garbage out”.

The third concern is the shortage of AI experts who can build AI algorithms from the ground up and avoid blind spots in risk.

Conclusion:

Data quality is critical because AI depends heavily on it: “garbage in” leads to “garbage out”. The implication for government policymakers is that data laws should be a priority. Such laws should establish data quality standards that track the integrity of each data processing step and how the data was ethically sourced, much as food labels list ingredients and nutrients to safeguard the well-being of society.
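A data “food label” of the kind suggested above could, for example, record each processing step alongside a cryptographic fingerprint of the data it produced, so that later tampering can be detected. The sketch below is a hypothetical illustration using SHA-256 from Python's standard library; the step names, field names and sample records are assumptions, not an existing standard.

```python
import hashlib
import json

def fingerprint(records):
    """SHA-256 hash of the records, serialised deterministically."""
    payload = json.dumps(records, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()

def add_step(label, step_name, source_note, records):
    """Append a processing step and the fingerprint of its output to the label."""
    label.append({"step": step_name, "source": source_note,
                  "sha256": fingerprint(records)})
    return label

def verify(label, step_name, records):
    """Check that the records still match the fingerprint recorded for a step."""
    for entry in label:
        if entry["step"] == step_name:
            return entry["sha256"] == fingerprint(records)
    raise KeyError(f"no step named {step_name!r}")

# Hypothetical raw data; the cleaning step drops incomplete rows ("garbage in").
raw = [{"id": 1, "age": 34}, {"id": 2, "age": None}]
cleaned = [r for r in raw if r["age"] is not None]

label = []
add_step(label, "collect", "survey with informed consent (hypothetical)", raw)
add_step(label, "clean", "removed rows with missing age", cleaned)
print(verify(label, "clean", cleaned))  # True
```

Any change to the cleaned data after labelling changes its hash, so `verify` returns `False`, which is the kind of integrity tracking a data label is meant to provide.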

The algorithms between inputs and outputs are equally important, so algorithmic governance is vital to upholding ethical standards in AI deployment. Assessment tools and software based on AI principles should therefore be applied when designing algorithms and AI systems. AI systems should also be built with failure-detection mechanisms so that risks can be identified, quantified, prioritised and controlled.
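One simple failure-detection check of the kind described above is to monitor an AI system's decisions for disparities between groups. The sketch compares selection rates and raises an alert when the lowest rate is below four-fifths of the highest, a rule of thumb from US employment-selection guidance on adverse impact. The threshold, group names and decision log are illustrative assumptions.

```python
from collections import Counter

def selection_rates(decisions):
    """decisions: list of (group, selected) pairs -> selection rate per group."""
    totals = Counter(group for group, _ in decisions)
    selected = Counter(group for group, ok in decisions if ok)
    return {g: selected[g] / totals[g] for g in totals}

def impact_ratio(decisions):
    """Lowest group selection rate divided by the highest."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0

def check_for_failure(decisions, threshold=0.8):
    """Return an alert string if the impact ratio breaches the threshold, else None."""
    ratio = impact_ratio(decisions)
    if ratio < threshold:
        return (f"ALERT: impact ratio {ratio:.2f} below {threshold}; "
                "escalate for human review")
    return None

# Hypothetical decision log from an AI screening system.
log = ([("group_a", True)] * 30 + [("group_a", False)] * 20
       + [("group_b", True)] * 10 + [("group_b", False)] * 40)
print(check_for_failure(log))
```

Here group_a is selected at 0.6 and group_b at 0.2, giving a ratio of about 0.33, so the check raises an alert. In practice such an alert would feed the identify, quantify, prioritise and control cycle rather than trigger an automatic fix.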

Firms should establish strong partnerships with universities to foster research and innovation in responsible AI grounded in ethical principles, human rights, inclusion, diversity, innovation and economic growth. Government incentives are needed to train more AI experts in universities and to support AI research and development, so that the real economic gains of the AI industrial revolution can be captured. Firms can work with policymakers and universities to help produce an AI-ready workforce.

References:

British Telecom 2020, ‘Five key trends in Artificial Intelligence’, BT Behind the Technology, https://business.bt.com/content/dam/bt-business/pdfs/why-bt/insights/bt-behind-the-technology.pdf

Schuett, J 2023, ‘Risk management in the Artificial Intelligence Act’, European Journal of Risk Regulation.

Wirtz, BW, Weyerer, JC & Kehl, I 2022, ‘Governance of artificial intelligence: A risk and guideline-based integrative framework’, Government Information Quarterly, vol. 39, no. 4.