There should be no regulation of artificial intelligence. Here’s why.

These days, in the sphere of public debate, there’s a rule I try to remember: if I come across the same idea twice in a week, it’s probably a bad idea. If I see it a third time, it’s surely a terrible one. Ideas that are easily repeated are too simple to do justice to a nuanced problem.

At the moment, there are a lot of bad ideas circulating on the topic of regulating artificial intelligence.

What seems extraordinary is that people who should know better are leading the charge for these bad ideas. People like Max Tegmark, physicist and author of the bestselling book Life 3.0, or Geoffrey Hinton, the AI scientist nicknamed the “Godfather of AI”.

They and others are sprinting after Elon Musk, who until recently was running solo. It’s as if they were playing a futuristic inversion of Pascal’s wager, covering their bases just in case an AI God turns out to exist, however infinitesimal the chances.

I will explain here why, for the time being, AI should not be regulated: there is no present reason to regulate it, and the arguments for regulation are ill-thought-out, misconceived and, frankly, unintelligent.

If you’re interested in AI and the financial markets, consider joining us at Sharestep, a platform that transforms the stock market into a marketplace of ideas.

Reason 1: it’s impossible

For a start, regulating AI would mean regulating an industry that doesn’t exist yet. For all the online noise, what we have at the time of writing is one impressive prototype, GPT-4; another, Google Bard, which doesn’t work; and an endless stream of online accounts promising the art of the possible. There’s also Midjourney, an image generator run through a Discord server, which produces gamer art when the server isn’t crashing. There is no industry to regulate. How do you regulate something that doesn’t exist?

Cast your mind back to the dawn of social media, circa 2004, and recall the first version of Facebook. It offered a profile page, the ability to befriend someone, the ability to “poke” others, and that’s about it. There was no news feed, no instant messaging, no notifications, no ads, no video, no streams, no shorts, nothing.

Back then, nobody knew how social media products would evolve. And that’s where we are now with intelligent technology. No one knows what AI products will look like in ten years, and since none of us can foretell the future, none of us can say which regulations will in the end be useful.

Image courtesy of marketoonist.com

Reason 2: regulatory capture and corporate favouritism

Regulation is a function of society that, at its best, stops people from swindling and harming one another. But although society cannot function well without some regulation of industry, this isn’t the full picture.

At its worst, regulation shields politically favoured companies from competition. In other words, those companies acquire a regulatory moat. The protected companies are almost always large corporations. Regulatory barriers to entry are erected, and smaller enterprises struggle to enter the market, even when they have competitive solutions.

This is how large banks, energy companies, telcos and pharmaceutical companies survive decade after decade, in spite of bloated operations, the inefficiencies of centralisation, and profit capture by senior management and shareholders.

Think about it: banking, for example, is not a resource-intensive industry. It’s essentially a data management service. So why do so few new banks join the playing field each decade?

With a leaked internal Google memo lamenting that neither Google nor OpenAI has a “moat”, one has to wonder why companies like Microsoft and OpenAI, usually averse to regulation, appear to be pushing so aggressively for it in AI.

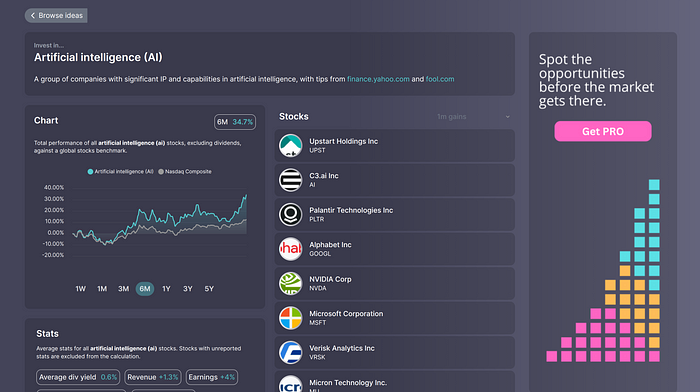

Regulating AI risks cementing large corporations as long-term gatekeepers of the technology. Megacap AI stocks have been rising since recent breakthroughs. Find out more with Sharestep

Megacap AI stocks have been rising since recent breakthroughs. Find out more with Sharestep

Reason 3: the plausible equals inevitable fallacy

There are some very intelligent people leading the digital industries. But introverted folks are often seduced by what could happen, at the expense of what is likely to happen. This breeds a style of paranoid, sci-fi thinking: accepting unlikely tales as if they were probable, or even inevitable. No technology has received more of this treatment than AI.

For instance, several years ago the tech community had a collective breakdown over a thought experiment known as Roko’s Basilisk. Without going into details, Roko’s Basilisk makes the logical case for a future AI that will torture virtual copies of us for all eternity.

This paranoid style is on display amongst top public figures in the industry. I recently heard Max Tegmark and, a few days later, Sam Altman (CEO of OpenAI) speaking anxiously about Moloch, a Canaanite deity associated in the Hebrew Bible with child sacrifice, and about how its mythology relates to artificial intelligence.

The founder of this brand of elite, sci-fi paranoia is probably Nick Bostrom, a philosopher at Oxford University. In his book Superintelligence, he wrote about the paperclip maximiser. In this scenario, a superintelligent AI is tasked with the sole aim of producing paperclips. It is so focused on the task, and so effective, that it transforms the entire surface of the earth into paperclips, ending humanity and all life on earth.

I propose that there is a flaw in this style of reasoning. Let’s call it the plausible equals inevitable fallacy. You can generalise it like this:

- Imagine any fantastic scenario. Let’s call it X.

- Work backwards to imagine a plausible narrative by which X could happen.

- Now that you’ve imagined a way that X could happen, decide that it will happen.

Let’s give it a try. I’m writing this from my kitchen at the moment. Many objects surround me. The one that catches my eye is the electric toaster. Is there a scenario by which electric toasters could lead to catastrophe for humanity, maybe for life on earth?

Well, perhaps not as they are now, but electric toasters could evolve. Indeed, it’s not out of the question that toasters in future could be given more control over breakfast. It isn’t hard to imagine a toaster that, for example, could slice the sourdough itself. And, for that matter, a toaster that you could program to produce toast at a set time in the morning. After all, it would be pretty cool to have your toast ready when you enter the kitchen after waking.

But what if some of the cheaper models had flaws? What if they began spitting smouldering toast into the kitchen in the middle of the night? These kinds of accidents could lead to house fires. In densely populated developments, such accidents could lead to huge loss of life. In fact, it isn’t out of the question that entire cities could burn to the ground…

Of all the concerns surrounding AI, the following two are most frequently raised: mass dissemination of harmful propaganda, and chaotic rises in unemployment. I suggest that these are also instances of the plausible equals inevitable fallacy.

At the end of the day, almost anything can plausibly happen. But most of the things we imagine could happen are extremely unlikely to happen. The future almost never turns out as we expected.

Tech leaders have been debating the role of Moloch in AGI. Image courtesy of allthatsinteresting.com

Reason 4: there is no present need to regulate AI

Finally, and crucially, there is no present reason to come down hard on AI with regulation. Take a look around you. Nothing has happened. No lives have been destroyed, no countries torn down, no jobs lost. At this moment, all we have is a successful chatbot. This may change in future, and we should certainly be vigilant. But it’s also wise to be wary of hysteria.

So what should we do with AI?

Let’s sign off with two useful things we could do right now to ease AI technology into society.

Digital royalties for creators. This includes artists, photographers, writers, musicians, translators, researchers, open-source programmers, and others who produce valuable information for a living.

Stealing their material, repackaging it in AI models, and selling it for a profit is deeply immoral. We must correct this. Moreover, incentives need to be in place so that creators continue to have reason to produce art, knowledge and science. Without them, in the end, there can be no AI models.

Keep the apps away from the children. It is baffling that we still give children unrestricted access to smartphones. Many smartphone apps are, essentially, highly addictive digital drugs. And yet, we happily expose young children to them.

One useful step would be to classify smartphones as adult products, alongside alcohol, tobacco and gambling. This would protect developing brains from the attention-monopolising products that have evolved over the last twenty years, and prevent children from developing patterns of addiction and depression.

Let’s not waste time. We have some catching up to do with the regulation of digital technology. Let’s get the basics done first. They’re overdue.

If you liked this article, pay us a visit at Sharestep. We’re a small startup focused on helping people to translate insights about the world into concrete investment opportunities.

Anyway, thanks for reading, and see you next time.