The Rise of Enhanced YouTube Shorts: Exploring Automated Narratives

Elevating YouTube Shorts: A Symphony of Creativity Unleashed

As a perpetual generator of creative sparks, I always have a plethora of fresh project concepts dancing in my mind. In my latest venture, I found myself captivated by the surge of automated YouTube Shorts: Reddit narratives read aloud over the captivating backdrop of Minecraft gameplay. The format was undoubtedly innovative, but I sensed untapped potential for enhancement.

In the realm where content reigns supreme and efficiency is the linchpin, an idea began to germinate within me. Why not elevate these Shorts to a whole new level? Thus, my mission unfolded: replace the mundane Minecraft canvas with AI-generated visuals tailored to each post, coupled with top-tier voice synthesis.

The Execution Blueprint

The game plan was both straightforward and enthralling: build a system that autonomously produces YouTube Shorts by chaining together APIs and cutting-edge tools. Reddit, a treasure trove of limitless topics, supplied the source material for these short narratives. The vibrant visuals came from Replicate’s Stable Diffusion API, a powerhouse for crafting high-resolution images. The voiceovers were generated with 11Labs’ voice generation technology. The final tapestry was woven together with moviepy, and a connection to YouTube’s API took care of publishing. In the blink of an eye, a revitalized rendition of YouTube Shorts emerged.

The Creative Assembly Line

Delving into the Depths of Reddit Narratives

Our automation machinery began its rhythmic hum with the first gear: extracting riveting tales from the depths of Reddit. Armed with PRAW, the Python Reddit API Wrapper, I connected to the Reddit API using my app’s credentials. My subreddit of choice? The hauntingly alluring r/shortscarystories, a perfect reservoir of bite-sized thrillers that fit my vision for YouTube Shorts.

With the connection in place, posts came cascading in like a digital waterfall. To handle the influx, I used a batch retrieval strategy, collecting N posts per iteration until I reached the desired count.
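The batch loop described above can be sketched with PRAW roughly as follows. This is a minimal sketch, not the project’s actual code: the helper names (`batched`, `collect_posts`, `fetch_submissions`), the default batch size, and the use of the subreddit’s `hot` listing are all assumptions, since the post doesn’t say which sort order it used. PRAW already pages through Reddit listings internally, so the batching here only mirrors the "N posts per iteration" idea and keeps the stopping logic testable offline.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def batched(items: Iterable[T], batch_size: int) -> Iterator[List[T]]:
    """Yield successive lists of up to batch_size items from any iterable."""
    iterator = iter(items)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def collect_posts(items: Iterable[T], desired_count: int, batch_size: int) -> List[T]:
    """Pull batches until desired_count posts are collected or the source runs dry."""
    collected: List[T] = []
    for batch in batched(items, batch_size):
        collected.extend(batch)
        if len(collected) >= desired_count:
            break
    return collected[:desired_count]


def fetch_submissions(client_id: str, client_secret: str, user_agent: str,
                      subreddit: str = "shortscarystories",
                      desired_count: int = 50, batch_size: int = 10) -> list:
    """Hypothetical wrapper: read-only PRAW client -> list of Submission objects."""
    import praw  # lazy import so the pure helpers above work without PRAW installed

    reddit = praw.Reddit(client_id=client_id, client_secret=client_secret,
                         user_agent=user_agent)
    # limit=None lets PRAW keep paging (Reddit caps listings at roughly 1,000 items)
    listing = reddit.subreddit(subreddit).hot(limit=None)
    return collect_posts(listing, desired_count, batch_size)
```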

Crafting Data Alchemy: Transforming Harvested Gems into a Digital Tapestry

Next, the harvested data underwent a metamorphosis akin to alchemical transmutation. Each post extracted from Reddit was molded into a dictionary recording its title, content, timestamp, upvote count, and number of comments, capturing both the essence of the post and the narrative woven in the Reddit realm.

This burgeoning compendium of data, a modern-day grimoire, did not stay in its primal form. It was converted into a structured DataFrame, not as a cosmetic change but as a strategic maneuver that made the forthcoming analysis and manipulation straightforward, a canvas where each data point could harmonize in a symphony of insights.

In this alchemical process, the once-scattered fragments of information found cohesion, creating a digital masterpiece ready for the unveiling of hidden patterns and revelations. The DataFrame, a testament to the fusion of creativity and analytical precision, stood as the cornerstone of the next phase in this visionary endeavor.

Final DataFrame for r/shortscarystories
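A sketch of the dictionary-then-DataFrame step, assuming PRAW `Submission` attributes (`title`, `selftext`, `created_utc`, `score`, `num_comments`) and pandas for the DataFrame. The column names and helper functions are hypothetical stand-ins for whatever the original code used.

```python
from datetime import datetime, timezone
from typing import Iterable, List

COLUMNS = ["title", "content", "timestamp", "upvotes", "num_comments"]


def submission_to_record(submission) -> dict:
    """Map a PRAW Submission (or any object with the same attributes) to a plain dict."""
    return {
        "title": submission.title,
        "content": submission.selftext,  # body text of a self post
        "timestamp": datetime.fromtimestamp(submission.created_utc, tz=timezone.utc),
        "upvotes": submission.score,
        "num_comments": submission.num_comments,
    }


def build_records(submissions: Iterable) -> List[dict]:
    """One dictionary per post, in the order the posts were fetched."""
    return [submission_to_record(s) for s in submissions]


def to_dataframe(records: List[dict]):
    """Turn the list of dicts into a pandas DataFrame with a fixed column order."""
    import pandas as pd  # lazy import keeps the record helpers dependency-free

    return pd.DataFrame.from_records(records, columns=COLUMNS)
```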

Crafting Allure: Titles and Descriptions Unveiled

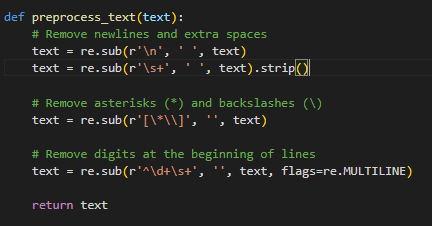

Once the posts were collected, it was time to focus on captivating titles and compelling descriptions for our YouTube Shorts. These components play a crucial role in hooking viewers and telling a story within tight length limits. Every narrative was first cleaned up so it could feed smoothly into the rest of the content creation process. Our aim was not just to write titles and descriptions, but to give them a magnetic appeal that draws viewers into the immersive universe of each YouTube Short, with each title and description acting as a gateway to a carefully crafted creative world.

Pre-Processing Function

Key housekeeping tasks included:

- Ousting newlines and redundant spaces

- Banishing special characters like asterisks (*) and slashes (/)

- Addressing digits at line starts
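These cleanup rules can be expressed as a few regular expressions. This is a hedged sketch: `preprocess_text` is a hypothetical name, and "digits at line starts" is interpreted here as list numbering such as `1.` or `2)` followed by whitespace, so a sentence like "3 days later" keeps its number. The leading-number rule has to run before newlines are collapsed, otherwise there are no line starts left to match.

```python
import re

# Numbered-list prefixes such as "1. " or "2) " at the start of a line (assumed meaning)
LEADING_NUMBER = re.compile(r"^\s*\d+[.)]\s+", flags=re.MULTILINE)
# Asterisks plus forward and back slashes
SPECIAL_CHARS = re.compile(r"[*/\\]")
# Any run of whitespace, including newlines
WHITESPACE = re.compile(r"\s+")


def preprocess_text(text: str) -> str:
    """Clean Reddit post text before it is sent to GPT-4 or the voice API."""
    text = LEADING_NUMBER.sub("", text)  # must run before newlines are collapsed
    text = SPECIAL_CHARS.sub("", text)
    text = WHITESPACE.sub(" ", text)  # newlines and repeated spaces -> single space
    return text.strip()
```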

With the text cleaned, GPT-4 took over, churning out titles and descriptions for our Shorts. Iteratively refining the prompts brought the output in line with the engaging style of YouTube Shorts titles.
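Here is one way the GPT-4 call might look, assuming the `openai` Python package version 1.x (`client.chat.completions.create`); the original project may well have used the older `openai.ChatCompletion` interface. Both prompt strings are hypothetical, since the post doesn’t share the wording it settled on. Passing `client` explicitly makes it easy to swap in a stub for testing.

```python
from typing import Any, Dict, List, Optional

# Hypothetical prompts; the post does not publish the exact wording it used.
TITLE_PROMPT = ("Write one catchy YouTube Shorts title (under 70 characters) "
                "for the following short scary story. Reply with the title only.")
DESCRIPTION_PROMPT = ("Write a two-sentence YouTube Shorts description for the "
                      "following short scary story. Do not reveal the ending.")


def build_messages(instruction: str, story: str) -> List[Dict[str, str]]:
    """Chat Completions message list: instruction as system, story as user."""
    return [
        {"role": "system", "content": instruction},
        {"role": "user", "content": story},
    ]


def ask_gpt(instruction: str, story: str, client: Optional[Any] = None,
            model: str = "gpt-4") -> str:
    """Send one request and return the trimmed text of the first choice."""
    if client is None:
        from openai import OpenAI  # openai>=1.0; reads OPENAI_API_KEY from the environment
        client = OpenAI()
    response = client.chat.completions.create(
        model=model, messages=build_messages(instruction, story))
    # Models often wrap titles in quotes, so strip those as well as whitespace
    return response.choices[0].message.content.strip().strip('"')


def make_title_and_description(story: str, client: Optional[Any] = None) -> Dict[str, str]:
    """Two separate requests, one per field."""
    return {
        "title": ask_gpt(TITLE_PROMPT, story, client),
        "description": ask_gpt(DESCRIPTION_PROMPT, story, client),
    }
```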

Crafting Image Prompts for AI Artistry: The Secret Sauce

An indispensable part of AI-driven content assembly, image prompts truly set this YouTube Shorts creation process apart. As the creative springboard, these prompts pave the way for producing visually rich elements, like images and thumbnails, that give an edge to my Shorts. Acting as the guiding light, image prompts enable AI models like Stable Diffusion to produce visuals that go hand in hand with the spirit of the content.

The code is used twice:

Image Prompts: Generates six short, descriptive prompts for image generation, based on a provided video script.

Thumbnail Prompts: Creates a single, concise prompt for generating video thumbnails, all while ensuring content remains safe and engaging.

The final prompt used was:

Generate 6 short one liner prompts for DALLE-2 / Midjourney / Stable Diffusion prompt image generation. The prompt I give will be the script for a video. Prompts should be very short but descriptive with no NSFW content.
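The quoted prompt can serve both jobs if the count becomes a parameter: 6 for scene images and 1 for the thumbnail. In this sketch the model’s reply is assumed to come back as a numbered or bulleted list, and `parse_prompt_list` (a hypothetical helper) strips the list markers and skips any introductory line ending in a colon. The OpenAI call again assumes the 1.x SDK.

```python
import re
from typing import Any, List, Optional

# The prompt quoted above, with the count turned into a parameter.
PROMPT_TEMPLATE = (
    "Generate {n} short one liner prompts for DALLE-2 / Midjourney / Stable Diffusion "
    "prompt image generation. The prompt I give will be the script for a video. "
    "Prompts should be very short but descriptive with no NSFW content."
)

# "1." / "2)" numbering or "-" / "*" bullets at the start of a line
_LIST_PREFIX = re.compile(r"^\s*(?:\d+[.)]|[-*])\s*")


def parse_prompt_list(reply: str, expected: int) -> List[str]:
    """Split a numbered or bulleted model reply into clean one-line prompts."""
    prompts = []
    for line in reply.splitlines():
        line = _LIST_PREFIX.sub("", line).strip().strip('"')
        if line and not line.endswith(":"):  # skip blanks and "Here are...:" preambles
            prompts.append(line)
    return prompts[:expected]


def generate_image_prompts(script: str, n: int = 6, client: Optional[Any] = None,
                           model: str = "gpt-4") -> List[str]:
    """n=6 for scene images, n=1 for the thumbnail."""
    if client is None:
        from openai import OpenAI  # openai>=1.0 client (assumed SDK version)
        client = OpenAI()
    response = client.chat.completions.create(
        model=model,
        messages=[
            {"role": "system", "content": PROMPT_TEMPLATE.format(n=n)},
            {"role": "user", "content": script},
        ],
    )
    return parse_prompt_list(response.choices[0].message.content, n)
```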

Image Generation with Stable Diffusion

Next on the assembly line, I used the Stable Diffusion API from Replicate to craft images. This API uses a diffusion model to create visually striking images that complemented the narrative perfectly.

Creating images for engaging vertical video content came with its own set of challenges, especially when relying on models like DALL·E and Midjourney:

- Aspect Ratio Mismatch: DALL·E and Midjourney were primarily designed for standard image generation, which typically follows a 1:1 aspect ratio. When applied to vertical videos, these models may produce images that are ill-fitted to the vertical format.

- Engagement: Short-form content like Shorts relies on captivating visuals to maintain viewer engagement. In my experience, Stable Diffusion’s ability to produce visually striking images was far beyond that of DALL·E or Midjourney.

The function generate_stablediffusion_image takes an image prompt as input and uses specific parameters to create images, including dimensions, number of outputs, and guidance scale. For thumbnails, one image was generated, while for the main content, a series of prompts inspired by Reddit post titles were used.

Image Generation Function
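A possible shape for `generate_stablediffusion_image`, assuming the `replicate` Python client and input fields named `prompt`, `width`, `height`, `num_outputs`, and `guidance_scale` as on Replicate’s `stability-ai/stable-diffusion` model page; check the model’s schema, since field names and allowed sizes vary between models and versions. The 576x1024 size is an assumption: it is exactly 9:16 and both sides are multiples of 64, a safe choice for Stable Diffusion. The `model_ref` argument must include the version hash from Replicate, which is not given here, and the guidance scale of 7.5 is a common default rather than a value from the post.

```python
from typing import Any, Dict, Tuple


def vertical_size(height: int = 1024, ratio: Tuple[int, int] = (9, 16),
                  multiple: int = 64) -> Tuple[int, int]:
    """Width/height for a portrait frame, with the width snapped to a multiple of 64."""
    w_ratio, h_ratio = ratio
    width = round(height * w_ratio / h_ratio / multiple) * multiple
    return width, height


def build_sd_input(prompt: str, num_outputs: int = 1,
                   guidance_scale: float = 7.5) -> Dict[str, Any]:
    """Model input: dimensions, number of outputs, and guidance scale."""
    width, height = vertical_size()
    return {
        "prompt": prompt,
        "width": width,
        "height": height,
        "num_outputs": num_outputs,
        "guidance_scale": guidance_scale,
    }


def generate_stablediffusion_image(prompt: str, model_ref: str, num_outputs: int = 1,
                                   guidance_scale: float = 7.5) -> list:
    """Run the model on Replicate.

    model_ref is "owner/model:version"; copy the version hash from the model page.
    Requires the REPLICATE_API_TOKEN environment variable.
    """
    import replicate  # lazy import: only needed for the actual API call

    output = replicate.run(model_ref,
                           input=build_sd_input(prompt, num_outputs, guidance_scale))
    # Pre-1.0 clients return a list of URL strings; 1.x clients return file objects
    return list(output)
```

One call with `num_outputs=1` covers the thumbnail; the scene images are one call per prompt.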

The code then systematically saved generated images to a specified directory. This included the thumbnail and a set of sequentially named images based on the Reddit post titles.

Saving Images to Folder
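Saving could look like this: a minimal sketch that downloads each output URL with the standard library and names the files after a slug of the post title plus a zero-padded index, so they sort in scene order. The naming scheme and the helpers `slugify`, `image_paths`, `save_images`, and `save_thumbnail` are hypothetical, and the sketch assumes the image generator returned plain URLs.

```python
import os
import re
import urllib.request
from typing import List


def slugify(title: str, max_len: int = 40) -> str:
    """Lowercase, filesystem-safe version of a post title."""
    slug = re.sub(r"[^a-z0-9]+", "_", title.lower()).strip("_")
    return slug[:max_len].rstrip("_") or "untitled"


def image_paths(folder: str, title: str, count: int) -> List[str]:
    """Sequential names such as <folder>/<slug>_01.png, <slug>_02.png, ..."""
    slug = slugify(title)
    return [os.path.join(folder, f"{slug}_{i:02d}.png") for i in range(1, count + 1)]


def download(url: str, path: str) -> str:
    urllib.request.urlretrieve(url, path)
    return path


def save_images(urls: List[str], folder: str, title: str) -> List[str]:
    """Download the scene images in order and return their local paths."""
    os.makedirs(folder, exist_ok=True)
    return [download(u, p) for u, p in zip(urls, image_paths(folder, title, len(urls)))]


def save_thumbnail(url: str, folder: str, title: str) -> str:
    """Store the thumbnail separately so it is not mixed into the scene sequence."""
    os.makedirs(folder, exist_ok=True)
    return download(url, os.path.join(folder, f"{slugify(title)}_thumbnail.png"))
```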

Synthesizing Voice with 11Labs

I used 11Labs' voice generation API to make our videos more personal.

Audio Generation Function

The core process is handled by the generate function, which converts preprocessed Reddit post content into human-like speech. I chose the voice "Nicole" and the model "eleven_monolingual_v1" for natural-sounding narration. The code then saves each generated audio file under a consistently organized filename.
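A sketch of the narration step, assuming the pre-1.0 `elevenlabs` Python SDK, whose module-level `generate` and `save` functions match the generate function described above (newer SDK releases replaced them with a client object). The voice and model names come from the post; the `NNN_title.mp3` naming scheme and the helper names are hypothetical, and the API key must be configured beforehand (for example with `set_api_key`).

```python
import os
import re


def audio_path(folder: str, index: int, title: str) -> str:
    """Predictable name like <folder>/003_the_last_call.mp3 so files sort in post order."""
    slug = re.sub(r"[^a-z0-9]+", "_", title.lower()).strip("_")[:40] or "untitled"
    return os.path.join(folder, f"{index:03d}_{slug}.mp3")


def generate_voiceover(text: str, folder: str, index: int, title: str) -> str:
    """Narrate preprocessed post text with the "Nicole" voice and save it as MP3."""
    # Pre-1.0 elevenlabs SDK interface (module-level generate/save)
    from elevenlabs import generate, save

    audio = generate(text=text, voice="Nicole", model="eleven_monolingual_v1")
    os.makedirs(folder, exist_ok=True)
    path = audio_path(folder, index, title)
    save(audio, path)
    return path
```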

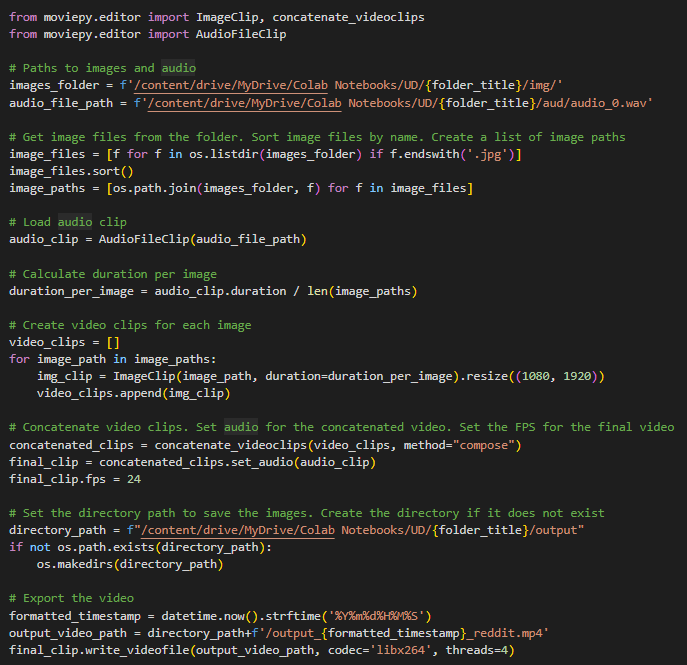

Stitching Videos with moviepy

With the narrative, images, and voiceover ready, the next step was to stitch them together into a video. For this, I used moviepy, a versatile tool that can handle a wide range of video, audio, and image formats.

Here I load all the generated images and the audio voiceover from their respective folders. I then calculate how long to show each image by dividing the total video length by the number of images. Finally, I set the clip’s frame rate, concatenate all the clips, and write the final video to the filesystem.

Final Video Stitching Code
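The stitching steps map onto moviepy roughly as follows. This sketch assumes the moviepy 1.x API (`moviepy.editor`, `set_duration`, `set_audio`, `set_fps`); moviepy 2.x changed the import path and renamed these to `with_*` methods. The function names, the 24 fps frame rate, and the codec choices are assumptions, not values from the post.

```python
from typing import List


def seconds_per_image(total_duration: float, image_count: int) -> float:
    """Split the video length evenly across the images."""
    if image_count <= 0:
        raise ValueError("need at least one image")
    return total_duration / image_count


def stitch_video(image_files: List[str], audio_file: str, output_file: str,
                 fps: int = 24) -> None:
    """Slideshow of image_files (in display order) timed to the voiceover."""
    from moviepy.editor import AudioFileClip, ImageClip, concatenate_videoclips

    narration = AudioFileClip(audio_file)
    per_image = seconds_per_image(narration.duration, len(image_files))
    clips = [ImageClip(path).set_duration(per_image) for path in image_files]
    video = concatenate_videoclips(clips, method="compose").set_audio(narration)
    video = video.set_fps(fps)  # the final clip's frame rate
    video.write_videofile(output_file, codec="libx264", audio_codec="aac")
```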

Since most Shorts show the voiceover text on screen, I did something similar: I took the video script and used some OpenCV magic to put the words on screen. There’s a hitch, though. The words don’t match up with the voiceover. Their timing is based only on the total number of words and the length of the video, so every word gets an equal slice of screen time. I’m still scratching my head on this one, so if you know a cooler workaround, hit me up.

Adding Captions to Video
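The equal-time captioning described above can be sketched with OpenCV: each word is shown for `total_frames / number_of_words` frames, which is exactly why it drifts out of sync with the narration. The function names, font, size, and placement are hypothetical choices. Note that `cv2.VideoWriter` writes frames only, so the voiceover has to be added back afterwards, for instance with moviepy.

```python
from typing import List


def word_for_frame(frame_index: int, total_frames: int, words: List[str]) -> str:
    """Equal-time mapping: every word gets total_frames / len(words) frames."""
    if not words or total_frames <= 0:
        return ""
    position = min(frame_index * len(words) // total_frames, len(words) - 1)
    return words[position]


def burn_captions(video_in: str, video_out: str, script: str) -> None:
    """Draw one word at a time, centred near the bottom of each frame (no audio in output)."""
    import cv2

    words = script.split()
    cap = cv2.VideoCapture(video_in)
    fps = cap.get(cv2.CAP_PROP_FPS)
    total = int(cap.get(cv2.CAP_PROP_FRAME_COUNT))  # container estimate; clamped below
    width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
    height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
    writer = cv2.VideoWriter(video_out, cv2.VideoWriter_fourcc(*"mp4v"), fps, (width, height))
    font, scale, thickness = cv2.FONT_HERSHEY_SIMPLEX, 2.0, 5
    index = 0
    while True:
        ok, frame = cap.read()
        if not ok:
            break
        word = word_for_frame(index, total, words)
        (text_w, _), _ = cv2.getTextSize(word, font, scale, thickness)
        origin = ((width - text_w) // 2, int(height * 0.8))
        # Black outline first, then white fill, for legibility over busy images
        cv2.putText(frame, word, origin, font, scale, (0, 0, 0), thickness + 4, cv2.LINE_AA)
        cv2.putText(frame, word, origin, font, scale, (255, 255, 255), thickness, cv2.LINE_AA)
        writer.write(frame)
        index += 1
    cap.release()
    writer.release()
```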

Broadcasting to YouTube with YouTube API

The final step was to push the videos to YouTube. Using the YouTube API, I automated the upload process, including setting the title, description, and tags.

Short Upload Function
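An upload along these lines is possible with the YouTube Data API v3 through `google-api-python-client`. This is a minimal sketch: the helper names, the default category (`"22"`, People & Blogs), and the `private` privacy status are assumptions. The optional `channel_id` mirrors the fix described in the channel-ownership roadblock; if it doesn’t take effect, keep in mind that uploads go to the channel chosen on the OAuth consent screen, so re-authorizing with the new channel selected is the fallback.

```python
from typing import Any, Dict, List, Optional


def build_upload_body(title: str, description: str, tags: List[str],
                      category_id: str = "22", privacy: str = "private",
                      channel_id: Optional[str] = None) -> Dict[str, Any]:
    """Request body for videos.insert (YouTube Data API v3)."""
    snippet: Dict[str, Any] = {
        "title": title[:100],  # YouTube titles are limited to 100 characters
        "description": description,
        "tags": tags,
        "categoryId": category_id,  # "22" is People & Blogs
    }
    if channel_id:
        # Extra channel ID in the body, as described in the post
        snippet["channelId"] = channel_id
    return {
        "snippet": snippet,
        "status": {"privacyStatus": privacy, "selfDeclaredMadeForKids": False},
    }


def upload_short(youtube, video_file: str, body: Dict[str, Any]) -> str:
    """Resumable upload; youtube comes from googleapiclient.discovery.build("youtube", "v3", ...)."""
    from googleapiclient.http import MediaFileUpload

    request = youtube.videos().insert(
        part="snippet,status",
        body=body,
        media_body=MediaFileUpload(video_file, chunksize=-1, resumable=True),
    )
    response = None
    while response is None:
        _, response = request.next_chunk()  # (status, None) until the upload completes
    return response["id"]
```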

I hit two big roadblocks here:

Channel Ownership and Uploading Precision

First up was the question of channel ownership and where exactly my Shorts were landing. I had created a new channel for these Shorts, but it was linked to my personal account, so the uploads ended up on my personal channel. The solution was to confirm the ID of the new channel and pass it as an additional parameter in the request body.

OAuth 2.0 Verification in Google Colab

The second problem was authenticating from Google Colab, specifically the OAuth 2.0 verification. Google Colab is useful for lots of tasks, but when it comes to authenticating with external services like YouTube, not so much. The OAuth 2.0 flow required a localhost redirect link, and because Colab runs on a remote server, that wasn’t happening. My fix was to run the code on my own machine, get a token through the browser (Firefox), and then use that token in my Colab script.
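The local-token workaround might look like this with `google-auth-oauthlib` and `google-auth`: run `create_token_locally` on your own machine, where the browser can reach the localhost redirect, then upload `token.json` to Colab and call `youtube_client_from_token` there. The file name, the upload-only scope, and the helper names are assumptions. Checking for a refresh token matters because the access token is short-lived; with a refresh token, the Colab side can renew it without a browser.

```python
import json
import os

SCOPES = ["https://www.googleapis.com/auth/youtube.upload"]


def create_token_locally(client_secrets_file: str, token_file: str = "token.json") -> None:
    """Run on a local machine: opens the browser consent page, then saves the credentials."""
    from google_auth_oauthlib.flow import InstalledAppFlow

    flow = InstalledAppFlow.from_client_secrets_file(client_secrets_file, SCOPES)
    creds = flow.run_local_server(port=0)  # the localhost redirect Colab cannot serve
    with open(token_file, "w") as f:
        f.write(creds.to_json())


def has_refresh_token(token_file: str) -> bool:
    """Without a refresh_token, a copied token stops working once the access token expires."""
    if not os.path.exists(token_file):
        return False
    with open(token_file) as f:
        return bool(json.load(f).get("refresh_token"))


def youtube_client_from_token(token_file: str = "token.json"):
    """Run in Colab after uploading token.json: rebuild credentials without a browser."""
    from google.auth.transport.requests import Request
    from google.oauth2.credentials import Credentials
    from googleapiclient.discovery import build

    creds = Credentials.from_authorized_user_file(token_file, SCOPES)
    if not creds.valid and creds.refresh_token:
        creds.refresh(Request())  # renew the expired access token
    return build("youtube", "v3", credentials=creds)
```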

The Product

And there we go: the last piece of the puzzle, the finish line, my unique take on Shorts. A few hurdles and some trial and error later, I’ve managed to fill up my new channel with these Shorts, some accompanied by captions, though not perfectly synced just yet.

I also navigated the tricky path of authentication, taking a brief detour to run things locally before getting back to my trusty platform, Google Colab. So here it is: a fresh batch of Shorts, nicely lined up on the new channel. Of course, there’s always room for tweaking and improvement, but this is what I’ve got at the moment. If you have any suggestions or ideas, feel free to share. Let’s keep making the digital world even more fun, one Short at a time!

You’ll find the link to my GitHub and YouTube channel where I’ve uploaded the code and the Shorts, as well as a couple of individual Shorts below. I’ve linked two Shorts, one featuring on-screen captions and the other without.

What’s Next?

The success of this YouTube Shorts automation project opens up an exciting vista of possibilities. The truth is, automated content generation isn’t the future; it’s the present. It’s already shaping how content gets created and pushing the boundaries of what’s possible.

Next in the pipeline, my key objective is to fine-tune the synchronization between the voiceover and the on-screen captions to further improve the viewing experience.

If you have any burning questions or you’re curious about exploring the codebase, don’t hesitate to give me a shout. Let’s plunge together into this thrilling world of automation!