The SourceLess Vision: Building a Safe and Free Digital Future for All

The Price of Free: Why Your Data Is the Real Currency

We’re not paying for our social media platforms, and we’re not paying to browse the internet, right? Wrong. We are paying, just not with money.

Every time you sign up for an app, you click through a privacy policy and terms of service that most people never read. In doing so, you’re making a transaction: your personal data in exchange for access to a service. It’s a trade so normalized that we’ve stopped seeing it as a trade at all. But it is. And only now are we starting to understand its actual cost.

In an interview with the TechTalk channel, Ron De Jesus, former Chief Privacy Officer at Grindr and Tinder, puts it bluntly: “A lot of the times people tend to forget that they are paying for services with their data. When we’re signing up for an app (Instagram, whatever the case may be), we’re clicking through those privacy policies and terms of service and we are essentially giving up large swaths of data in order to utilize those services.”

This wasn’t always the model. In the early days of tech, companies collected data to improve products. More information meant better recommendations, faster searches, smarter algorithms. It made sense. Somewhere along the way, though, the logic shifted: “we need this data to serve you better” turned into “the more data we collect, the more we can sell.”

De Jesus describes what he witnessed at major platforms: “The teams I’ve worked with internally at these apps, it’s the more data the better. Maybe I need this five-year-old data to develop this new feature. It’s this sense of the more data I collect about someone, the better decisions I can make to sell them products and services.”

A chess game doesn’t need to know your location, just as a messaging app doesn’t need your browsing history and a photo-sharing platform doesn’t need your biometric data. But they collect it anyway. Because they can. Because we accept it.

The Side Effects of Complacency

Most people are too busy dealing with everyday life to also deal with these things. Others have simply given up, saying: “Everything’s already out there. My data is already gone. What’s the point?” And yes, that’s a seductive argument. Why fight a losing battle? Why read privacy policies if the damage is already done?

This attitude is dangerous and self-fulfilling. When we, as consumers, stop caring, companies get a green light and stop listening altogether. When no one complains about dark patterns and exploitative practices, companies have no incentive to change.

Complacency becomes permission.

But you have rights. Real, enforceable rights.

In the European Union, the Digital Services Act became fully applicable in 2024. Twenty U.S. states now have privacy laws that give consumers the right to access what companies have collected about them, delete their personal information, and opt out of data sales. California’s law, for example, lets you request a copy of the personal information that Meta, Google, or any other covered business holds about you. One Tinder user who made a similar request was shocked at the volume: years of data, including preferences, conversations, and location history, all meticulously catalogued. Meanwhile, U.S. regulators are pursuing antitrust cases against Google, Meta, and Amazon (the Justice Department against Google, the FTC against Meta and Amazon), and Congress has been drafting federal privacy legislation.

The Illusion of Choice

Even when companies do offer privacy controls, they’re designed to obscure rather than clarify. You get a cookie banner with two options: “Accept All” or “Reject All.” There’s no middle ground. No granularity. The easier path is always “Accept All.” The harder path (reading through settings, toggling individual permissions, understanding what each one means) is deliberately made tedious.

De Jesus advocates for a different model: “It’s a sense of granularity. I don’t have to give you my universe of information. You don’t need my SSN to let me play a crossword puzzle. It’s having the sense of what is actually required for the service and giving me that control around the personal information that I want to divulge.”

Beyond all that, over the past year a cascade of very public failures, from search-function glitches and sudden outages to outright bans, has reminded us that the internet we rely on is a service, at times a faulty one, that we shouldn’t blindly trust.

The real problem is that a handful of companies control the infrastructure. They decide what you can see and what you can say. They decide who gets access to your information and who doesn’t. And when they change the rules, there’s not much you can do about it.

This can be devastating for online creators who have put years of work into building their audiences. Then the algorithm changes, the policy shifts, or the account gets flagged, and suddenly the income stops, because they never owned the audience. They rented access to it. The platform owned the relationship. When the terms changed, the creator had no choice but to accept them or leave. And sometimes they don’t even get that choice.

As we’ve noted in previous articles, this is the dependency trap. The more you rely on a platform, the more leverage it has over you. The more users are locked in, the more data the platform can extract, and the more it can charge advertisers for access to that data.

The AI Dilemma

Artificial intelligence adds another layer of complexity. AI models are being trained on personal data scraped from the web (your photos, your SSN, your posts) without your knowledge or consent. This data feeds systems that will shape the future of technology and affect us all.

But AI could also be part of the solution. Imagine an AI tool that reads through your privacy settings across every platform, summarizes what each company knows about you, and tells you exactly what to delete and what to opt out of. It could automate the tedious work of data hygiene.

However, there is a catch: you’d have to give that AI access to your data first. Which brings us back to the original problem: trust.

The Permanence Problem

Once your data is breached (and statistically, it will be), it’s gone forever. Not lost. Gone. On the dark web, in criminal databases, sold and resold across underground markets. There’s no getting it back. No deletion request can reach into the hands of hackers and erase what they’ve stolen.

The only defense is prevention: limit what you share, delete apps you don’t use, enable two-factor authentication, practice basic data hygiene. But even this is a losing game. You can’t control what third parties publish about you. You can’t prevent your information from being scraped and aggregated. You can’t opt out of government databases.

As De Jesus points out: “Once it’s on the dark web or even on the web in general, it’s literally there in perpetuity. We can take steps to limit the information we have exposed, but unfortunately the stuff that’s already out there — we’re at the mercy of the folks that have access to that.”

A New Paradigm in the Making

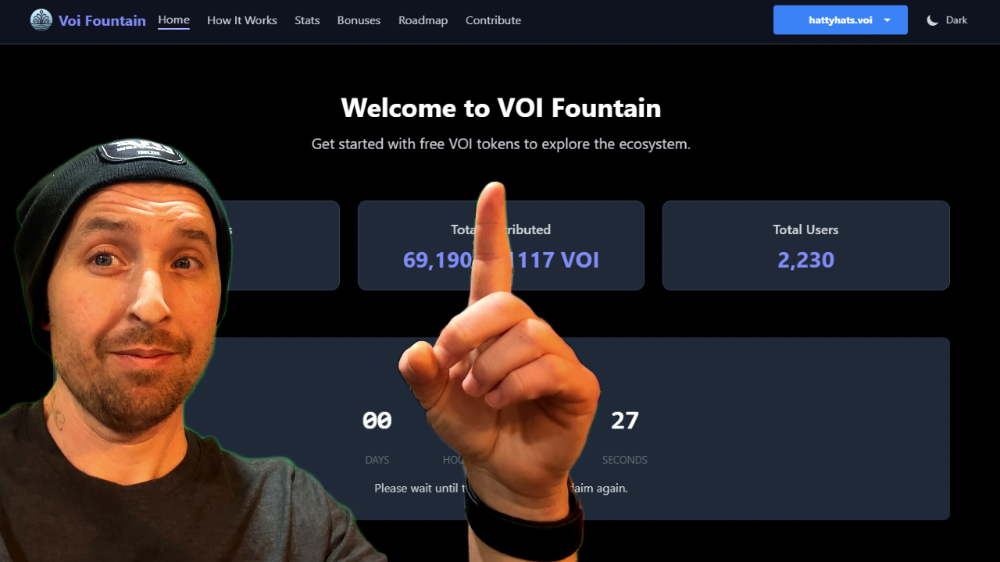

Everything we’ve discussed so far is part of the broader context that motivated the vision behind SourceLess: the New Web (Web3), a decentralized, human-centric internet built on blockchain technology, quantum encryption, and identity-based ownership. In this new environment, every user owns their data, controls their identity, and interacts securely without interference from third parties. True freedom online cannot exist without trust, and trust cannot exist without verifiable security.

SourceLess integrates blockchain encryption, zero-knowledge proofs, and decentralized authentication through STR.Domains, giving every user a permanent, tamper-proof digital identity.

Through these technologies, users can safely communicate, transact, and collaborate without the risks of data theft, censorship, or unauthorized surveillance.

SourceLess marks a return to technology that empowers people rather than exploiting and restricting them. The ecosystem is designed to be inclusive, transparent, and borderless, combining all these components of our digital life:

- STR.Domains gives individuals and organizations a verified Web3 identity — the foundation of digital ownership.

- StrTalk.net ensures real-time, encrypted communication, protecting personal and professional exchanges.

- Ccoin Finance merges traditional and decentralized finance, offering a private, borderless banking experience.

- ARES AI provides intelligent automation with privacy-first logic, adapting to various industries like healthcare, education, and business management.

- SLNN Mesh Network ensures secure data transmission across a censorship-resistant, global digital infrastructure.

These technologies are not perfect. They’re still developing. But they’re built on a different principle: you own your data by default, not by exception. Companies should be as transparent as they possibly can, without needing regulation to tell them to do so.

Wrapping up

The internet was intended as a tool for human connection and knowledge. Somewhere along the way, it was turned into a machine for extracting value from human behavior. With every click, search, message, and shared location, we feed algorithms designed to predict, manipulate, and monetize us.

SourceLess doesn’t promise to fix the internet overnight. But it offers a different foundation: one where your data belongs to you, where your identity is permanent and portable, where your communication is private by design.

The old model of free services in exchange for your data is breaking down. Regulation is coming. Public awareness is rising, and alternatives are emerging.

Just remember: Your data is valuable. Stop giving it away for free.