AI Copyright Battles: Artists vs Algorithms

Introduction

The meteoric rise of generative AI has revolutionized the creative landscape. Tools like DALL·E, Midjourney, ChatGPT, and Stable Diffusion now enable users to produce artwork, stories, music, and even films — all with a few prompts. But this new wave of machine creativity has also ignited a fierce debate: Who owns the art — the artist, the user, or the algorithm?

At the heart of the matter is a fundamental clash: traditional human artistry and copyright law versus AI-generated content trained on the works of millions — often without consent or compensation. As legal systems scramble to catch up, and artists protest the mass ingestion of their intellectual property, we are witnessing one of the most critical copyright battles of the digital age.

This article explores the core issues, key cases, stakeholders, ethical dilemmas, and the evolving legal frameworks in the battle of Artists vs Algorithms.

1. The Rise of Generative AI in Creative Industries

1.1 What is Generative AI?

Generative AI refers to systems capable of creating new content — text, images, audio, video — by learning patterns from large datasets. These systems include:

- Text: ChatGPT, Claude, Gemini

- Images: Midjourney, DALL·E, Stable Diffusion

- Music: Suno AI, Aiva, Jukebox (by OpenAI)

- Video: RunwayML, Sora (OpenAI), Pika Labs

Trained on vast corpora of internet data, including copyrighted works, these models can generate content in seconds — often in the styles of real, identifiable human artists.

1.2 Democratization of Creation

AI has empowered non-artists to create professional-grade art, music, and writing, sparking a creative revolution. Small businesses now generate logos without designers, hobbyists produce music without instruments, and students write essays with AI help.

However, this democratization has come at a cost — many of these tools are trained using copyrighted content scraped from the web without permissions, sparking backlash from creatives whose styles, voices, and ideas are being mimicked.

2. The Legal Quagmire: Who Owns AI-Generated Content?

2.1 Current Legal Gray Areas

Most countries' copyright laws were written in an era when only humans could create. Now AI models can generate finished content with minimal human input. The key legal questions include:

- Can AI-generated works be copyrighted?

- Does training AI on copyrighted material constitute infringement?

- Who is liable for unauthorized reproduction — the user or the developer?

- Is stylistic imitation a copyright violation?

In many jurisdictions, AI-generated content is not eligible for copyright protection unless a human exercises significant creative control — a definition still under debate.

2.2 Landmark Legal Cases

Getty Images vs. Stability AI (2023–)

Getty sued Stability AI (creator of Stable Diffusion), claiming the model was trained on millions of Getty’s copyrighted images without permission and that some generated images reproduce distorted versions of Getty’s watermark.

Key Issue: Whether training a model on copyrighted material without a license violates copyright, even if the output isn’t a direct copy.

Andersen v. Stability AI (2023–)

A group of artists filed a class-action lawsuit against AI companies alleging that their copyrighted artworks were used to train image-generating models without consent.

Key Issue: Whether generative AI tools are infringing artists' rights by allowing replication of distinct artistic styles.

New York Times vs. OpenAI & Microsoft (2023–)

NYT sued OpenAI and Microsoft, arguing that ChatGPT reproduced parts of its articles verbatim — and that its models were trained on NYT’s proprietary data without consent.

Key Issue: Whether LLMs can be held accountable for regurgitating copyrighted text and whether training on such content is fair use.

3. Artists’ Concerns: Theft or Inspiration?

3.1 Style Mimicry and “Creative Identity Theft”

Visual artists have found that tools like Midjourney or Stable Diffusion can generate images in their signature style — effectively replicating years of artistic development in seconds. Prompts like "in the style of Greg Rutkowski" were frequently used, sparking outrage.

Musicians have faced similar issues, since AI models can now recreate voices and musical styles. In 2023, a viral AI-generated track imitating Drake and The Weeknd was removed from streaming platforms, but not before raising alarms across the music industry.

3.2 Economic Impact

Freelancers, illustrators, and concept artists face declining demand as businesses turn to AI. Designers report job losses, publishers opt for AI-created book covers, and stock image creators see falling royalties.

For many, the core concern is economic displacement: AI companies are profiting from artists’ intellectual property while offering them no compensation.

3.3 Lack of Consent and Credit

Artists demand three things:

- Consent to use their work in AI training

- Credit when their style or content is used

- Compensation for commercial use of AI-generated derivatives

So far, few AI companies have offered up-front consent mechanisms or revenue-sharing models, which fuels resentment.

4. Arguments from AI Companies and Technologists

4.1 The “Fair Use” Defense

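Many AI developers claim their use of copyrighted content for training falls under “fair use”, a U.S. doctrine that allows limited use of copyrighted material without permission for purposes such as criticism, teaching, or research. Courts weigh several factors, including whether the use is transformative and how it affects the market for the original.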

They argue that AI models don’t store or replicate original works, but rather learn patterns, akin to how human artists study others.

4.2 Innovation and Public Benefit

AI companies argue that stifling model training with strict copyright enforcement would hinder innovation, research, and public access to transformative tools.

They compare training AI on art to how a student studies thousands of paintings — learning, not stealing.

4.3 Opt-Out Mechanisms (Reactive, Not Proactive)

Some companies, like OpenAI and Adobe, have introduced opt-out tools that let creators exclude their data from training. However, these have been criticized as reactive and hard to enforce: they arrive after models have already been trained, so the damage may already be done.
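One common opt-out signal is a site's robots.txt file, which can name a specific crawler such as OpenAI's GPTBot user agent. The sketch below uses Python's standard urllib.robotparser to show how a crawler could check that signal before fetching an image. The robots.txt contents, the example.com URL, and the may_fetch helper are illustrative assumptions. Honoring the file is voluntary on the crawler's side, which is part of why critics call such mechanisms hard to enforce.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt a portfolio site might publish to turn away
# one AI training crawler while still allowing every other bot.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def may_fetch(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    url = "https://example.com/gallery/piece-1.png"
    print(may_fetch(ROBOTS_TXT, "GPTBot", url))         # False
    print(may_fetch(ROBOTS_TXT, "SomeSearchBot", url))  # True
```

Note that this only covers future crawling. It does nothing about copies already collected, which mirrors the criticism above.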

5. Emerging Solutions: Can There Be a Middle Ground?

5.1 Licensing and Compensation Models

To bridge the divide, proposals have emerged for licensing systems where artists are paid if their work contributes to AI training datasets — akin to music royalties.

Examples:

- Shutterstock and Adobe Firefly pay contributors whose content was used in AI training.

- Spawning.ai helps artists check whether their work appears in AI training datasets and submit opt-out requests.

These approaches offer a path toward ethical AI — compensating creators while enabling innovation.

5.2 Watermarking and Content Authentication

Organizations like the Content Authenticity Initiative (CAI) and standards like C2PA are pushing for provenance systems that attach signed metadata to content, recording how it was made and making AI-generated material easier to track and label.

This helps:

- Identify what’s human-made vs AI-generated

- Enforce platform policies (e.g., AI-labeled content in elections)

- Support copyright audits
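As a rough illustration of the idea behind provenance metadata, the sketch below binds an "AI-generated" label to a file's exact bytes and signs the result. It is not the C2PA format: real content credentials rely on certificate-based signatures and a structured manifest, whereas this example assumes a shared secret key and a plain dictionary. The function and field names are hypothetical.

```python
import hashlib
import hmac
import json


def make_manifest(content: bytes, generator: str, ai_generated: bool, key: bytes) -> dict:
    """Build a signed provenance record tied to the exact bytes of content."""
    claim = {
        "sha256": hashlib.sha256(content).hexdigest(),
        "generator": generator,
        "ai_generated": ai_generated,
    }
    # Serialize deterministically so signer and verifier hash the same bytes.
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    signature = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify_manifest(content: bytes, manifest: dict, key: bytes) -> bool:
    """Check that the claim was not altered and still matches the content."""
    claim = manifest["claim"]
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    signature_ok = hmac.compare_digest(expected, manifest["signature"])
    content_ok = claim["sha256"] == hashlib.sha256(content).hexdigest()
    return signature_ok and content_ok


if __name__ == "__main__":
    key = b"demo-secret"            # assumption: a key held by the generating tool
    image = b"fake image bytes"     # stand-in for a real file's contents
    manifest = make_manifest(image, "example-image-model", True, key)
    print(verify_manifest(image, manifest, key))            # True
    print(verify_manifest(image + b"edit", manifest, key))  # False
```

A label like this survives only as long as the metadata travels with the file, so it supports audits and platform labeling but does not by itself prevent stripping or re-encoding.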

5.3 Style Opt-Out Registries

Artists are advocating for global registries that allow them to list styles or works they don’t want included in training datasets. However, implementing this at scale — especially for decentralized scraping — remains a technical and policy challenge.
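To make the scale problem concrete, here is a minimal sketch of how a dataset builder might honor a registry of opted-out works, assuming the registry stores SHA-256 fingerprints of files. The fingerprint and filter_opted_out names, and the registry structure itself, are hypothetical.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """Exact-match fingerprint of a file's bytes."""
    return hashlib.sha256(data).hexdigest()


def filter_opted_out(items: dict[str, bytes], registry: set[str]) -> dict[str, bytes]:
    """Keep only the items whose fingerprint is not listed in the opt-out registry."""
    return {
        name: data
        for name, data in items.items()
        if fingerprint(data) not in registry
    }


if __name__ == "__main__":
    opted_out_work = b"bytes of an artwork the artist opted out"
    other_work = b"bytes of some other artwork"
    registry = {fingerprint(opted_out_work)}

    scraped = {"a.png": opted_out_work, "b.png": other_work}
    print(list(filter_opted_out(scraped, registry)))  # ['b.png']
```

Exact hashing misses resized, cropped, or re-encoded copies, and it cannot express a "style" at all, which illustrates why registries of this kind are hard to implement at scale.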

6. Global Regulatory Landscape

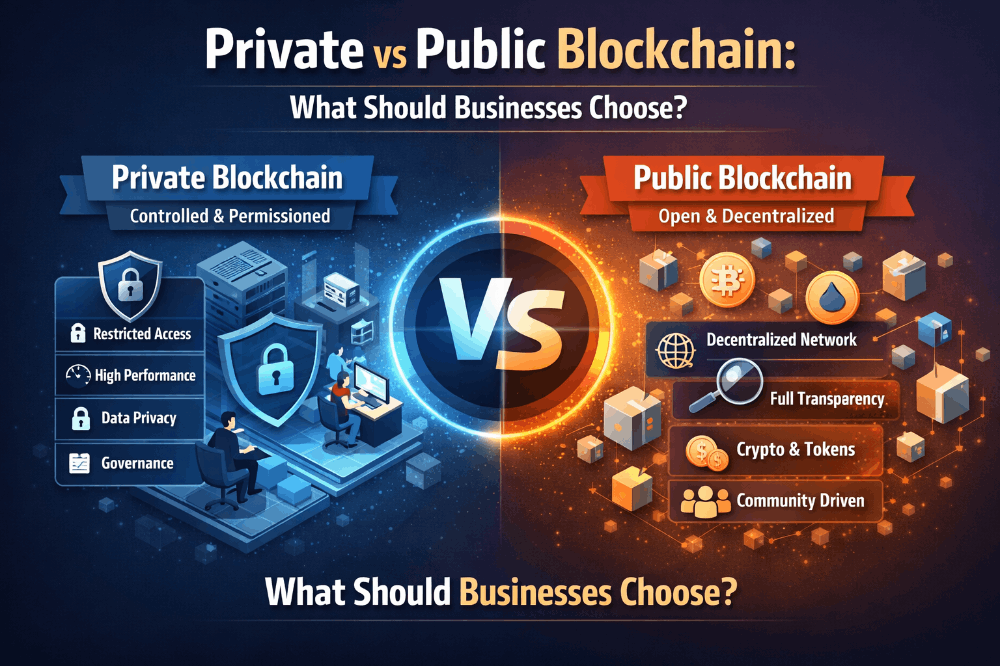

6.1 United States

The United States still relies largely on court cases to set precedent. The U.S. Copyright Office clarified in 2023 that AI-generated works without human authorship cannot be copyrighted. Legal battles like Getty, Andersen, and NYT vs. OpenAI will shape future rulings.

6.2 European Union

The EU AI Act, which entered into force in 2024 with obligations phasing in through 2026 and beyond, mandates transparency from AI developers, including a published summary of the content used to train general-purpose models. The EU also supports stronger copyright protections, especially for the creative sector.

6.3 United Kingdom

Consultations are ongoing, balancing support for AI innovation with artist protections. Proposals include giving creators the right to opt out and to receive compensation for training uses.

6.4 Japan, China, and Others

Japan has adopted a more AI-friendly stance, allowing wide data use for AI training, even from copyrighted sources. China, meanwhile, enforces stricter AI content labeling rules and is exploring AI-specific IP legislation.

7. Philosophical and Ethical Dimensions

7.1 What is Creativity?

If AI can replicate art, music, or literature convincingly, is creativity still uniquely human? Some argue that AI “creates” without feeling, intention, or originality — making it a tool, not an artist.

Others counter that if the result evokes emotion and meaning, the origin may be irrelevant. The debate is redefining what it means to be an artist in the digital age.

7.2 The Future of Human Expression

Artists fear being outcompeted or replaced. But others believe AI will become a collaborator, not a competitor — allowing artists to scale their ideas, explore new styles, and offload routine tasks.

The focus may shift from production to curation, from execution to imagination.

7.3 Cultural Preservation and Homogenization

If AI training is biased toward Western or commercial datasets, it risks erasing marginalized voices, indigenous art, or non-digitized traditions. Ensuring diversity in training data and fair representation is critical to avoid cultural homogenization.

8. Case Studies: Real-World Flashpoints

8.1 The “Heart on My Sleeve” Music Scandal

An anonymous creator known as Ghostwriter977 used AI to generate a song mimicking the voices of Drake and The Weeknd. The song went viral, and the music industry scrambled to respond.

Outcome: The song was removed from streaming platforms, but sparked a broader debate on AI impersonation, deepfakes, and identity rights.

8.2 Zarya of the Dawn Comic Book

An AI-assisted comic titled Zarya of the Dawn, created with Midjourney, was initially granted copyright by the U.S. Copyright Office — but later had the AI-generated portions stripped of protection.

Impact: Highlighted the murky waters of human-AI collaboration and the difficulty of assigning authorship.

8.3 Anime Artists vs. AI Imitation

In Japan and South Korea, anime artists organized against AI models that copied their visual styles and against the imitation works published with them. Platforms like Pixiv updated their policies to label and restrict AI-generated content.

Impact: Strengthened grassroots digital activism and artist unions advocating for legal reform.

Conclusion: Toward Ethical and Equitable AI Creativity

The AI copyright battle is not merely legal — it’s cultural, economic, and philosophical. Artists are right to demand fairness, recognition, and compensation for their contributions. At the same time, generative AI holds immense potential to unlock creativity for billions.

The path forward lies not in halting progress, but in designing systems that honor creators, respect rights, and encourage innovation. Governments must craft agile laws. Companies must embrace ethical data practices. And society must engage in open dialogue about what kind of creative future we want.

As the dust settles on the Artists vs Algorithms debate, one truth remains: the soul of creativity — human or machine-assisted — must never be stripped of its meaning, intent, and respect.
