The Competitive Dynamics of MEV Programming Languages: Crafting the Swiftest Bot!

Selecting the right programming language for your projects poses a formidable challenge, as preferences vary widely among individuals. Factors such as speed, readability, and familiarity with a specific language contribute to this diversity of opinions, making it a common and intricate decision-making process.

Having navigated this terrain multiple times in the past, I found myself delving into the realm of six different programming languages. While the journey was undoubtedly enriching, it eventually led me to discontinue the use of C# and Java, refining my focus on languages more attuned to the nuances of modern development.

In the Miner Extractable Value (MEV) space, the landscape is dominated by four mainstream programming languages: Python, JavaScript, Rust, and Golang. However, for the purpose of this exploration, I will concentrate on the first three. The aim is to conduct a rigorous speed performance benchmark, creating scenarios that closely emulate real-life trading situations. Through this benchmark, readers will glean valuable insights into the competitive dynamics of each language stack in the context of MEV.

Golang, despite its widespread adoption in the blockchain industry, is intentionally excluded from this comparison. The rationale behind this decision lies in its current deficit of open-source projects directly related to MEV, in contrast to the other three languages. The overarching goal of this benchmark session is to maintain a focus on mainstream relevance, considering the popularity of language stacks and the libraries most likely to be embraced by developers.

In summary, the process of selecting a programming language involves navigating a myriad of considerations, and the preferences expressed by developers are as diverse as the languages themselves. This exploration aims to shed light on the intricacies of this decision-making process by offering a focused analysis of Python, JavaScript, and Rust, ultimately providing readers with a nuanced understanding of the performance dynamics inherent in MEV development.

The Methodology Unveiled

In the preceding week, I introduced three Miner Extractable Value (MEV) templates crafted in Python, JavaScript, and Rust. Today, I embark on a comprehensive exploration, leveraging the same codebase to subject crucial methods utilized in MEV trading to rigorous benchmarking. These methods encapsulate a spectrum of tasks, each reflecting routine activities performed by MEV searchers. The spotlight is on gauging the performance of our system while executing specific tasks that are integral to the MEV trading landscape.

The identified methods and tasks span a diverse range, encompassing:

- Making simple HTTP requests to the node (involving the retrieval of blocks/transactions data).

- Retrieving all logs from a newly created block.

- Executing multicall requests for retrieving reserves data on thousands of Uniswap V2 pools.

- Subscribing to streams and decoding pertinent data, especially focusing on pending transactions.

- Crafting Flashbots bundles.

- Simulating and dispatching Flashbots bundles.

Each of these tasks mirrors the routine operations undertaken by MEV searchers, contributing significantly to the efficiency and effectiveness of their operations. Evaluating the performance of our system in the context of these specific tasks is pivotal, offering a nuanced understanding of its capabilities.

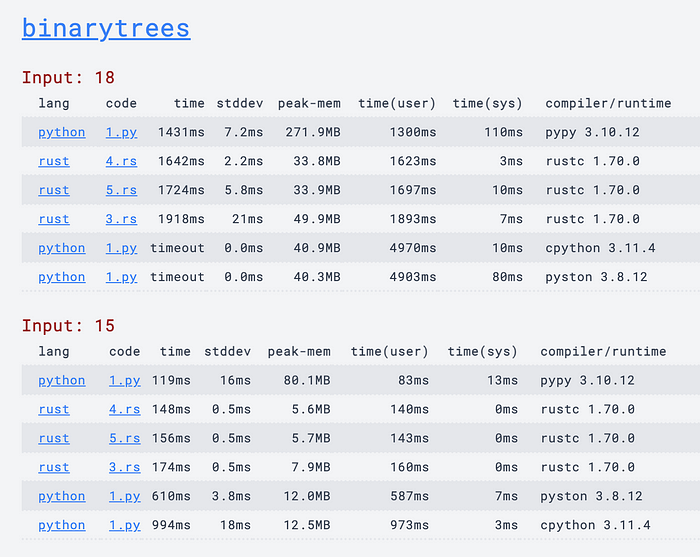

In the initial phases of this endeavor, I grappled with a lack of clarity regarding the potential performance of my MEV bot. Rather than embarking on an exhaustive series of individual benchmarks, I opted for a pragmatic approach, drawing insights from external benchmarks such as those available on websites like "Python VS Rust benchmarks." While these sources provided a degree of amusement and initial insights, it became evident that they only scratched the surface of the entire narrative.

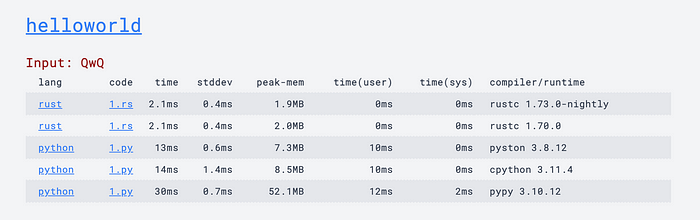

The External Glimpse: Python VS Rust

The benchmarks I initially referred to presented an engaging perspective on the performance of Python and Rust. Noteworthy observations included Rust's remarkable ability to execute a "hello world" command at least six times faster than Python. Conversely, the evaluation also underscored Python's dependence on the compiler/runtime for performance, with occasional instances where it outpaced Rust, albeit with potential timeout concerns.

However, it is crucial to recognize that these benchmarks, while insightful, didn't encapsulate the intricacies of MEV trading tasks. The performance dynamics of the specific functions central to MEV operations require a tailored examination, transcending the broad strokes of general programming language benchmarks.

Closing the Gap: Today's Article Unveils the Untold Story

The crux of today's article is to bridge the existing gap, offering a detailed exploration into the performance of MEV trading methods across Python, JavaScript, and Rust. By directly assessing the performance of our codebase in executing tasks integral to the MEV landscape, we aim to provide readers with a holistic and nuanced understanding of the capabilities and limitations inherent in each language stack.

Stay tuned as we delve into the intricacies of each language's performance in the context of MEV trading, unraveling the untold story behind the benchmarks and shedding light on the factors that truly matter in the world of Miner Extractable Value.

Oh, nice. So Rust can say “hello world” at least 6x faster than Python. And:

Okay, so Python’s performance relies heavily on the compiler/runtime, and it can sometimes go faster than Rust. But I guess what’s the point if it times out.

All this was very good, but it didn’t really tell us the whole story.

This is precisely the gap that today’s article aims to bridge for you.

Let’s start

Setting the Stage: Unveiling the Arsenal

Before immersing ourselves in the intricacies of benchmarking, let's first lay out the specifications of the formidable workhorse that will steer us through this exploration—the 2019 MacBook Pro. Here's a snapshot of its prowess:

Machine Specifications:

- Processor: 2.6GHz 6-core Intel Core i7

- Memory: 32GB 2667 MHz DDR4

- Storage: A modest 80GB of available space out of the capacious 1TB. A testimony to the accumulation of past projects, a common yet relatable practice.

This configuration paints a picture of a fairly typical laptop—one that serves as the canvas for our programming endeavors.

Blockchain Interaction and Node Connection:

When it comes to interacting with the blockchain, I'll be using my own Ethereum full node. Note that the node does not run on the MacBook itself: it sits on a separate machine on my local network. Connecting over the local network gives slightly faster execution times for the samples shown here. I connect to the node from my MacBook through the following endpoint:

Node Endpoint: http://192.168.200.182:8545

This strategic approach ensures a seamless interaction with the Ethereum blockchain, paving the way for efficient benchmarking.

Github Repository Update:

To facilitate a hands-on experience for our readers, the mev-templates repository on GitHub has been enriched with the benchmark files. This update enables enthusiasts to follow along and gain a firsthand understanding of the benchmarking process. The repository is accessible at the following link:

mev-templates GitHub Repository

Language Runtime/Compiler Versions:

To maintain transparency and align with best practices, it's imperative to disclose the versions of language runtimes and compilers employed in this benchmarking endeavor:

- Python: 3.10.12

- Node.js: 18.16.0

- Rustc/Cargo: 1.71.1

These versions serve as the bedrock for our benchmarking exercises, ensuring a stable and consistent foundation for evaluating the performance of Python, JavaScript, and Rust in the context of MEV trading.

With the stage impeccably set, we are poised to embark on a journey of benchmarking, unraveling the nuances of each language's performance in the dynamic realm of Miner Extractable Value (MEV) trading. Stay tuned as we dissect the benchmarks, providing insights that transcend mere numbers and delve into the real-world implications of language selection in MEV scenarios.

How we’ll benchmark performance

To begin, we will create the HTTP provider instance in each of the three languages and measure how long it takes. Although this small task has little impact on the bots’ overall performance, it serves as an illustrative example of our benchmarking approach.

✅ 1. Creating the HTTP provider

- Javascript (mev-templates/javascript/benchmarks.js):

You can try running the same code by commenting out everything other than the first benchmark task, which is:

// 1. Create HTTP provider

s = microtime.now();

const provider = new ethers.providers.JsonRpcProvider(HTTPS_URL);

took = microtime.now() - s;

console.log(`1. HTTP provider created | Took: ${took} microsec`);

Try running the command (from within mev-templates directory):

▶️ cd javascript

▶️ node benchmarks.js

You’ll get an output that looks like:

1. HTTP provider created | Took: 1443 microsec

1443 µs (microsecond) = 1.443 ms (millisecond) = 0.001443 s (second)

A single run is not a reliable measurement, since one-off effects such as network connectivity can skew it. Therefore, I will run the same code segment multiple times and average the results.

After performing 30 iterations, an interesting pattern shows up:

1. HTTP provider created | Took: 1124 microsec

1. HTTP provider created | Took: 33 microsec

1. HTTP provider created | Took: 27 microsec

1. HTTP provider created | Took: 23 microsec

1. HTTP provider created | Took: 40 microsec

...

The initial creation takes the longest, and the creation time goes down drastically afterwards. I’ll simply drop the first run and average the rest, which results in roughly 60 to 70 µs.

- Python (mev-templates/python/benchmarks.py):

For Python, we do the same thing as above and run the code below:

###########################

# 1️⃣ Create HTTP provider #

###########################

s = time.time()

w3 = Web3(Web3.HTTPProvider(HTTPS_URL))

took = (time.time() - s) * 1000000

print(f'1. HTTP provider created | Took: {took} microsec')

Run it (from the mev-templates directory) with:

▶️ cd python

▶️ python benchmarks.py

We’ll try running this 30 times as well:

1. HTTP provider created | Took: 1351.1180877685547 microsec

1. HTTP provider created | Took: 1018.0473327636719 microsec

1. HTTP provider created | Took: 1032.114028930664 microsec

1. HTTP provider created | Took: 936.9850158691406 microsec

1. HTTP provider created | Took: 950.5748748779297 microsec

...

The notable contrast likely stems from the distinct implementations. The average time taken for the provider creation code in Python is approximately 1100 µs.

However, there’s no need to feel disheartened yet. This is just the initial phase, and it involves the least critical piece of code that we’ll be benchmarking. In the majority of scenarios, the provider will be established prior to jumping into the core logic of our strategy. As a result, this particular code is unlikely to be executed while the bots are actively running.

- Rust (mev-templates/rust/benches/benchmarks.rs):

Let’s try running Rust code. Leave the code below and comment out the rest:

// 1. Create HTTP provider

let s = Instant::now();

let client = Provider::<Http>::try_from(env.https_url.clone()).unwrap();

let client = Arc::new(client);

let took = s.elapsed().as_micros();

println!("1. HTTP provider created | Took: {:?} microsec", took);

We’ll run this 30 times just like before:

1. HTTP provider created | Took: 94 microsec

1. HTTP provider created | Took: 7 microsec

1. HTTP provider created | Took: 5 microsec

1. HTTP provider created | Took: 5 microsec

1. HTTP provider created | Took: 4 microsec

...

Okay, that was unexpected. I expected Rust to be extremely fast, but this is still surprising. The average time for Rust is: 8 µs.
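The Javascript measurement above drops the first (warm-up) iteration and averages the rest. A minimal Python sketch of that procedure follows. The names `benchmark` and `create_provider` are illustrative assumptions, not part of mev-templates, and the stand-in function does no real work.

```python
import time
from statistics import mean


def benchmark(fn, iterations=30, warmup=1):
    """Time `fn` repeatedly; return per-run durations in microseconds, skipping warm-up runs."""
    samples = []
    for i in range(iterations):
        start = time.perf_counter()
        fn()
        elapsed_us = (time.perf_counter() - start) * 1_000_000
        if i >= warmup:  # drop the first run(s), which include one-off setup cost
            samples.append(elapsed_us)
    return samples


def create_provider():
    # Hypothetical stand-in for the provider constructor being measured
    return {"url": "http://localhost:8545"}


samples = benchmark(create_provider)
print(f"runs kept: {len(samples)} | mean: {mean(samples):.1f} microsec")
```

With `iterations=30` and `warmup=1`, the average is taken over the remaining 29 runs.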

⚡️ Now that you have a grasp of the benchmarking process, I won’t delve into explaining every task in meticulous detail, but instead will be providing the results for each task looking like this:

- Javascript: 65 µs

- Python: 1100 µs (= 1.1 ms)

- Rust: 8 µs

Let’s dive into the more interesting tasks now.

✅ 2. Get Block information

- Javascript: 18 ms (millisecond)

s = microtime.now();

let block = await provider.getBlock('latest');

took = (microtime.now() - s) / 1000;

console.log(`2. New block: #${block.number} | Took: ${took} ms`);

- Python: 12 ms (millisecond)

s = time.time()

block = w3.eth.get_block('latest')

took = (time.time() - s) * 1000

print(f'2. New block: #{block["number"]} | Took: {took} ms')

- Rust: 5 ms (millisecond)

use tokio::runtime::Runtime;

let rt = Runtime::new().unwrap();

let task = async {

let s = Instant::now();

let block = client.clone().get_block(BlockNumber::Latest).await.unwrap();

let took = s.elapsed().as_millis();

println!(

"2. New block: #{:?} | Took: {:?} ms",

block.unwrap().number.unwrap(),

took

);

};

rt.block_on(task);

✅ 3. Multicall request of 250 contract storage reads

- Javascript: 163 ms (= 0.16 s)

// 5. Multicall test: calling 250 requests using multicall

let reserves;

s = microtime.now()

reserves = await getUniswapV2Reserves(HTTPS_URL, Object.keys(pools).slice(0, 250));

took = (microtime.now() - s) / 1000;

console.log(`5. Multicall result for ${Object.keys(reserves).length} | Took: ${took} ms`);

- Python: 124 ms (= 0.124 s)

# 5️. Multicall test: calling 250 requests using multicall

s = time.time()

reserves = get_uniswap_v2_reserves(HTTPS_URL, list(pools.keys())[0:250])

took = (time.time() - s) * 1000

print(f'5. Multicall result for {len(reserves)} | Took: {took} ms')

- Rust: 80 ms (= 0.08 s)

let task = async {

let factory_addresses = vec!["0xC0AEe478e3658e2610c5F7A4A2E1777cE9e4f2Ac"];

let factory_blocks = vec![10794229u64];

let pools = load_all_pools_from_v2(env.wss_url.clone(), factory_addresses, factory_blocks)

.await

.unwrap();

let s = Instant::now();

let reserves = get_uniswap_v2_reserves(env.https_url.clone(), pools[0..250].to_vec())

.await

.unwrap();

let took = s.elapsed().as_millis();

println!(

"5. Multicall result for {:?} | Took: {:?} ms",

reserves.len(),

took

);

};

rt.block_on(task);

✅ 4. Batch multicall request (3,774 calls)

- Javascript: 1100 ms (= 1.1 s)

s = microtime.now();

reserves = await batchGetUniswapV2Reserves(HTTPS_URL, Object.keys(pools));

took = (microtime.now() - s) / 1000;

console.log(`5. Bulk multicall result for ${Object.keys(reserves).length} | Took: ${took} ms`);

- Python: 1600 ms (= 1.6 s)

This is done using multiprocessing, which is why it can be slower than the Javascript version. (A proper comparison would require Python to do the same thing that Javascript/Rust versions are doing.)

s = time.time()

reserves = batch_get_uniswap_v2_reserves(HTTPS_URL, pools)

took = (time.time() - s) * 1000

print(f'5. Bulk multicall result for {len(reserves)} | Took: {took} ms')

- Rust: 170 ms (= 0.17 s)

let task = async {

let factory_addresses = vec!["0xC0AEe478e3658e2610c5F7A4A2E1777cE9e4f2Ac"];

let factory_blocks = vec![10794229u64];

let pools = load_all_pools_from_v2(env.wss_url.clone(), factory_addresses, factory_blocks)

.await

.unwrap();

let s = Instant::now();

let reserves = batch_get_uniswap_v2_reserves(env.https_url.clone(), pools).await;

let took = s.elapsed().as_millis();

println!(

"5. Bulk multicall result for {:?} | Took: {:?} ms",

reserves.len(),

took

);

};

rt.block_on(task);

✅ 5. Pending transactions stream

This time, we’re going to try something new. We’ll open websocket connections using each template and start streaming pending transaction data from the mempool (= txpool). We also want to compare the time it takes each language to:

- receive data,

- decode data,

- retrieve transaction data with eth_getTransactionByHash,

which is basically everything required to deal with pending transactions. To do that, we’ll log the real-time data to CSV files and, at the end, compare the times logged by all the templates to see if there’s a noticeable difference.

- Javascript:

const { streamPendingTransactions } = require('./src/streams');

function loggingEventHandler(eventEmitter) {

const provider = new ethers.providers.JsonRpcProvider(HTTPS_URL);

// Rust pending transaction stream retrieves the full transaction by tx hash.

// So we have to do the same thing for JS code.

let now;

let benchmarkFile = path.join(__dirname, 'benches', '.benchmark.csv');

eventEmitter.on('event', async (event) => {

if (event.type == 'pendingTx') {

try {

let tx = await provider.getTransaction(event.txHash);

now = microtime.now();

let row = [tx.hash, now].join(',') + '\n';

fs.appendFileSync(benchmarkFile, row, { encoding: 'utf-8' });

} catch {

// pass

}

}

});

}

async function benchmarkStreams(streamFunc, handlerFunc, runTime) {

let eventEmitter = new EventEmitter();

const wss = await streamFunc(WSS_URL, eventEmitter);

await handlerFunc(eventEmitter);

setTimeout(async () => {

await wss.destroy();

eventEmitter.removeAllListeners();

}, runTime * 1000);

}

async function benchmarFunction() {

// ...

let streamFunc;

let handlerFunc;

// 6. Pending transaction async stream

streamFunc = streamPendingTransactions;

handlerFunc = loggingEventHandler;

console.log('6. Logging receive time for pending transaction streams. Wait 60 seconds...');

await benchmarkStreams(streamFunc, handlerFunc, 60);

// ...

}

- Python:

async def logging_event_handler(event_queue: aioprocessing.AioQueue):

    w3 = Web3(Web3.HTTPProvider(HTTPS_URL))

    f = open(BENCHMARK_DIR / '.benchmark.csv', 'w', newline='')

    wr = csv.writer(f)

    while True:

        try:

            data = await event_queue.coro_get()

            if data['type'] == 'pending_tx':

                _ = w3.eth.get_transaction(data['tx_hash'])

                now = datetime.datetime.now().timestamp() * 1000000

                wr.writerow([data['tx_hash'], int(now)])

        except Exception as _:

            break

async def benchmark_streams(stream_func: Callable,

                            handler_func: Callable,

                            run_time: int):

    event_queue = aioprocessing.AioQueue()

    stream_task = asyncio.create_task(stream_func(WSS_URL, event_queue, False))

    handler_task = asyncio.create_task(handler_func(event_queue))

    await asyncio.sleep(run_time)

    event_queue.put(0)

    stream_task.cancel()

    handler_task.cancel()

#######################################

# 6️⃣ Pending transaction async stream #

#######################################

stream_func = stream_pending_transactions

handler_func = logging_event_handler

print('6. Logging receive time for pending transaction streams. Wait 60 seconds...')

asyncio.run(benchmark_streams(stream_func, handler_func, 60))

- Rust:

pub async fn logging_event_handler(_: Arc<Provider<Ws>>, event_sender: Sender<Event>) {

let benchmark_file = Path::new("benches/.benchmark.csv");

let mut writer = csv::Writer::from_path(benchmark_file).unwrap();

let mut event_receiver = event_sender.subscribe();

loop {

match event_receiver.recv().await {

Ok(event) => match event {

Event::Block(_) => {}

Event::PendingTx(tx) => {

let now = Local::now().timestamp_micros();

writer.serialize((tx.hash, now)).unwrap();

}

},

Err(_) => {}

}

}

}

let task = async {

let ws = Ws::connect(env.wss_url.clone()).await.unwrap();

let provider = Arc::new(Provider::new(ws));

let (event_sender, _): (Sender<Event>, _) = broadcast::channel(512);

let mut set = JoinSet::new();

// try running the stream for n seconds

set.spawn(tokio::time::timeout(

std::time::Duration::from_secs(60),

stream_pending_transactions(provider.clone(), event_sender.clone()),

));

set.spawn(tokio::time::timeout(

std::time::Duration::from_secs(60),

logging_event_handler(provider.clone(), event_sender.clone()),

));

println!("6. Logging receive time for pending transaction streams. Wait 60 seconds...");

while let Some(res) = set.join_next().await {

println!("Closed: {:?}", res);

}

};

rt.block_on(task);

Running these streams for 60 seconds will result in:

- mev-templates/javascript/benches/.benchmark.csv

- mev-templates/python/benches/.benchmark.csv

- mev-templates/rust/benches/.benchmark.csv

that have logs like below:

0x54d0df28b42c86a98ee20d3b27d24067f9e87e5be35eeb46b7935f329cdfdb7e,1693053836703765

This tells us the pending transaction hash we received and the time at which we finished decoding the transaction retrieved by calling eth_getTransactionByHash.
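To make the row format concrete, here is a small Python sketch that splits one such row into its hash and a readable UTC time. It assumes the second column is a Unix timestamp in microseconds, which is what the logging code in the three templates writes; the helper name `parse_row` is mine.

```python
from datetime import datetime, timezone


def parse_row(line):
    """Split a 'tx_hash,timestamp_in_microseconds' row into (hash, UTC datetime)."""
    tx_hash, micros = line.strip().split(",")
    seconds, remainder = divmod(int(micros), 1_000_000)
    received_at = datetime.fromtimestamp(seconds, tz=timezone.utc).replace(microsecond=remainder)
    return tx_hash.lower(), received_at


row = "0x54d0df28b42c86a98ee20d3b27d24067f9e87e5be35eeb46b7935f329cdfdb7e,1693053836703765"
tx_hash, received_at = parse_row(row)
print(tx_hash[:10], received_at.isoformat())
```

The integer split with `divmod` keeps the full microsecond precision instead of going through a float.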

We now run:

import os

import pandas as pd

from pathlib import Path

_DIR = Path(os.path.dirname(os.path.abspath(__file__)))

def df_fmt(df: pd.DataFrame, name: str) -> pd.DataFrame:

    df.columns = ['tx_hash', name]

    df['tx_hash'] = df['tx_hash'].apply(lambda x: x.lower())

    return df

if __name__ == '__main__':

    js = df_fmt(pd.read_csv(_DIR / 'javascript/benches/.benchmark.csv', header=None), 'js')

    py = df_fmt(pd.read_csv(_DIR / 'python/benches/.benchmark.csv', header=None), 'py')

    rs = df_fmt(pd.read_csv(_DIR / 'rust/benches/.benchmark.csv', header=None), 'rs')

    bench = js.merge(py, on='tx_hash').merge(rs, on='tx_hash')

    bench['py - rs'] = bench['py'] - bench['rs']

    bench['js - rs'] = bench['js'] - bench['rs']

    bench['py - js'] = bench['py'] - bench['js']

    bench = bench.drop_duplicates(['tx_hash'], keep='last')

    bench.to_csv(_DIR / '.benchmark.csv', index=False)

This shows how the templates performed relative to each other.

🛑 The results for this will keep being investigated, so there may be changes to the results in the future. The Python version turned out to be very unstable, because it was using the sync version of HTTPProvider instead of the async version.

The results were quite interesting.

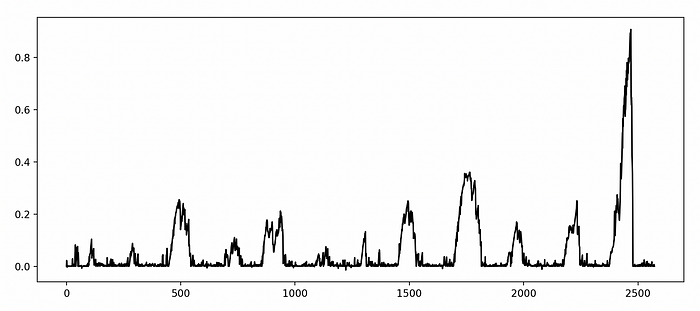

1. Comparing Python / Rust in seconds:

X: pending tx id / Y: Python latency in seconds (compared to Rust)

It’s evident that, in the majority of instances, Python experiences slower access to pending transaction data compared to Rust. Nonetheless, intermittent spikes are observable, and these could potentially arise from scenarios where both the JavaScript and Rust versions simultaneously dispatch eth_getTransactionByHash requests to my Geth node. Alternatively, these variations might emerge due to the asynchronous nature of eth_getTransactionByHash calls in the two other versions, which is absent in Python. (This aspect will undergo further scrutiny, as the Python version's outcomes don't appear stable enough to warrant in-depth discussion at this point.)

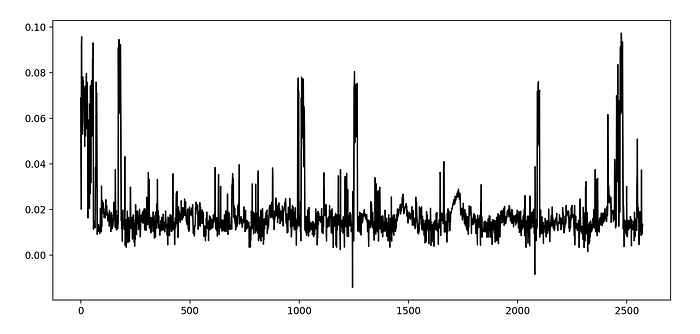

2. Comparing Javascript / Rust in seconds:

X: pending tx id / Y: JS latency in seconds (compared to Rust)

The time difference observed between Javascript and Rust outcomes is notably more reliable. It’s apparent that, on average, Javascript streams encounter a delay of 0.018 seconds (18 ms) in accessing the same data compared to the Rust version.

However, recall that it took:

- Javascript: 18 ms

- Python: 12 ms

- Rust: 5 ms

to obtain block information. Presuming eth_getTransactionByHash behaves similarly, the Javascript request would take about 13 ms longer than the Rust one (18 ms vs. 5 ms), so a significant portion of the 18 ms stream latency arises from this particular task.

👉 Benchmarking websocket streams was very difficult to pull off. But we kind of get the picture here. The network latency won’t be too different for either one of these languages, so we expect to see a few ms difference between Rust and the others, and that’s what we see here with Javascript / Rust.
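Since the Python series shows intermittent spikes, a median can be a more robust summary of the per-transaction delay than a mean. The following is a minimal standard-library sketch of that comparison between two logs. The sample rows are made up for illustration and are not measured data, and the helper names `load_log` and `latency_ms` are assumptions of mine.

```python
import csv
import io
from statistics import mean, median


def load_log(text):
    """Map lower-cased tx hash -> timestamp in microseconds (last occurrence wins)."""
    return {row[0].lower(): int(row[1]) for row in csv.reader(io.StringIO(text)) if row}


def latency_ms(slow, fast):
    """Per-transaction difference (slow - fast) in ms, for hashes present in both logs."""
    return [(slow[h] - fast[h]) / 1000 for h in slow.keys() & fast.keys()]


# Made-up sample rows for illustration only, not measured data
js_log = "0xaa,1000018000\n0xbb,1000520000\n0xcc,1001400000\n0xee,1002000000\n"
rs_log = "0xaa,1000000000\n0xbb,1000500000\n0xcc,1000900000\n0xdd,1000700000\n"

diffs = latency_ms(load_log(js_log), load_log(rs_log))
print(f"matched: {len(diffs)} | mean: {mean(diffs):.1f} ms | median: {median(diffs):.1f} ms")
```

In this toy data one transaction arrives 500 ms late, which pulls the mean far above the median of 20 ms. Hashes seen by only one of the two streams are ignored.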

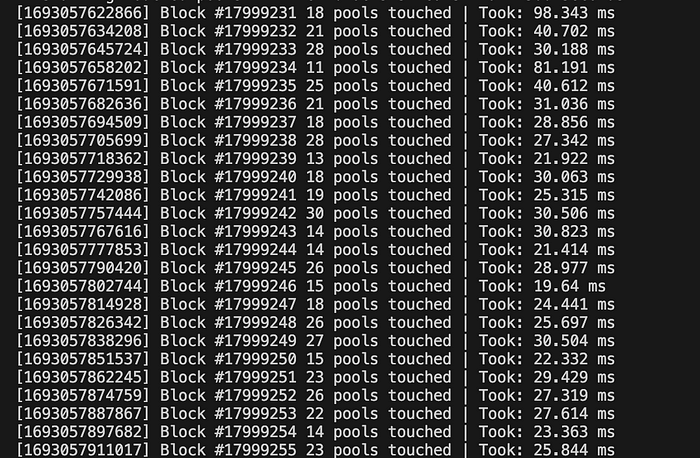

✅ 6. Retrieving touched pools on new block update

We’re going to try out something new again. This time, our focus shifts to initiating newHeads subscriptions from each template. Upon receiving data about a newly created block, we will extract all the logs associated with it. From these logs, we will narrow down the selection to those featuring the Sync event, allowing us to access the current reserves data from the relevant pools on Sushiswap V2.

🤙 We’ll also assume that the Python and Javascript versions experience a 20 ms delay in acquiring subscription data compared to the Rust version.
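The filtering step described above can be sketched in plain Python. This is not the templates' `get_touched_pool_reserves` implementation, which is not shown in this article; it is a minimal sketch that assumes the logs arrive as raw JSON-RPC dictionaries (as returned by eth_getLogs) and that the pools emit the standard Uniswap V2 `Sync(uint112,uint112)` event. The function name and the sample log are hypothetical.

```python
# keccak256("Sync(uint112,uint112)"), the Uniswap V2 pair Sync event signature
SYNC_TOPIC = "0x1c411e9a96e071241c2f21f7726b17ae89e3cab4c78be50e062b03a9fffbbad1"


def touched_pool_reserves(logs):
    """Return {pool_address: (reserve0, reserve1)} from the Sync logs of one block."""
    reserves = {}
    for log in logs:
        if not log["topics"] or log["topics"][0].lower() != SYNC_TOPIC:
            continue  # not a Sync event
        data = bytes.fromhex(log["data"][2:])
        reserve0 = int.from_bytes(data[0:32], "big")   # first 32-byte word
        reserve1 = int.from_bytes(data[32:64], "big")  # second 32-byte word
        reserves[log["address"].lower()] = (reserve0, reserve1)  # later logs overwrite earlier ones
    return reserves


# Hypothetical logs: one Sync event and one unrelated event
sample_logs = [
    {"address": "0x" + "11" * 20, "topics": [SYNC_TOPIC],
     "data": "0x" + f"{1000:064x}" + f"{2500:064x}"},
    {"address": "0x" + "22" * 20, "topics": ["0x" + "00" * 32], "data": "0x"},
]
print(touched_pool_reserves(sample_logs))
```

Because logs are processed in order, a pool that syncs several times within the block ends up with its latest reserves.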

- Javascript: 32 ms

let s = microtime.now();

let reserves = await getTouchedPoolReserves(provider, event.blockNumber);

let took = (microtime.now() - s) / 1000;

let now = Date.now();

console.log(`[${now}] Block #${event.blockNumber} ${Object.keys(reserves).length} pools touched | Took: ${took} ms`);

Running this along with the newHeads stream will result in something like:

- Python: 20 ms

s = time.time()

block_number = data['block_number']

reserves = get_touched_pool_reserves(w3, block_number)

took = (time.time() - s) * 1000

now = datetime.datetime.now()

print(f'[{now}] Block #{block_number} {len(reserves)} pools touched | Took: {took} ms')

- Rust: 10 ms

pub async fn touched_pools_event_handler(provider: Arc<Provider<Ws>>, event_sender: Sender<Event>) {

let mut event_receiver = event_sender.subscribe();

loop {

match event_receiver.recv().await {

Ok(event) => match event {

Event::Block(block) => {

let s = Instant::now();

match get_touched_pool_reserves(provider.clone(), block.block_number).await {

Ok(reserves) => {

let took = s.elapsed().as_millis();

let now = Local::now(); // wall-clock time (chrono); an Instant does not print as a readable timestamp

println!(

"[{:?}] Block #{:?} {:?} pools touched | Took: {:?} ms",

now,

block.block_number,

reserves.len(),

took

);

}

Err(_) => {}

}

}

Event::PendingTx(_) => {}

},

Err(_) => {}

}

}

}

✅ 7. Sending bundles to Flashbots

If we rely on the mempool for transaction submissions, the time taken will be contingent on the network status of our local node.

However, using Flashbots is different. They offer a private RPC endpoint for users, and it’s the network connectivity here that becomes our focus.

Another point to make here is that people rely heavily on the open-source Flashbots libraries available to them, which are:

- Javascript: https://github.com/flashbots/ethers-provider-flashbots-bundle (470 stars, 167 forks)

- Python: https://github.com/flashbots/web3-flashbots (346 stars, 182 forks)

- Rust: https://github.com/onbjerg/ethers-flashbots (347 stars, 88 forks)

This is why, in benchmarking the time required to send bundles to Flashbots, I took their examples and used them in our benchmarking, assuming that most people will readily take those examples and use them (much like myself 😙)

Sending bundles to Flashbots requires everyone to take three steps:

- Signing the desired bundles,

- Simulating the bundles on Flashbots’ server,

- Finally, sending the simulated bundles to Flashbots.

We shall examine the time taken for these three steps by sending a bundle that transfers 0.001 ETH to myself. The fee attached to this bundle will be deliberately set at a very low but functional level — sufficient to pass all simulations but intentionally not chosen by the builders.

- Javascript: 1.3 s

// 10. Sending Flashbots bundles

block = await provider.getBlock('latest');

blockNumber = block.number;

let nextBaseFee = calculateNextBlockBaseFee(block);

maxPriorityFeePerGas = BigInt(1);

maxFeePerGas = nextBaseFee + maxPriorityFeePerGas;

// Create/sign bundle

s = microtime.now();

let common = await bundler._common_fields();

amountIn = BigInt(parseInt(0.001 * 10 ** 18));

let tx = {

...common,

to: bundler.sender.address,

from: bundler.sender.address,

value: amountIn,

data: '0x',

gasLimit: BigInt(30000),

maxFeePerGas,

maxPriorityFeePerGas,

};

bundle = await bundler.toBundle(tx);

signedBundle = await bundler.flashbots.signBundle(bundle);

took = (microtime.now() - s) / 1000;

console.log(`- Creating bundle took: ${took} ms`);

// Simulating bundle

s = microtime.now();

const simulation = await bundler.flashbots.simulate(signedBundle, blockNumber);

if ('error' in simulation) {

console.warn(`Simulation Error: ${simulation.error.message}`)

return '';

} else {

console.log(`Simulation Success: ${JSON.stringify(simulation, null, 2)}`)

}

took = (microtime.now() - s) / 1000;

console.log(`- Running simulation took: ${took} ms`);

// Sending bundle

s = microtime.now();

const targetBlock = blockNumber + 1;

const replacementUuid = uuid.v4();

const bundleSubmission = await bundler.flashbots.sendRawBundle(signedBundle, targetBlock, { replacementUuid });

if ('error' in bundleSubmission) {

throw new Error(bundleSubmission.error.message)

}

took = (microtime.now() - s) / 1000;

console.log(`10. Sending Flashbots bundle ${bundleSubmission.bundleHash} | Took: ${took} ms`);

- Creating bundle: 20 ms

- Simulating bundle: 0.65 s

- Sending bundle: 0.65 s

- Python: 0.56 s

# Create/sign bundle

s = time.time()

common = bundler._common_fields

amount_in = int(0.001 * 10 ** 18)

tx = {

**common,

'to': bundler.sender.address,

'from': bundler.sender.address,

'value': amount_in,

'data': '0x',

'gas': 30000,

'maxFeePerGas': max_fee_per_gas,

'maxPriorityFeePerGas': max_priority_fee_per_gas,

}

bundle = bundler.to_bundle(tx)

took = (time.time() - s) * 1000

print(f'- Creating bundle took: {took} ms')

# Simulating bundle

s = time.time()

flashbots: Flashbots = bundler.w3.flashbots

try:

    simulated = flashbots.simulate(bundle, block_number)

except Exception as e:

    print('Simulation error', e)

took = (time.time() - s) * 1000

print(f'- Running simulation took: {took} ms')

# print(simulated)

# Sending bundle

s = time.time()

replacement_uuid = str(uuid4())

response: FlashbotsBundleResponse = flashbots.send_bundle(

bundle,

target_block_number=block_number + 1,

opts={'replacementUuid': replacement_uuid},

)

took = (time.time() - s) * 1000

total_took = (time.time() - _s) * 1000

print(f'10. Sending Flashbots bundle {response.bundle_hash().hex()} | Took: {took} ms')

- Creating bundle: 14 ms

- Simulating bundle: 0.3 s

- Sending bundle: 0.25 s

- Rust: 1.3 s

let bundler = Bundler::new();

let block = bundler

.provider

.get_block(BlockNumber::Latest)

.await

.unwrap()

.unwrap();

let next_base_fee = U256::from(calculate_next_block_base_fee(

block.gas_used.as_u64(),

block.gas_limit.as_u64(),

block.base_fee_per_gas.unwrap_or_default().as_u64(),

));

let max_priority_fee_per_gas = U256::from(1);

let max_fee_per_gas = next_base_fee + max_priority_fee_per_gas;

// Create/sign bundle

let s = Instant::now();

let common = bundler._common_fields().await.unwrap();

let to = NameOrAddress::Address(common.0);

let amount_in = U256::from(1) * U256::from(10).pow(U256::from(15)); // 0.001

let tx = Eip1559TransactionRequest {

to: Some(to),

from: Some(common.0),

data: Some(Bytes(bytes::Bytes::new())),

value: Some(amount_in),

chain_id: Some(common.2),

max_priority_fee_per_gas: Some(max_priority_fee_per_gas),

max_fee_per_gas: Some(max_fee_per_gas),

gas: Some(U256::from(30000)),

nonce: Some(common.1),

access_list: AccessList::default(),

};

let signed_tx = bundler.sign_tx(tx).await.unwrap();

let bundle = bundler.to_bundle(vec![signed_tx], block.number.unwrap());

let took = s.elapsed().as_millis();

println!("- Creating bundle took: {:?} ms", took);

// Simulating bundle

let s = Instant::now();

let simulated = bundler

.flashbots

.inner()

.simulate_bundle(&bundle)

.await

.unwrap();

for tx in &simulated.transactions {

if let Some(e) = &tx.error {

println!("Simulation error: {e:?}");

}

if let Some(r) = &tx.revert {

println!("Simulation revert: {r:?}");

}

}

let took = s.elapsed().as_millis();

println!("- Running simulation took: {:?} ms", took);

// Sending bundle

let s = Instant::now();

let pending_bundle = bundler

.flashbots

.inner()

.send_bundle(&bundle)

.await

.unwrap();

let took = s.elapsed().as_millis();

println!(

"10. Sending Flashbots bundle ({:?}) | Took: {:?} ms",

pending_bundle.bundle_hash, took

);

- Creating bundle: 8 ms

- Simulating bundle: 1.1 s

- Sending bundle: 0.25 s
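The Javascript and Rust snippets above derive the fee cap from the next block's base fee through a helper (calculateNextBlockBaseFee / calculate_next_block_base_fee) whose body is not shown here. Assuming it follows the standard EIP-1559 update rule, a minimal Python sketch would look like this. The function name `next_base_fee` and the sample numbers are mine, and the templates' helper may differ, for example by adding a safety margin.

```python
def next_base_fee(gas_used, gas_limit, base_fee):
    """Standard EIP-1559 base fee update (gas values in gas units, fees in wei)."""
    target = gas_limit // 2  # elasticity multiplier of 2
    if gas_used == target:
        return base_fee
    if gas_used > target:
        # Fee rises by up to 1/8 (12.5%) when the block is completely full
        delta = max(base_fee * (gas_used - target) // target // 8, 1)
        return base_fee + delta
    # Fee falls by up to 1/8 (12.5%) when the block is empty
    delta = base_fee * (target - gas_used) // target // 8
    return base_fee - delta


GWEI = 10 ** 9
base = next_base_fee(gas_used=30_000_000, gas_limit=30_000_000, base_fee=20 * GWEI)
max_priority_fee_per_gas = 1  # 1 wei tip, as in the snippets above
max_fee_per_gas = base + max_priority_fee_per_gas
print(base, max_fee_per_gas)
```

A full block raises a 20 gwei base fee to 22.5 gwei, so a bundle priced at that value plus a 1 wei tip stays valid for the next block while offering builders almost nothing.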

Summing Up: Unveiling the Performance Metrics

In this comprehensive exploration of MEV bot functionalities, we delved into the core operations and subjected them to rigorous benchmarking across three distinct programming languages. Let's distill the extensive findings into a succinct summary:

- Getting Block Information:

  - JavaScript (JS): 18 ms

  - Python (PY): 12 ms

  - Rust (RS): 5 ms

- Single Batch of Multicall Request:

  - JS: 163 ms

  - PY: 124 ms

  - RS: 80 ms

- Batch Multicall Requests:

  - JS: 1100 ms

  - PY: 1600 ms

  - RS: 170 ms

- Retrieve All Events in a Block and Filter by Sync Event:

  - JS: 32 ms

  - PY: 20 ms

  - RS: 10 ms

- Simulating/Sending Bundles to Flashbots:

  - JS: 1.3 s

  - PY: 0.56 s

  - RS: 1.3 s

The performance metrics unfurl a narrative where Rust emerges as a standout performer, showcasing remarkable agility in handling diverse tasks, especially those entailing asynchronous operations and CPU-bound processes.

However, a perplexing aspect surfaces when scrutinizing the Flashbots simulation, which evidently stands out as a notable bottleneck for Rust. Despite multiple tests conducted over the past few days, the results consistently echo this pattern. Intriguingly, eliminating the simulation component aligns Rust with the Python version.

This enigma surrounding the Flashbots segment beckons for collaborative problem-solving. If any adept minds uncover enhancements or optimizations for the existing implementation, I extend an open invitation to share insights. The quest for refining MEV bot functionalities is an ongoing journey, and community collaboration holds the key to unlocking new frontiers of efficiency. Your input is not only welcome but eagerly anticipated!