Emerging Artificial Intelligence

ChatGPT became famous almost overnight after its launch in November 2022, and millions of people are now using this emerging artificial intelligence tool. ChatGPT is a conversational AI chatbot developed by the San Francisco startup OpenAI. Designed as a messaging tool, it aims to converse and interact with humans as naturally as possible. ChatGPT has many functions: it can not only answer questions and explain complex topics, but also create content such as articles, social media posts, and even poems.

Within just a few months, ChatGPT's rapid iterations have also spurred many internet companies to join this "AI research and development race". On March 14, OpenAI publicly released GPT-4, a large multimodal model. Compared with the model behind ChatGPT, GPT-4 can not only process images but also respond more accurately. A week after the release of GPT-4, Google followed up with a blog post announcing that it would open limited access to its chatbot Bard. On March 16, Microsoft held an AI event and announced that it would launch an artificial intelligence service called Copilot, which embeds technology from the same family as ChatGPT into its office software to help users with their work. According to Data.ai figures reported on March 22, downloads of the new version of Microsoft Bing with ChatGPT built in jumped eightfold after its release in February. On March 23, ChatGPT received a major update: it can now connect to the Internet to retrieve up-to-date information and interact with more than 5,000 third-party plug-ins to perform tasks such as planning trips and comparing orders. On March 31, Sundar Pichai, CEO of Google and its parent company Alphabet, revealed on a podcast that Bard would move to PaLM, a large language model with more parameters, which would improve its mathematical and logical capabilities.

Many industry leaders, AI researchers, and scholars believe that AI products such as ChatGPT represent a fundamental technological shift, no less important than the birth of the web browser or the iPhone. However, the rapid development of such products has also brought many security risks and concerns.

ChatGPT is trained on massive amounts of public data and refined with human feedback. As a result, content created with ChatGPT may draw on others' work without the original authors' consent, which could lead to ownership and copyright disputes. Many educators also worry about plagiarism, because students can now easily use AI to write their papers. Furthermore, the content users submit when interacting with ChatGPT is not well protected. On March 20, the ChatGPT platform exposed some users' conversation data and payment information. On the 23rd, OpenAI CEO Sam Altman publicly apologized and admitted that a bug in an open-source library had caused some users' chat records with ChatGPT to be leaked.

Where does the "rabbit hole" of artificial intelligence lead?

Sharon Goldman, a senior writer covering artificial intelligence, wrote on the American technology website VentureBeat that, having left the laboratory, AI has fully entered the cultural zeitgeist. While it brings attractive opportunities, it also brings new dangers to real-world society, and humanity is entering a "weird new world" where AI, power, and politics are intertwined. Every area of AI is like the rabbit hole in Alice in Wonderland, leading into the unknown.

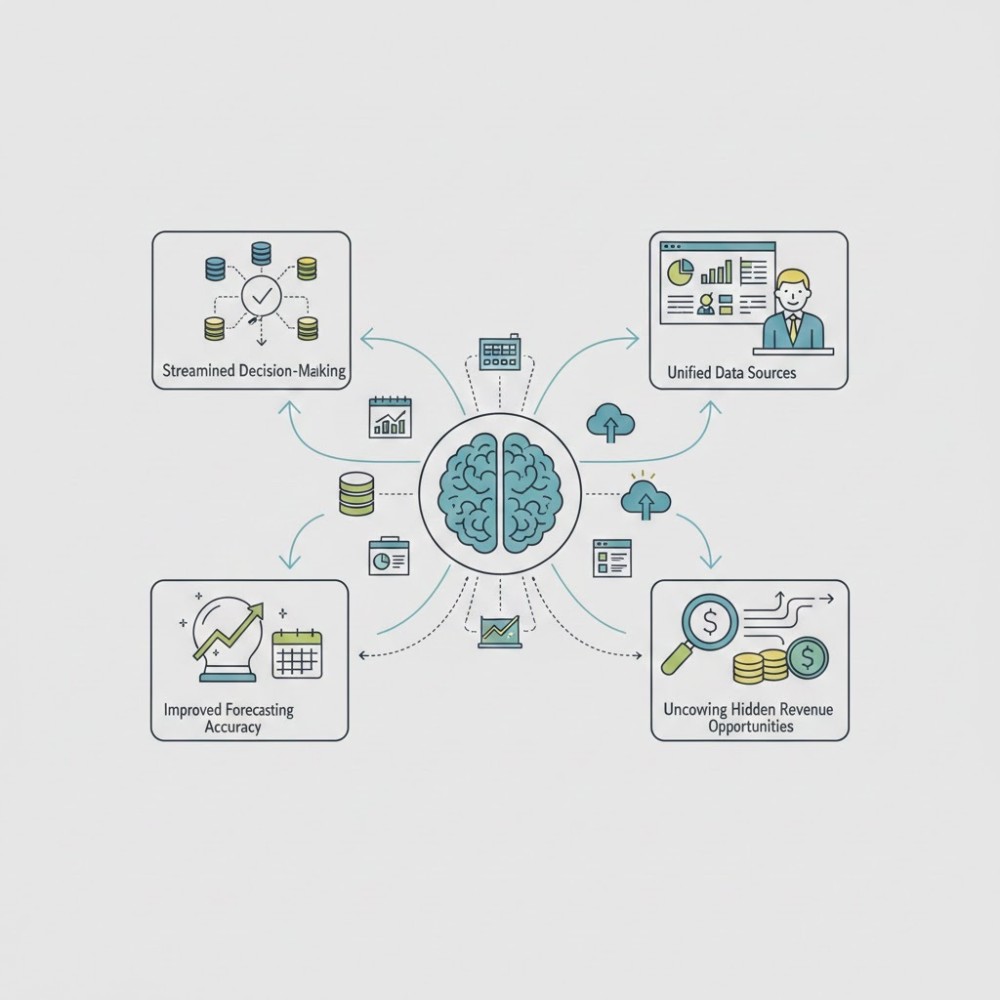

Goldman points out that there is a clear disconnect between applied AI and predictions about AI's future. Business executives are not worried about AI destroying humanity; they care only about whether AI and machine learning can boost worker productivity and improve customer service. However, from OpenAI and Anthropic to DeepMind and top companies throughout Silicon Valley, many discussions are still shaped by TESCREAL (an acronym for transhumanism, extropianism, singularitarianism, cosmism, rationalism, effective altruism, and longtermism). A large online community of rationalists and effective altruists, gathered around AI researcher Eliezer Yudkowsky, firmly believes that AI poses an existential risk, and many researchers from top AI labs take part in it.

Suresh Venkatasubramanian, former assistant director of the White House Office of Science and Technology Policy, believes that "a lot of what is masquerading as a technical discussion has a huge political agenda behind it." The discussion around artificial intelligence has shifted from technology and science to politics and power.

The Los Angeles Times took a different view, noting that OpenAI CEO Sam Altman, while declaring that he was "a bit scared" of the company's AI technology, was "helping to build and roll out the technology as widely as possible for profit." According to the report, the only logic that explains why the CEO of a high-profile technology company would repeatedly play up, on the public stage, concerns about the very products he is building and selling is that apocalyptic talk about "the terrifying power of artificial intelligence" helps his marketing strategy.

Regarding the divergent visions of AI's future, B Cavello, director of emerging technologies at the Aspen Institute, pointed out that people's predictions about the future are often full of disagreement, and that we should focus less on the differences and more on the common ground. For example, many who disagree about artificial general intelligence (AGI) agree that regulation is necessary and that AI developers should take more responsibility.