Bot Epidemic: Combatting Web 3.0 Social Platform Manipulation

Web 3.0 promises decentralized, community-driven social platforms. Like any new technology, it also brings new problems, and one of the most pressing is the infiltration of bots. These automated accounts can manipulate discussions, spread misinformation, and undermine the integrity of online interactions. Because Web 3.0 seeks to prioritize transparency, inclusivity, and authenticity, the way these platforms handle bots will shape the future of online social interaction.

Understanding the Bot Epidemic

Bots are software programs designed to perform specific tasks on the internet, often without human intervention. On social platforms, bots can be programmed to mimic human behavior: they create fake accounts, generate spam, amplify selected messages, or take part in coordinated attacks. Their presence undermines genuine engagement and distorts online discourse.

The Impact on Web 3.0 Social Platforms

For Web 3.0 social platforms that aim to foster genuine connections and meaningful interactions, bots pose a significant threat. Bots can artificially inflate metrics such as likes, shares, and comments, creating a false sense of popularity or relevance for certain content. This deceives users and also skews the algorithms that rank content, so low-quality or misleading posts get amplified.

Moreover, bots can be used to manipulate public opinion, spread propaganda, or sway political discourse. In an era where trust in online information is already fragile, the proliferation of bots further erodes confidence in the authenticity of social platforms.

Strategies for Bot Elimination

Combatting the bot epidemic requires a multi-faceted approach that combines technological solutions, community engagement, and platform governance. Here are some strategies that Web 3.0 social platforms can employ:

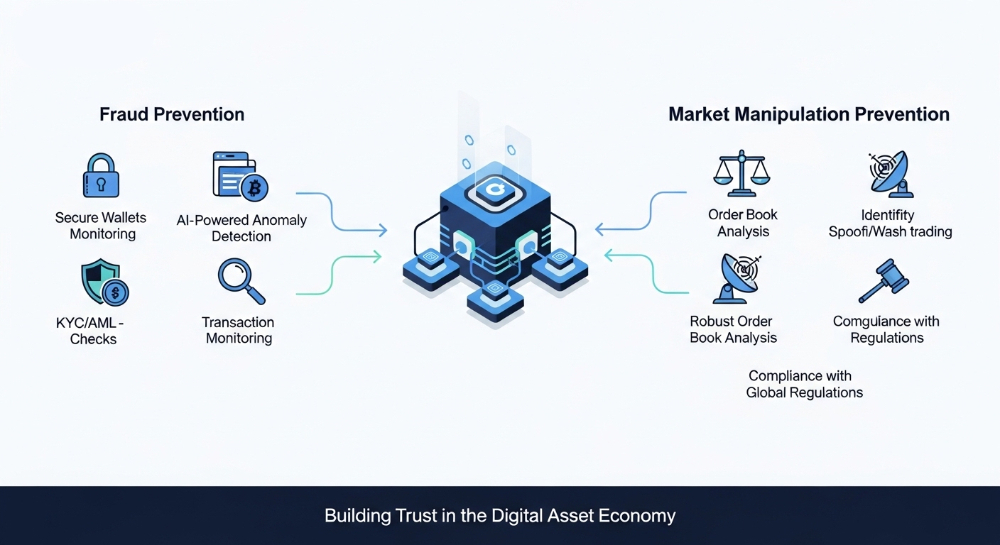

- Advanced Detection Algorithms: Using machine learning models to identify patterns indicative of bot behavior. These models can analyze user activity, content engagement, and network connections to flag suspicious accounts for further investigation.

- CAPTCHA and Authentication Mechanisms: Implementing CAPTCHA challenges and multi-factor authentication during account creation to distinguish human users from scripts and make automated bulk registration harder.

- Community Reporting Mechanisms: Empowering users to report suspicious accounts or activities, allowing for swift action by platform moderators to investigate and remove offending bots.

- Decentralized Governance: Embracing the principles of decentralization, Web 3.0 platforms can distribute moderation responsibilities across the community, ensuring that no single entity has unchecked control over content regulation.

- Transparency and Accountability: Providing users with visibility into the platform's moderation processes, including how bots are detected and mitigated. Establishing clear guidelines and enforcement mechanisms can foster trust and accountability within the community.
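
As a rough illustration of the detection strategy above, the sketch below scores an account from a few activity signals and flags it for human review. It is a minimal rule-based stand-in for the machine learning models mentioned in the list, not a production detector. The `AccountActivity` fields, the point weights, and the thresholds are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class AccountActivity:
    """Hypothetical per-account features; the field names are illustrative."""
    posts_per_hour: float
    duplicate_post_ratio: float  # share of posts repeating earlier text, 0..1
    account_age_days: int
    following: int
    followers: int


def bot_score(a: AccountActivity) -> int:
    """Return a heuristic score from 0 to 100; higher means more bot-like.

    All cutoffs and weights below are assumptions, not tuned values.
    """
    score = 0
    if a.posts_per_hour > 30:          # sustained high posting rate
        score += 35
    if a.duplicate_post_ratio > 0.5:   # mostly repeated content
        score += 30
    if a.account_age_days < 7:         # very new account
        score += 15
    # Follows many accounts but is followed by very few.
    if a.following > 0 and a.followers / a.following < 0.01:
        score += 20
    return score


def flag_for_review(a: AccountActivity, threshold: int = 60) -> bool:
    """Flag the account for investigation instead of removing it outright."""
    return bot_score(a) >= threshold


if __name__ == "__main__":
    suspect = AccountActivity(120.0, 0.9, 2, 5000, 10)
    regular = AccountActivity(0.2, 0.05, 400, 150, 180)
    print(flag_for_review(suspect), flag_for_review(regular))
```

Flagging rather than deleting keeps a human moderator or a community vote in the loop, which fits the reporting and governance strategies listed above. Fixed rules like these are easy to explain to users, but they are also easy for bot operators to evade, so in practice the signals and thresholds would need regular revision.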

The Road Ahead

Eliminating bots from Web 3.0 social platforms is not a one-time effort but an ongoing battle that requires vigilance and collaboration. As these platforms evolve, so must the strategies for combatting manipulation and preserving the integrity of online discourse.

By prioritizing transparency, community empowerment, and technological innovation, Web 3.0 social platforms can create environments where genuine connections thrive and authentic interactions flourish. In the fight against bots, the collective efforts of platform developers, users, and other stakeholders are essential to building a healthier and more resilient online ecosystem.