be your self

Usually, the fastest approach is to store each hash state in one or more SIMD registers. The multi-block function executes SIMD instructions that applies each step of the computation to all in-flight hash states.

Ascending to the next layer, the multi-message engine then schedules inputs to the multi-block function. This scheduler can get more complicated than it seems, especially with variable-length messages. It involves initialization of new hash states, appending pieces of message inputs, finalizing output values, and so on.

The relatively simple Merkle–Damgård construction powering SHA-256 makes scheduling manageable. The block function is invoked exactly once per 64 bytes of input data, plus optionally one additional time during finalization.

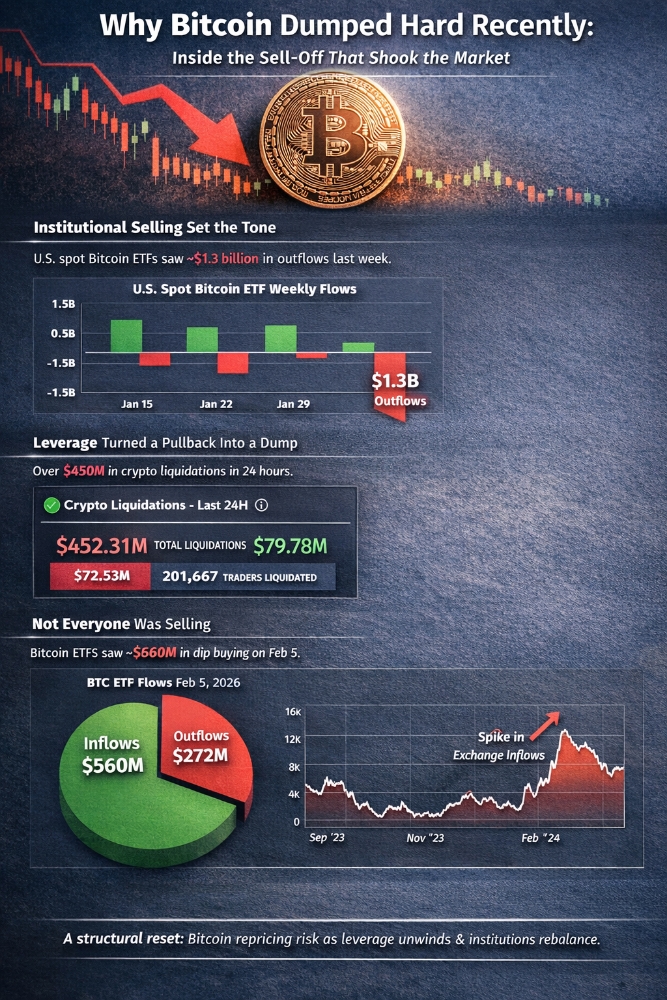

Indeed, there exist open-source implementations of multi-message SHA-256: minio/sha256-simd 1 in Go, and fd_sha256 in C. Both feature AVX2 and AVX512 backends, in the realm of ~10 Gbps (Zen 2, AVX2) and ~20 Gbps (Icelake Server, AVX512) per-core peak throughput respectively on recent x86_64 CPUs. Peak throughput is reached for message sizes (64*(n-1))+55.

The BLAKE3 hash function is implemented C, Rust, and Go. All three are highly optimized toward large message hashing. But currently, none support parallel hashing of small independent messages.

The following compares fd_sha256 vs BLAKE3-C throughput for multi-message hashing in AVX512 mode. The input size is (64*n)-9 for SHA-256 and 64*n for BLAKE3 (to account for SHA’s padding).